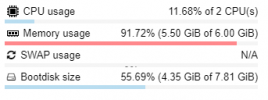

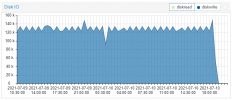

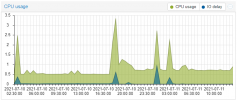

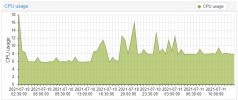

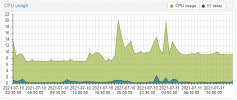

I updated my Proxmox to version 7. The openmediavault VM has its HDDs attached directly via SCSI (passthrough), but the VM failed and crashed my whole Proxmox machine.

It seems to be an error while reading from or writing to the HDDs. I did not have this problem on Proxmox 6.

Is there a bug with attaching drives directly to a VM?

Nothing we know of. Can you please open a new thread with the VM config (`qm config VMID`) posted? Also, do you see the IO errors on the Proxmox VE level or in the guest?