Seems to be caused by differences in how the devices controller in cgroupv1 behaves vs what lxc emulates. We'll probably fix this by rolling out a default config for cgroupv2-devices to restore the previous behavior.

This issue appears to be completely fixed now. Thank you!

Proxmox VE 7.0 (beta) released!

- Thread starter martin


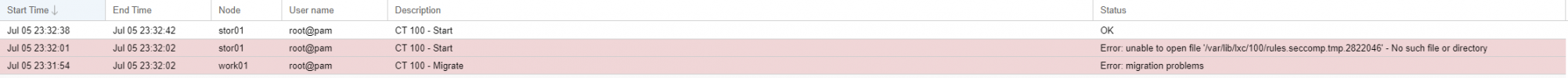

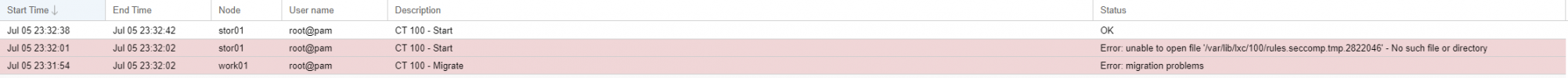

Encountered a few issues with existing containers failing to start after being migrated to other nodes in the cluster (3 nodes upgraded from 6.4 and 2 newly installed with the 7.0 beta).

Troubleshooting led to the following:

Code:

lxc-start: 101: conf.c: mount_autodev: 1222 Invalid cross-device link - Failed to mount tmpfs on "(null)"

lxc-start: 101: conf.c: lxc_setup: 3628 Failed to mount "/dev"

lxc-start: 101: start.c: do_start: 1265 Failed to setup container "101"

lxc-start: 101: sync.c: sync_wait: 36 An error occurred in another process (expected sequence number 5)

lxc-start: 101: start.c: __lxc_start: 2073 Failed to spawn container "101"

lxc-start: 101: tools/lxc_start.c: main: 308 The container failed to start

From there, I looked into a few areas until stumbling on the following: the container's directory was missing on the target node, and once it was created the container started:

Code:

23:27:58

root@work01:~# ls /var/lib/lxc/101/

ls: cannot access '/var/lib/lxc/101/': No such file or directory

23:28:49

root@work01:~# ls /var/lib/lxc/

100

23:28:52

root@work01:~# ll /var/lib/lxc/

total 8.0K

drwxr-xr-x 3 root 4.0K Jul 5 18:00 100/

drwxr-xr-x 2 root 4.0K Jul 5 17:53 .pve-staged-mounts/

23:29:02

root@work01:~# mkdir /var/lib/lxc/101/

mkdir: created directory '/var/lib/lxc/101/'

23:29:22

root@work01:~# ll /var/lib/lxc/101/

total 12K

-rw-r--r-- 1 root 916 Jul 5 23:29 config

drwxr-xr-x 2 root 4.0K Jul 5 23:29 rootfs/

-rw-r--r-- 1 root 265 Jul 5 23:29 rules.seccomp

23:29:48

root@work01:~# ll /var/lib/lxc/101/config

-rw-r--r-- 1 root 916 Jul 5 23:29 /var/lib/lxc/101/config

23:29:58

root@work01:~# ll /var/lib/lxc/

total 12K

drwxr-xr-x 4 root 4.0K Jul 5 23:31 100/

drwxr-xr-x 4 root 4.0K Jul 5 23:31 101/

drwxr-xr-x 3 root 4.0K Jul 5 23:31 .pve-staged-mounts/

At the moment, any containers created on a respective node will start without issue; if said container were to be migrated it would fail to start until its corresponding directory is created on the target node.
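The manual workaround from the transcript above (creating the missing container directory on the target node) could be scripted roughly as follows. This is only a sketch: the function name `ensure_ct_dir` and the `LXC_DIR` override are made up for illustration, and the default path is simply the one seen in the transcript.

```shell
# Hypothetical helper: create the per-container directory if it is missing.
# LXC_DIR is an illustrative override; the default is the path from the transcript.
ensure_ct_dir() {
    vmid="$1"
    base="${LXC_DIR:-/var/lib/lxc}"
    if [ -d "$base/$vmid" ]; then
        echo "exists: $base/$vmid"
    else
        mkdir -p "$base/$vmid" && echo "created: $base/$vmid"
    fi
}
```

On the target node this would be run as root before starting the container, for example `ensure_ct_dir 101 && pct start 101`.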

The first output below matches on 3 upgraded nodes (from 6.4); the second is from 2 newly installed nodes (7.0 beta).

Code:

~# pveversion -v

proxmox-ve: 7.0-2 (running kernel: 5.11.22-1-pve)

pve-manager: 7.0-6 (running version: 7.0-6/36bad4fc)

pve-kernel-5.11: 7.0-3

pve-kernel-helper: 7.0-3

pve-kernel-5.11.22-1-pve: 5.11.22-2

pve-kernel-5.11.21-1-pve: 5.11.21-1

ceph: 16.2.4-pve1

ceph-fuse: 16.2.4-pve1

corosync: 3.1.2-pve2

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown: residual config

ifupdown2: 3.0.0-1+pve5

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.21-pve1

libproxmox-acme-perl: 1.1.1

libproxmox-backup-qemu0: 1.2.0-1

libpve-access-control: 7.0-4

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.0-4

libpve-guest-common-perl: 4.0-2

libpve-http-server-perl: 4.0-2

libpve-storage-perl: 7.0-7

libqb0: 1.0.5-1

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 4.0.9-2

lxcfs: 4.0.8-pve1

novnc-pve: 1.2.0-3

proxmox-backup-client: 2.0.1-1

proxmox-backup-file-restore: 2.0.1-1

proxmox-mini-journalreader: 1.2-1

proxmox-widget-toolkit: 3.2-4

pve-cluster: 7.0-3

pve-container: 4.0-5

pve-docs: 7.0-3

pve-edk2-firmware: 3.20200531-1

pve-firewall: 4.2-2

pve-firmware: 3.2-4

pve-ha-manager: 3.3-1

pve-i18n: 2.4-1

pve-qemu-kvm: 6.0.0-2

pve-xtermjs: 4.12.0-1

qemu-server: 7.0-7

smartmontools: 7.2-pve2

spiceterm: 3.2-2

vncterm: 1.7-1

zfsutils-linux: 2.0.4-pve1

Code:

~# pveversion -v

proxmox-ve: 7.0-2 (running kernel: 5.11.22-1-pve)

pve-manager: 7.0-6 (running version: 7.0-6/36bad4fc)

pve-kernel-5.11: 7.0-3

pve-kernel-helper: 7.0-3

pve-kernel-5.11.22-1-pve: 5.11.22-2

ceph: 16.2.4-pve1

ceph-fuse: 16.2.4-pve1

corosync: 3.1.2-pve2

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown2: 3.0.0-1+pve5

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.21-pve1

libproxmox-acme-perl: 1.1.1

libproxmox-backup-qemu0: 1.2.0-1

libpve-access-control: 7.0-4

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.0-4

libpve-guest-common-perl: 4.0-2

libpve-http-server-perl: 4.0-2

libpve-storage-perl: 7.0-7

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 4.0.9-2

lxcfs: 4.0.8-pve1

novnc-pve: 1.2.0-3

proxmox-backup-client: 2.0.1-1

proxmox-backup-file-restore: 2.0.1-1

proxmox-mini-journalreader: 1.2-1

proxmox-widget-toolkit: 3.2-4

pve-cluster: 7.0-3

pve-container: 4.0-5

pve-docs: 7.0-3

pve-edk2-firmware: 3.20200531-1

pve-firewall: 4.2-2

pve-firmware: 3.2-4

pve-ha-manager: 3.3-1

pve-i18n: 2.4-1

pve-qemu-kvm: 6.0.0-2

pve-xtermjs: 4.12.0-1

qemu-server: 7.0-7

smartmontools: 7.2-1

spiceterm: 3.2-2

vncterm: 1.7-1

zfsutils-linux: 2.0.4-pve1
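Comparing the two listings by eye is tedious. One way to surface the differences, assuming each node's `pveversion -v` output has been saved to a file (the helper name `pveversion_diff` and the file names are hypothetical), might be:

```shell
# Hypothetical helper: show lines that differ between two saved
# `pveversion -v` outputs.
pveversion_diff() {
    # diff exits with status 1 when the files differ; that is expected here.
    diff "$1" "$2" || true
}
# Example: pveversion_diff upgraded-node.txt fresh-node.txt
```

Applied to the two listings above, the only differing lines are `pve-kernel-5.11.21-1-pve`, `ifupdown: residual config` and `libqb0` (present only on the upgraded nodes) and `smartmontools` (7.2-pve2 versus 7.2-1).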


Can you please post the container config?

pct config VMID

Also, what distro+version is running in that container?

Code:

00:31:56

root@work01:~# pct config 101

arch: amd64

cores: 4

hostname: mon-lxc

memory: 4096

net0: name=eth0,bridge=vmbr0,hwaddr=2A:FF:0A:9F:F0:7B,ip=dhcp,tag=100,type=veth

onboot: 0

ostype: debian

rootfs: ceph-hybrid-storage:vm-101-disk-0,size=20G

swap: 0

unprivileged: 1

Code:

Linux mon-lxc 5.11.22-1-pve #1 SMP PVE 5.11.22-2 (Fri, 02 Jul 2021 16:22:45 +0200) x86_64

root@mon-lxc:~# lsb_release -d

Description: Debian GNU/Linux 10 (buster)


Thanks, we'll look into it!

Can you update to the just released pve-container version 4.0-6 and try with that one? It contains a fix for this issue.
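Whether a node already has the fixed package can be checked by comparing the installed `pve-container` version against 4.0-6. The sketch below uses GNU `sort -V` for the comparison; the helper name `has_ct_fix` is hypothetical, and `sort -V` only approximates Debian version ordering, so on the host itself `dpkg --compare-versions` is the more canonical tool.

```shell
# Hypothetical helper: succeeds when version $1 sorts at or after 4.0-6.
has_ct_fix() {
    [ "$(printf '%s\n' "$1" 4.0-6 | sort -V | head -n 1)" = "4.0-6" ]
}
# On a node:
#   has_ct_fix "$(dpkg-query -W -f='${Version}' pve-container)" && echo "fix installed"
```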

Apologies for the wait (a few things delayed this); I performed a few tests to confirm the fix, for example:

Before migrating container:

Code:

04:25:28

root@stor01:~# pct config 101

Configuration file 'nodes/stor01/lxc/101.conf' does not exist

Code:

2021-07-06 04:26:15 shutdown CT 101

2021-07-06 04:26:23 use dedicated network address for sending migration traffic (10.0.15.31)

2021-07-06 04:26:23 starting migration of CT 101 to node 'stor01' (10.0.15.31)

2021-07-06 04:26:24 volume 'ceph-hybrid-storage:vm-101-disk-0' is on shared storage 'ceph-hybrid-storage'

2021-07-06 04:26:24 start final cleanup

2021-07-06 04:26:25 start container on target node

2021-07-06 04:26:25 # /usr/bin/ssh -e none -o 'BatchMode=yes' -o 'HostKeyAlias=stor01' root@10.0.15.31 pct start 101

2021-07-06 04:26:30 migration finished successfully (duration 00:00:15)

TASK OK

Code:

04:26:07

root@stor01:~# pct config 101

arch: amd64

cores: 4

hostname: mon-lxc

memory: 4096

net0: name=eth0,bridge=vmbr0,hwaddr=2A:FF:0A:9F:F0:7B,ip=dhcp,tag=100,type=veth

onboot: 0

ostype: debian

rootfs: ceph-hybrid-storage:vm-101-disk-0,size=20G

swap: 0

unprivileged: 1

Confirmed resolved; thank you for looking into this so quickly!

Thanks for the report and the feedback for the fix!

Naturally, if you would like to try BTRFS as root filesystem then that is only possible in the PVE 7.0 ISO

What is the benefit of BTRFS for the root filesystem? I thought ZFS was supposed to be the king of everything?

ZFS could surely be called a king of (local) filesystems, and we definitely recommend it to anyone unsure. With BTRFS in technology preview that's a no-brainer, but we plan to do so even if the BTRFS integration becomes stable.

We got quite a few requests for BTRFS over the years, so one big reason for adding it is definitely user demand.

BTRFS is also designed a bit differently: snapshots are a much looser concept than in ZFS. That adds benefits, like being able to roll back to any snapshot and not just the most recent one, but it also has disadvantages, like being harder to use in replication.

For some users it may also just be a preference to use a file system that is in the Linux kernel tree, even if there are certain trade-offs.

After the Ceph update I get this by e-mail for each OSD:

pve01.cloudfighter.de : Jul 7 00:08:41 : ceph : a password is required ; PWD=/ ; USER=root ; COMMAND=nvme wus4bb096d7p3e4 smart-log-add --json /dev/nvme2n1

I never set a password for the ceph user manually, is this needed now?


No. That's Ceph's smart-mon usage trying to call nvme-cli; you can just install that package manually to make it go away. We'll default to doing that for new Pacific installations anyway.

Hello,

I updated Proxmox to version 7.

I have problems with my privileged LXC container: the devices do not work anymore!

Example :

arch: amd64

cores: 4

hostname: zwave

memory: 2048

net0: name=eth0,bridge=vmbr0,firewall=1,hwaddr=XXXXXXX,ip=dhcp,ip6=auto,type=veth

onboot: 1

ostype: debian

parent: MAJ_5_1_0

protection: 1

rootfs: local-zfs:subvol-105-disk-0,size=8G

startup: order=2,up=5

swap: 0

lxc.cgroup.devices.allow: c 189:2 rwm

lxc.cgroup.devices.allow: c 166:1 rwm

lxc.mount.entry: /dev/bus/usb/001/003 dev/bus/usb/001/003 none bind,optional,create=file

lxc.mount.entry: /dev/ttyACM1 dev/ttyACM1 none bind,optional,create=file

The ttyACM1 device is not working anymore. Do you have any idea?

Thanks


see https://forum.proxmox.com/threads/p...strough-not-working-anymore.92025/post-400916

You need to change the lxc.cgroup keys to lxc.cgroup2 (for example, lxc.cgroup.devices.allow becomes lxc.cgroup2.devices.allow).

I hope this helps!
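For a config like the one posted above, the key rename could be previewed with a small `sed` filter. This is a sketch under assumptions: the helper name `to_cgroup2` is made up, it only handles the `devices` keys shown in this thread, and the config path in the usage comment is the usual PVE location rather than something stated in the posts.

```shell
# Hypothetical helper: print a container config with cgroup v1 device keys
# renamed to their cgroup v2 equivalents. The file itself is not modified.
to_cgroup2() {
    sed 's/^lxc\.cgroup\.devices\./lxc.cgroup2.devices./' "$1"
}
# Example (path is an assumption): to_cgroup2 /etc/pve/lxc/105.conf
```

Printing to standard output instead of editing in place makes it easy to review the result before touching the real config.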

Thank you very much!

Could you tell us what parameters you had set there? In theory, some things, like moving only a subset of cgroups to v2, *could* work with lxc (but I wouldn't recommend it for production use).

Hi,

do you have any update on this?

I have the same error, but I already set the kernel command line:

GRUB_CMDLINE_LINUX="systemd.unified_cgroup_hierarchy=0"

Cheers

Daniel

Is the container which does not start privileged? If yes, you might want to check the following thread (roughly from the post in the link):

https://forum.proxmox.com/threads/l...ter-upgrade-from-6-4-to-7-0.92034/post-401299

We're currently working on finding a solution to the issue.

I hope this helps!

Hello,

after updating Proxmox from 6 to 7, I get the following error when starting a pct container:

Code:

run_buffer: 316 Script exited with status 1

lxc_setup: 3686 Failed to run mount hooks

do_start: 1265 Failed to setup container "100"

sync_wait: 36 An error occurred in another process (expected sequence number 5)

__lxc_start: 2073 Failed to spawn container "100"

TASK ERROR: startup for container '100' failed

pct config 100:

Code:

arch: amd64

cores: 1

hostname: Status

memory: 1024

net0: name=eth0,bridge=vmbr0,firewall=1,gw=5.XXX.XXX.XXX,gw6=2a01:XXXXXX,hwaddr=8E:22:F5:62:90:17,ip=5.XXXXX/29,ip6=2a01:XXXXXX/64,type=veth

onboot: 1

ostype: debian

rootfs: local:100/vm-100-disk-0.raw,size=10G

swap: 512

unprivileged: 1

Please share the debug logs - https://pve.proxmox.com/pve-docs/chapter-pct.html#_obtaining_debugging_logs

and check if the issue is resolved when you switch to the old hybrid cgroup layout as described in:

https://pve.proxmox.com/pve-docs/chapter-pct.html#pct_cgroup

Thanks!
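Before and after switching layouts, it can help to confirm which cgroup layout the host actually booted with. A minimal sketch, assuming the usual convention that `/sys/fs/cgroup` is a `cgroup2fs` mount on a unified host and a `tmpfs` on a legacy or hybrid one (the helper name `cgroup_layout` is hypothetical):

```shell
# Hypothetical helper: classify the cgroup layout from the filesystem type
# reported for /sys/fs/cgroup.
cgroup_layout() {
    case "$1" in
        cgroup2fs) echo "unified (cgroup v2)" ;;
        tmpfs)     echo "legacy or hybrid (cgroup v1)" ;;
        *)         echo "unknown: $1" ;;
    esac
}
# On the host:
#   cgroup_layout "$(stat -fc %T /sys/fs/cgroup)"
#   grep -o 'systemd.unified_cgroup_hierarchy=[01]' /proc/cmdline
```

If the `grep` prints nothing, the kernel parameter did not make it onto the running kernel's command line, for example because the boot loader configuration was not regenerated or the host was not rebooted.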


After changing the cgroup version and updating the Proxmox kernel boot configuration, it still doesn't work. The debug output is below.

Debug:

Code:

root@vhost07 ~ # pct start 100 --debug

run_buffer: 316 Script exited with status 1

lxc_setup: 3686 Failed to run mount hooks

do_start: 1265 Failed to setup container "100"

sync_wait: 36 An error occurred in another process (expected sequence number 5)

__lxc_start: 2073 Failed to spawn container "100"

driver AppArmor

INFO conf - conf.c:run_script_argv:332 - Executing script "/usr/share/lxc/hooks/lxc-pve-prestart-hook" for container "100", config section "lxc"

DEBUG terminal - terminal.c:lxc_terminal_peer_default:665 - No such device - The process does not have a controlling terminal

DEBUG seccomp - seccomp.c:parse_config_v2:656 - Host native arch is [3221225534]

INFO seccomp - seccomp.c:parse_config_v2:807 - Processing "reject_force_umount # comment this to allow umount -f; not recommended"

INFO seccomp - seccomp.c:do_resolve_add_rule:524 - Set seccomp rule to reject force umounts

INFO seccomp - seccomp.c:do_resolve_add_rule:524 - Set seccomp rule to reject force umounts

INFO seccomp - seccomp.c:do_resolve_add_rule:524 - Set seccomp rule to reject force umounts

INFO seccomp - seccomp.c:parse_config_v2:807 - Processing "[all]"

INFO seccomp - seccomp.c:parse_config_v2:807 - Processing "kexec_load errno 1"

INFO seccomp - seccomp.c:do_resolve_add_rule:564 - Adding native rule for syscall[246:kexec_load] action[327681:errno] arch[0]

INFO seccomp - seccomp.c:do_resolve_add_rule:564 - Adding compat rule for syscall[246:kexec_load] action[327681:errno] arch[1073741827]

INFO seccomp - seccomp.c:do_resolve_add_rule:564 - Adding compat rule for syscall[246:kexec_load] action[327681:errno] arch[1073741886]

INFO seccomp - seccomp.c:parse_config_v2:807 - Processing "open_by_handle_at errno 1"

INFO seccomp - seccomp.c:do_resolve_add_rule:564 - Adding native rule for syscall[304:open_by_handle_at] action[327681:errno] arch[0]

INFO seccomp - seccomp.c:do_resolve_add_rule:564 - Adding compat rule for syscall[304:open_by_handle_at] action[327681:errno] arch[1073741827]

INFO seccomp - seccomp.c:do_resolve_add_rule:564 - Adding compat rule for syscall[304:open_by_handle_at] action[327681:errno] arch[1073741886]

INFO seccomp - seccomp.c:parse_config_v2:807 - Processing "init_module errno 1"

INFO seccomp - seccomp.c:do_resolve_add_rule:564 - Adding native rule for syscall[175:init_module] action[327681:errno] arch[0]

INFO seccomp - seccomp.c:do_resolve_add_rule:564 - Adding compat rule for syscall[175:init_module] action[327681:errno] arch[1073741827]

INFO seccomp - seccomp.c:do_resolve_add_rule:564 - Adding compat rule for syscall[175:init_module] action[327681:errno] arch[1073741886]

INFO seccomp - seccomp.c:parse_config_v2:807 - Processing "finit_module errno 1"

INFO seccomp - seccomp.c:do_resolve_add_rule:564 - Adding native rule for syscall[313:finit_module] action[327681:errno] arch[0]

INFO seccomp - seccomp.c:do_resolve_add_rule:564 - Adding compat rule for syscall[313:finit_module] action[327681:errno] arch[1073741827]

INFO seccomp - seccomp.c:do_resolve_add_rule:564 - Adding compat rule for syscall[313:finit_module] action[327681:errno] arch[1073741886]

INFO seccomp - seccomp.c:parse_config_v2:807 - Processing "delete_module errno 1"

INFO seccomp - seccomp.c:do_resolve_add_rule:564 - Adding native rule for syscall[176:delete_module] action[327681:errno] arch[0]

INFO seccomp - seccomp.c:do_resolve_add_rule:564 - Adding compat rule for syscall[176:delete_module] action[327681:errno] arch[1073741827]

INFO seccomp - seccomp.c:do_resolve_add_rule:564 - Adding compat rule for syscall[176:delete_module] action[327681:errno] arch[1073741886]

INFO seccomp - seccomp.c:parse_config_v2:807 - Processing "ioctl errno 1 [1,0x9400,SCMP_CMP_MASKED_EQ,0xff00]"

INFO seccomp - seccomp.c:do_resolve_add_rule:547 - arg_cmp[0]: SCMP_CMP(1, 7, 65280, 37888)

INFO seccomp - seccomp.c:do_resolve_add_rule:564 - Adding native rule for syscall[16:ioctl] action[327681:errno] arch[0]

INFO seccomp - seccomp.c:do_resolve_add_rule:547 - arg_cmp[0]: SCMP_CMP(1, 7, 65280, 37888)

INFO seccomp - seccomp.c:do_resolve_add_rule:564 - Adding compat rule for syscall[16:ioctl] action[327681:errno] arch[1073741827]

INFO seccomp - seccomp.c:do_resolve_add_rule:547 - arg_cmp[0]: SCMP_CMP(1, 7, 65280, 37888)

INFO seccomp - seccomp.c:do_resolve_add_rule:564 - Adding compat rule for syscall[16:ioctl] action[327681:errno] arch[1073741886]

INFO seccomp - seccomp.c:parse_config_v2:807 - Processing "keyctl errno 38"

INFO seccomp - seccomp.c:do_resolve_add_rule:564 - Adding native rule for syscall[250:keyctl] action[327718:errno] arch[0]

INFO seccomp - seccomp.c:do_resolve_add_rule:564 - Adding compat rule for syscall[250:keyctl] action[327718:errno] arch[1073741827]

INFO seccomp - seccomp.c:do_resolve_add_rule:564 - Adding compat rule for syscall[250:keyctl] action[327718:errno] arch[1073741886]

INFO seccomp - seccomp.c:parse_config_v2:1017 - Merging compat seccomp contexts into main context

INFO start - start.c:lxc_init:855 - Container "100" is initialized

INFO cgfsng - cgroups/cgfsng.c:cgfsng_monitor_create:1070 - The monitor process uses "lxc.monitor/100" as cgroup

DEBUG storage - storage/storage.c:storage_query:233 - Detected rootfs type "dir"

DEBUG storage - storage/storage.c:storage_query:233 - Detected rootfs type "dir"

INFO cgfsng - cgroups/cgfsng.c:cgfsng_setup_limits_legacy:2772 - Limits for the legacy cgroup hierarchies have been setup

INFO cgfsng - cgroups/cgfsng.c:cgfsng_payload_create:1178 - The container process uses "lxc/100/ns" as inner and "lxc/100" as limit cgroup

INFO start - start.c:lxc_spawn:1757 - Cloned CLONE_NEWUSER

INFO start - start.c:lxc_spawn:1757 - Cloned CLONE_NEWNS

INFO start - start.c:lxc_spawn:1757 - Cloned CLONE_NEWPID

INFO start - start.c:lxc_spawn:1757 - Cloned CLONE_NEWUTS

INFO start - start.c:lxc_spawn:1757 - Cloned CLONE_NEWIPC

DEBUG start - start.c:lxc_try_preserve_namespace:139 - Preserved user namespace via fd 67 and stashed path as user:/proc/2301027/fd/67

DEBUG start - start.c:lxc_try_preserve_namespace:139 - Preserved mnt namespace via fd 68 and stashed path as mnt:/proc/2301027/fd/68

DEBUG start - start.c:lxc_try_preserve_namespace:139 - Preserved pid namespace via fd 69 and stashed path as pid:/proc/2301027/fd/69

DEBUG start - start.c:lxc_try_preserve_namespace:139 - Preserved uts namespace via fd 70 and stashed path as uts:/proc/2301027/fd/70

DEBUG start - start.c:lxc_try_preserve_namespace:139 - Preserved ipc namespace via fd 71 and stashed path as ipc:/proc/2301027/fd/71

DEBUG conf - conf.c:idmaptool_on_path_and_privileged:2948 - The binary "/usr/bin/newuidmap" does have the setuid bit set

DEBUG conf - conf.c:idmaptool_on_path_and_privileged:2948 - The binary "/usr/bin/newgidmap" does have the setuid bit set

DEBUG conf - conf.c:lxc_map_ids:3018 - Functional newuidmap and newgidmap binary found

INFO start - start.c:do_start:1085 - Unshared CLONE_NEWNET

DEBUG cgfsng - cgroups/cgfsng.c:cgfsng_setup_limits_legacy:2767 - Set controller "memory.limit_in_bytes" set to "1073741824"

DEBUG cgfsng - cgroups/cgfsng.c:cgfsng_setup_limits_legacy:2767 - Set controller "memory.memsw.limit_in_bytes" set to "1610612736"

DEBUG cgfsng - cgroups/cgfsng.c:cgfsng_setup_limits_legacy:2767 - Set controller "cpu.shares" set to "1024"

DEBUG cgfsng - cgroups/cgfsng.c:cgfsng_setup_limits_legacy:2767 - Set controller "cpuset.cpus" set to "3"

INFO cgfsng - cgroups/cgfsng.c:cgfsng_setup_limits_legacy:2772 - Limits for the legacy cgroup hierarchies have been setup

WARN cgfsng - cgroups/cgfsng.c:cgfsng_setup_limits:2837 - Invalid argument - Ignoring cgroup2 limits on legacy cgroup system

DEBUG conf - conf.c:idmaptool_on_path_and_privileged:2948 - The binary "/usr/bin/newuidmap" does have the setuid bit set

DEBUG conf - conf.c:idmaptool_on_path_and_privileged:2948 - The binary "/usr/bin/newgidmap" does have the setuid bit set

DEBUG conf - conf.c:lxc_map_ids:3018 - Functional newuidmap and newgidmap binary found

NOTICE utils - utils.c:lxc_drop_groups:1345 - Dropped supplimentary groups

WARN cgfsng - cgroups/cgfsng.c:fchowmodat:1293 - No such file or directory - Failed to fchownat(64, memory.oom.group, 65536, 0, AT_EMPTY_PATH | AT_SYMLINK_NOFOLLOW )

DEBUG start - start.c:lxc_try_preserve_namespace:139 - Preserved net namespace via fd 5 and stashed path as net:/proc/2301027/fd/5

INFO conf - conf.c:run_script_argv:332 - Executing script "/usr/share/lxc/lxcnetaddbr" for container "100", config section "net"

DEBUG network - network.c:netdev_configure_server_veth:849 - Instantiated veth tunnel "veth100i0 <--> vethxE4gzq"

NOTICE utils - utils.c:lxc_drop_groups:1345 - Dropped supplimentary groups

NOTICE utils - utils.c:lxc_switch_uid_gid:1321 - Switched to gid 0

NOTICE utils - utils.c:lxc_switch_uid_gid:1330 - Switched to uid 0

INFO start - start.c:do_start:1196 - Unshared CLONE_NEWCGROUP

DEBUG conf - conf.c:lxc_mount_rootfs:1394 - Mounted rootfs "/var/lib/lxc/100/rootfs" onto "/usr/lib/x86_64-linux-gnu/lxc/rootfs" with options "(null)"

INFO conf - conf.c:setup_utsname:846 - Set hostname to "Status"

DEBUG network - network.c:setup_hw_addr:3814 - Mac address "8E:22:F5:62:90:17" on "eth0" has been setup

DEBUG network - network.c:lxc_network_setup_in_child_namespaces_common:3964 - Network device "eth0" has been setup

INFO network - network.c:lxc_setup_network_in_child_namespaces:4022 - Finished setting up network devices with caller assigned names

INFO conf - conf.c:mount_autodev:1182 - Preparing "/dev"

DEBUG conf - conf.c:mount_autodev:1212 - Using mount options: size=500000,mode=755

INFO conf - conf.c:mount_autodev:1242 - Prepared "/dev"

DEBUG conf - conf.c:lxc_mount_auto_mounts:725 - Invalid argument - Tried to ensure procfs is unmounted

DEBUG conf - conf.c:lxc_mount_auto_mounts:740 - Invalid argument - Tried to ensure sysfs is unmounted

DEBUG conf - conf.c:mount_entry:2116 - Remounting "/sys/fs/fuse/connections" on "/usr/lib/x86_64-linux-gnu/lxc/rootfs/sys/fs/fuse/connections" to respect bind or remount options

DEBUG conf - conf.c:mount_entry:2135 - Flags for "/sys/fs/fuse/connections" were 4096, required extra flags are 0

DEBUG conf - conf.c:mount_entry:2144 - Mountflags already were 4096, skipping remount

DEBUG conf - conf.c:mount_entry:2179 - Mounted "/sys/fs/fuse/connections" on "/usr/lib/x86_64-linux-gnu/lxc/rootfs/sys/fs/fuse/connections" with filesystem type "none"

INFO conf - conf.c:run_script_argv:332 - Executing script "/usr/share/lxcfs/lxc.mount.hook" for container "100", config section "lxc"

DEBUG conf - conf.c:run_buffer:305 - Script exec /usr/share/lxcfs/lxc.mount.hook 100 lxc mount produced output: missing /var/lib/lxcfs/proc/ - lxcfs not running?

ERROR conf - conf.c:run_buffer:316 - Script exited with status 1

ERROR conf - conf.c:lxc_setup:3686 - Failed to run mount hooks

ERROR start - start.c:do_start:1265 - Failed to setup container "100"

ERROR sync - sync.c:sync_wait:36 - An error occurred in another process (expected sequence number 5)

DEBUG network - network.c:lxc_delete_network:4180 - Deleted network devices

ERROR start - start.c:__lxc_start:2073 - Failed to spawn container "100"

WARN start - start.c:lxc_abort:1016 - No such process - Failed to send SIGKILL via pidfd 66 for process 2301053

startup for container '100' failed
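In a debug log this long, the decisive lines are easy to miss. A small filter, sketched here with a hypothetical helper name `lxc_log_errors`, keeps only the `ERROR` lines and any output produced by hook scripts:

```shell
# Hypothetical helper: keep ERROR lines and hook script output from an LXC
# debug log. Reads the given file(s), or standard input when none is given.
lxc_log_errors() {
    grep -E '^ERROR |produced output:' "$@"
}
# Example: pct start 100 --debug 2>&1 | lxc_log_errors
```

On the log above, the first line this prints is the hook output `missing /var/lib/lxcfs/proc/ - lxcfs not running?`, which suggests checking the lxcfs service on the host (for instance with `systemctl status lxcfs`) as a next step.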