Proxmox VE 6.0 released!

- Thread starter martin


I did a fresh install of Proxmox VE 6 but imported a ZFS pool from 5.4. When I run zpool status -t, it shows "trim unsupported" on my ZFS pool1, which consists of Crucial MX500 SSDs. Why is it not supported?

    root@pve1:~# zpool status -t
      pool: pool1
     state: ONLINE
      scan: scrub repaired 0B in 0 days 01:03:32 with 0 errors on Sun Aug 11 01:27:33 2019
    config:

            NAME        STATE     READ WRITE CKSUM
            pool1       ONLINE       0     0     0
              mirror-0  ONLINE       0     0     0
                sdb     ONLINE       0     0     0  (trim unsupported)
                sdc     ONLINE       0     0     0  (trim unsupported)
              mirror-1  ONLINE       0     0     0
                sdd     ONLINE       0     0     0  (trim unsupported)
                sde     ONLINE       0     0     0  (trim unsupported)
              mirror-2  ONLINE       0     0     0
                sdf     ONLINE       0     0     0  (trim unsupported)
                sdg     ONLINE       0     0     0  (trim unsupported)

    errors: No known data errors

      pool: rpool
     state: ONLINE
      scan: none requested
    config:

            NAME                                                   STATE     READ WRITE CKSUM
            rpool                                                  ONLINE       0     0     0
              mirror-0                                             ONLINE       0     0     0
                ata-INTEL_SSDSC2BB160G4T_BTWL409602P5160MGN-part3  ONLINE       0     0     0  (100% trimmed, completed at Wed 14 Aug 2019 09:20:59 AM +08)
                ata-INTEL_SSDSC2BB160G4T_BTWL411200WV160MGN-part3  ONLINE       0     0     0  (100% trimmed, completed at Wed 14 Aug 2019 09:20:59 AM +08)

    errors: No known data errors

@Stoiko Ivanov Thanks for the explanation. It seems that consumer SSDs will not have TRIM enabled behind an LSI HBA in IT mode; only enterprise SSDs will work.

Sorry for not opening a new thread.
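
To check which disks are affected on a given host, the kernel's view can be compared with what ZFS reports: `lsblk --discard` shows DISC-GRAN/DISC-MAX of 0B for devices where the block layer sees no discard support, and the vdevs ZFS flags can be filtered out of `zpool status -t`. A minimal sketch, assuming the status text is piped in on stdin; the function name `trim_unsupported_vdevs` is hypothetical:

```shell
# Hypothetical helper: print the device names that `zpool status -t`
# marks as "(trim unsupported)". Reads the status output on stdin.
trim_unsupported_vdevs() {
  # The first field of each matching line is the vdev/device name.
  awk '/\(trim unsupported\)/ { print $1 }'
}

# Usage on a live system (not executed here):
#   zpool status -t pool1 | trim_unsupported_vdevs
#   lsblk --discard /dev/sdb   # DISC-GRAN/DISC-MAX of 0B: no discard support
```

If `lsblk` already reports 0B for the SSDs behind the HBA, ZFS has nothing to send TRIM to, which matches the "trim unsupported" state above.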

Since upgrading to 6.0, I have noticed that my 4-node cluster has become unstable. At random times, up to 3 of the 4 nodes suddenly drop all connections and appear as if they have rebooted. However, my machines' iDRAC logs show no reboots or power issues. This may be related to https://bugzilla.proxmox.com/show_bug.cgi?id=2326, as what is described there is exactly what is happening to my cluster as well. Does anyone know if there are any fixes for this yet? I need my cluster to be stable again; it's really starting to annoy me that it keeps randomly restarting!!

All 4 of my nodes have the following packages

Code:

    proxmox-ve: 6.0-2 (running kernel: 5.0.18-1-pve)
    pve-manager: 6.0-5 (running version: 6.0-5/f8a710d7)
    pve-kernel-5.0: 6.0-6
    pve-kernel-helper: 6.0-6
    pve-kernel-4.15: 5.4-6
    pve-kernel-5.0.18-1-pve: 5.0.18-3
    pve-kernel-5.0.15-1-pve: 5.0.15-1
    pve-kernel-4.15.18-18-pve: 4.15.18-44
    pve-kernel-4.15.18-12-pve: 4.15.18-36
    ceph: 14.2.1-pve2
    ceph-fuse: 14.2.1-pve2
    corosync: 3.0.2-pve2
    criu: 3.11-3
    glusterfs-client: 5.5-3
    ksm-control-daemon: 1.3-1
    libjs-extjs: 6.0.1-10
    libknet1: 1.10-pve2
    libpve-access-control: 6.0-2
    libpve-apiclient-perl: 3.0-2
    libpve-common-perl: 6.0-3
    libpve-guest-common-perl: 3.0-1
    libpve-http-server-perl: 3.0-2
    libpve-storage-perl: 6.0-7
    libqb0: 1.0.5-1
    lvm2: 2.03.02-pve3
    lxc-pve: 3.1.0-63
    lxcfs: 3.0.3-pve60
    novnc-pve: 1.0.0-60
    openvswitch-switch: 2.10.0+2018.08.28+git.8ca7c82b7d+ds1-12
    proxmox-mini-journalreader: 1.1-1
    proxmox-widget-toolkit: 2.0-5
    pve-cluster: 6.0-5
    pve-container: 3.0-5
    pve-docs: 6.0-4
    pve-edk2-firmware: 2.20190614-1
    pve-firewall: 4.0-7
    pve-firmware: 3.0-2
    pve-ha-manager: 3.0-2
    pve-i18n: 2.0-2
    pve-qemu-kvm: 4.0.0-5
    pve-xtermjs: 3.13.2-1
    qemu-server: 6.0-7
    smartmontools: 7.0-pve2
    spiceterm: 3.1-1
    vncterm: 1.6-1
    zfsutils-linux: 0.8.1-pve1

Check the logs (especially for messages from corosync and pve-cluster/pmxcfs). If this does not lead to a solution, please open a new thread (with the logs attached or pasted in code tags). Thanks!

After upgrading to 6.0, most of the VMs started going into HA error status on different hosts every 2-3 days.
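
For the log check suggested above, one option is to read the corosync and pve-cluster units from the journal of the current boot and keep only the events that usually matter when nodes drop out. A minimal sketch; the function name `cluster_events` is hypothetical, and the grep patterns are assumptions about typical corosync 3/knet wording (link down, new membership, quorum changes), not an exhaustive list:

```shell
# Hypothetical helper: keep only link-down, membership and quorum lines.
# The patterns below are assumptions about corosync 3 / knet log wording.
cluster_events() {
  grep -Ei 'link: .* is down|new membership|quorum'
}

# Usage on a live node (not executed here):
#   journalctl -b -u corosync -u pve-cluster | cluster_events
```

Comparing the timestamps of these lines across nodes usually shows whether the drop-outs line up with network link loss.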

Please create a new thread. Thanks!

Hi, I have a problem with the installation: at the end of the install, I got an error.

Does anyone have an idea?

Sorry for my English.

Thanks

Try running the installer in debug mode and post the output from the last debug shell.

Hi!

Today I upgraded to PVE6 on a 4-node cluster. The update went smoothly!

Ceph updated to Nautilus without problems too!

Thanks for the great work!

Best regards

Gosha

We need to update xx nodes, so that will take a long time (currently running Debian, Proxmox 5.4, and Corosync 2 with Ceph).

I was thinking of updating Corosync to v3 on all nodes first (at the same time); this will keep everything running, right? Then start the Proxmox update per node, including Ceph. Updating Ceph feels a bit dangerous.

It's not a problem if we are down at night for, let's say, an hour.

Is this the best approach? What do you recommend when we are talking about a large number of nodes?

Thanks in advance!

> I was thinking to update Corosync to v3 on all nodes first (at the same time), this will keep everything running right?

Yes, if the network backing corosync is stable and not loaded too much, which should be the case, as otherwise the current setup would already show issues. But still, test it first, e.g., in a (virtual) test setup.
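
Before starting the per-node Proxmox VE upgrade, it helps to confirm that every node really runs Corosync 3. A minimal sketch that reads `pveversion -v` output (lines like `corosync: 3.0.2-pve2`, as in the package list earlier in this thread) and prints the Corosync major version; the function name `corosync_major` is hypothetical:

```shell
# Hypothetical helper: print the corosync major version from
# `pveversion -v` output supplied on stdin.
corosync_major() {
  # Split "corosync: 3.0.2-pve2" at ": ", then take the part before the first ".".
  awk -F': ' '$1 == "corosync" { split($2, v, "."); print v[1] }'
}

# Usage on each node (not executed here):
#   pveversion -v | corosync_major   # expect 3 before upgrading to PVE 6
```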

> Then start the Proxmox update per node incl Ceph, updating Ceph feels a bit dangerous.

Do not update Proxmox VE and Ceph in one go. First update Proxmox VE, and only once PVE has been updated to 6.0 across the cluster, all nodes have been restarted, and everything has been healthy for a while, do the upgrade from Ceph Luminous to Ceph Nautilus.
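
A simple gate for "PVE first, Ceph later" is to collect one `pveversion` line per node and only start the Ceph Luminous to Nautilus upgrade once all of them report pve-manager 6.x. A minimal sketch, assuming the line format `pve-manager/6.0-5/f8a710d7 (running kernel: ...)`; the function name `all_nodes_on_pve6` and the node names in the usage comment are hypothetical:

```shell
# Hypothetical helper: succeed only if every non-empty input line reports
# pve-manager major version 6 (input: one `pveversion` line per node).
all_nodes_on_pve6() {
  awk -F'/' '
    NF == 0 { next }                  # ignore blank lines
    {
      split($2, v, "-")               # "6.0-5" -> "6.0"
      split(v[1], m, ".")             # "6.0"   -> "6"
      if (m[1] != 6) bad = 1
      n++
    }
    END { exit (n == 0 || bad) }'     # fail on no input or any non-6 node
}

# Usage (not executed here; node names are placeholders):
#   for n in node1 node2 node3; do ssh root@"$n" pveversion; done | all_nodes_on_pve6 \
#     && echo "all nodes on PVE 6"
```

This only checks versions; node health and a restart of every node still have to be verified separately, as described above.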

Just be sure to follow our Upgrade Guide: https://pve.proxmox.com/wiki/Upgrade_from_5.x_to_6.0#In-place_upgrade

> Is this the best way to follow? What do you recommend to do if we are talking about a large number of nodes.

Do not skip steps or change the order if you're not 100% sure.

There's not much to change for big clusters. Just be sure to go slowly, one node at a time, and first do a test upgrade on a test machine or a virtual PVE cluster to get a feel for it.

Hi Proxmox,

I just want to say THANKS TO YOU for the new 6.0 release!

Yesterday I successfully upgraded a 5.4 cluster (3 nodes) to 6.0-7.

I followed the upgrade instructions closely and had ZERO issues. That cluster has gone through every upgrade from 5.0 to 6.0 and never went down because of an upgrade.

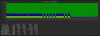

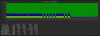

We are using local zfs replication and were suffering from the missing TRIM function.

After upgrading to 6.0, I issued "zpool upgrade <poolname>" and "zpool trim <poolname>".

Hopefully the attached screenshots come through; in any case, IOwait time dropped to almost zero. The whole cluster is benefiting a lot from the upgrade.
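
The two commands from this post can be wrapped with a dry-run switch so the sequence can be reviewed before it touches a pool. A minimal sketch; the helper names `run` and `upgrade_and_trim` and the `DRY_RUN` variable are hypothetical. One caveat worth knowing: `zpool upgrade` enables all new feature flags, after which older ZFS versions (for example a node still on PVE 5.4) can no longer import that pool.

```shell
# Hypothetical wrapper: with DRY_RUN=1 only print the command, else run it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Hypothetical helper: upgrade the pool's feature flags, start a manual
# TRIM, then show per-device trim progress.
upgrade_and_trim() {
  local pool="$1"
  run zpool upgrade "$pool"       # irreversible: enables new feature flags
  run zpool trim "$pool"          # starts a background TRIM of free space
  run zpool status -t "$pool"     # -t adds per-device trim status
}

# Usage (not executed here):
#   DRY_RUN=1; upgrade_and_trim <poolname>   # review the commands first
```

ZFS 0.8 also offers the `autotrim` pool property (`zpool set autotrim=on <poolname>`) as an alternative to running `zpool trim` by hand.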

Best regards,

Marco

Just curious: I have a Proxmox 5.4 host that is not yet in a cluster. The idea is to upgrade it to 6.0, because I cannot get ZFS to boot, I'm guessing because it runs an HP Smart Array P440ar in HBA mode (even with UEFI disabled), and I saw that on 6.0 it boots up with UEFI. My question is: how stable is it? I'm going to try it out this week; if it works, how stable or recommended is it to combine it in a cluster with 5.4 on the other hosts?

My experience with the upgrade was very smooth, the current 6.0 (6.0-7) is very stable on our cluster. A "lazy sysadmin" can freeze it and forget it for a while.

In the first step of my upgrade, only one node was running 6.0. I did a brief test of what was working in the 5.4 web UI and had no particular problems. I could still stop/start VMs (even the machines on the 6.0 node) and replicate VMs. Migrating is also supported from a lower version to a higher one; the other way around should work, but it's not supported.

It goes without saying, however, that you should upgrade all the cluster nodes as soon as you can. There's no need to have different configurations and kernels on the cluster nodes.

It also goes without saying that you have to stick closely to the upgrade procedure, which is not difficult, but missing steps would lead to issues, I guess.

Regards,

Marco

Thanks for the reply. So the upgrade procedure would be something like this?

add this to /etc/apt/sources.list (e.g. with nano):

deb http://download.proxmox.com/debian/pve stretch pve-no-subscription

then run

apt update

apt upgrade

apt clean && apt autoclean

then reboot

The upgrade procedure is explained in quite some detail on our wiki https://pve.proxmox.com/wiki/Upgrade_from_5.x_to_6.0

> deb http://download.proxmox.com/debian/pve stretch pve-no-subscription

PVE 6 is based on Debian Buster, not Stretch!
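
Since the repository line has to name buster, the release name in the APT sources has to change as well. A minimal sketch that rewrites `stretch` to `buster` in one sources file and keeps a `.bak` copy; the function name `switch_to_buster` is hypothetical, and it only covers the one file it is given, so files under `/etc/apt/sources.list.d/` need the same treatment. The wiki guide linked above remains the authoritative sequence.

```shell
# Hypothetical helper: replace the Debian release name "stretch" with
# "buster" in an APT sources file, keeping a .bak backup next to it.
switch_to_buster() {
  local file="${1:-/etc/apt/sources.list}"
  sed -i.bak 's/stretch/buster/g' "$file"
}

# Usage (not executed here):
#   switch_to_buster /etc/apt/sources.list
#   apt update && apt dist-upgrade
```

`apt dist-upgrade` is used here instead of plain `apt upgrade` because a major release upgrade pulls in new dependencies and removes obsolete packages, which `apt upgrade` will not do.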