Thanks to your testing feedback and the nature of the fix, which is quite targeted and contained, with a basically non-existent regression potential, we decided to fast track that fix. It should be available on all repos now.

Proxmox update from 7.2-4 GRUB update failure

- Thread starter kocherjj

> Can you explain to me how to patch the file /usr/sbin/grub-install?
>
> If it is easy, I will do it directly.

It's not hard, check out the patch: https://git.proxmox.com/?p=pve-kern...ff;h=18a8d30651498aaf64d3a8aee1d759fb9a9147a3

You basically need to source a specific helper file, which is done with the dot command, so edit the file and add the following line below the set -e command, almost at the top:

. /usr/share/pve-kernel-helper/scripts/functions

In my case I just did three things.

1. add repo into /etc/apt/sources.list: deb http://download.proxmox.com/debian/pve bullseye pvetest

2. apt update && apt install pve-kernel-helper

3. grub-install.real /dev/sdX (replace sdX with your device where you have grub. in my case it was "grub-install.real /dev/sdq")

then reboot.

If I nano this:It's not hard, check out the patch: https://git.proxmox.com/?p=pve-kern...ff;h=18a8d30651498aaf64d3a8aee1d759fb9a9147a3

You basically need to source a specific helper file, which is done with the dot command, so edit the file and add the following line bewlo theset -ecommand, almost at the top:

. /usr/share/pve-kernel-helper/scripts/functions

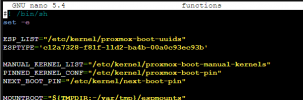

/usr/share/pve-kernel-helper/scripts/functions I see this:

Is this the correct place to edit and add this line?

. /usr/share/pve-kernel-helper/scripts/functionsafter that, what to do?

Many many thanks for you quick responses !!!

Last edited:

I don't think that's the right file. I looked into the git patch linked above, and that patch is for a file called grub-install-wrapper, which should be part of pve-kernel-meta. I don't know exactly where it should be. Maybe someone else can tell you more.

Maybe somewhere in /tmp, but I don't know.

OK, all good here now.

Steps I did

nano /usr/sbin/grub-install

changed

Code:

set -e

ESP_LIST="/etc/kernel/proxmox-boot-uuids"

...

...

to

Code:

set -e

. /usr/share/pve-kernel-helper/scripts/functions

ESP_LIST="/etc/kernel/proxmox-boot-uuids"

...

...

However, this did not seem to do anything, so I selected "Continue without installing GRUB?" <YES>

After that, the update process continued and ended (seemingly normal).

For reference, this was the output of the original update (with loop in grub2 update)

CLI update text 1: Link

Directly after it finished I refreshed the updates, saw two more updates and ran those.

CLI update text 2: Link

Those two updates also ended normally.

Reboot PVE

Came back up normally. My ZFS volumes are as normal.
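The manual edit shown in this post could be scripted roughly as follows. This is only a sketch under stated assumptions: the function name `add_helper_source` is hypothetical (not part of any Proxmox package), and it assumes the wrapper contains a line that is exactly `set -e`. With the fixed pve-kernel-helper package installed, none of this should be necessary.

```shell
# Hypothetical helper: insert the "source the helper functions" line
# right after the first "set -e" line of the given file.
# Does nothing if the line is already present.
add_helper_source() {
    file=$1
    line='. /usr/share/pve-kernel-helper/scripts/functions'

    # Already patched: leave the file untouched.
    if grep -qxF -- "$line" "$file"; then
        return 0
    fi

    tmp=$(mktemp) || return 1
    # Copy every line; emit the helper line once, after the first "set -e".
    if awk -v add="$line" '
        { print }
        !done && $0 == "set -e" { print add; done = 1 }
    ' "$file" > "$tmp"; then
        # Write back through cat so the file keeps its mode and owner.
        cat "$tmp" > "$file"
        status=$?
    else
        status=1
    fi
    rm -f "$tmp"
    return "$status"
}
```

It would be called as `add_helper_source /usr/sbin/grub-install`, ideally after making a copy of the original file.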

When the kernel-helper update wasn't available yesterday, I upgraded and chose "update anyway" as grub-install complained that it couldn't use my ZFS rpool. After that I manually ran

Code:

grub-install.real /dev/sda

grub-install.real /dev/sdb

so both of my pool disks were covered.

I did not reboot afterwards.

Today I installed the pve-kernel-helper update and rebooted afterwards.

Worked for me!

Had the same problem on my Proxmox backup Server, worked! Thanks for the fast solution

Reboot, no problems so far.

FYI: this problem is not only on the PVE side. During last night's maintenance it hit (semi-randomly) about 5% of VMs.

This particular issue was present on Proxmox systems (theoretically PVE, PBS, PMG, or for that matter any system that has pve-kernel-helper installed, could be affected). If you ran into issues during an `apt dist-upgrade` on plain Debian/Ubuntu after the weekend, I think it should not be related to this issue (if you post some logs of the issue we can maybe tell more).

To add an extra data point:

Just upgraded a physical pve server, installed from 6.4, no manual adjustments to proxmox-boot-tool, booted with legacy bios.

It's using pve-no-subscription repo, the upgrade included pve-kernel-helper: 7.2-12.

No issues, upgrade went fine. Thanks for the quick response.

> Thanks to your testing feedback and the nature of the fix, which is quite targeted and contained, with a basically non-existent regression potential, we decided to fast track that fix. It should be available on all repos now.

Hello, same problem here.

Sorry, I'm not very experienced, so let me try to summarize:

If I understood your post correctly, it is no longer necessary to add the test repo, and I can stay on the "pve no subscription" repo now?

So at the GRUB error I can select "YES" and, before rebooting, run the command "grub-install.real /dev/sdXXXXX", correct?

Code:

root@S1-web:~# lsblk -o NAME,MOUNTPOINT
NAME            MOUNTPOINT
nvme1n1
├─nvme1n1p1     /boot/efi
├─nvme1n1p2
│ └─md2         /boot
├─nvme1n1p3
│ └─md3         /
├─nvme1n1p4     [SWAP]
└─nvme1n1p5
  └─md5
    └─vg-data   /var/lib/vz
nvme0n1
├─nvme0n1p1
├─nvme0n1p2
│ └─md2         /boot
├─nvme0n1p3
│ └─md3         /
├─nvme0n1p4     [SWAP]
├─nvme0n1p5
│ └─md5
│   └─vg-data   /var/lib/vz
└─nvme0n1p6

In my case I have a RAID. Which of these is correct?

Code:

grub-install.real /dev/nvme1n1

Code:

grub-install.real /dev/nvme1n1p1

Code:

grub-install.real /dev/md2

Thank you.

> so if I understood correctly from your post it is no longer necessary to insert the test repo, can you keep it with the repo "pve no subscription" now?

Yes, the fix is even in pve-enterprise by now.

> So at the grub error you can click on "YES" and before rebooting I give the command "grub-install.real /dev/sdXXXXX" correct?

Should work. Sadly it's been quite a long while since I had to deal with mdraid (which Proxmox VE does not really support), but from what I can remember you want to run grub-install.real on /dev/nvme1n1 and /dev/nvme0n1 (the two block devices) and that should be all. It shouldn't hurt to also run it on /dev/md2, though; usually grub-install will tell you that it can't install itself there.

As always, make sure you have a working and tested backup before those operations!

Should you run into any issues, you can boot a Linux live CD (or the pve-installer in debug mode, switching to the second debug shell) and fix the boot-loader installation. Google for the necessary commands, but https://pve.proxmox.com/wiki/Recover_From_Grub_Failure should be a good start (you will need to adapt it to your NVMe drives instead of /dev/sda...).

I hope this helps!
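As a rough illustration of "run it on the whole block devices, not on partitions", the sketch below turns `lsblk` output into a list of candidate commands. It only prints them and never runs grub-install. The function name `print_grub_commands` is made up for this example, and it assumes every device of type `disk` is a boot disk, which may not hold on your system (ZFS zvols, for instance, also show up as `disk`), so review the list before running anything.

```shell
# Hypothetical helper: read "NAME TYPE" pairs on stdin, as printed by
#   lsblk -rno NAME,TYPE
# and print one grub-install.real command per whole disk.
# Partitions, md arrays and LVM volumes are skipped.
print_grub_commands() {
    awk '$2 == "disk" { print "grub-install.real /dev/" $1 }' | sort -u
}
```

Usage would be `lsblk -rno NAME,TYPE | print_grub_commands`; for the layout posted above this should list /dev/nvme0n1 and /dev/nvme1n1.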

Hello, I had the same problem.

I pressed 'YES' and after the update I entered the commands:

Code:

root@pve:~# grub-install.real /dev/sda

Installing for i386-pc platform.

Installation finished. No error reported.

root@pve:~# grub-install.real /dev/sdb

Installing for i386-pc platform.

Installation finished. No error reported.

However, there was still an error before the end of the update:

Code:

update-initramfs: Generating /boot/initrd.img-5.15.60-2-pve

Running hook script 'zz-proxmox-boot'..

Re-executing '/etc/kernel/postinst.d/zz-proxmox-boot' in new private mount namespace..

Copying and configuring kernels on /dev/disk/by-uuid/85F8-7F41

Copying kernel 5.13.19-2-pve

Copying kernel 5.13.19-6-pve

Copying kernel 5.15.35-3-pve

cp: error writing '/var/tmp/espmounts/85F8-7F41/initrd.img-5.15.35-3-pve': No space left on device

run-parts: /etc/initramfs/post-update.d//proxmox-boot-sync exited with return code 1

dpkg: error processing package initramfs-tools (--configure):

installed initramfs-tools package post-installation script subprocess returned error exit status 1

Errors were encountered while processing:

pve-kernel-5.15.60-2-pve

pve-kernel-5.15

pve-kernel-5.15.35-3-pve

initramfs-tools

E: Sub-process /usr/bin/dpkg returned an error code (1)

Your System is up-to-date

But why is there no space for /var/tmp/espmounts/85F8-7F41/initrd.img-5.15.35-3-pve?

Code:

root@pve:~# df -h

Filesystem Size Used Avail Use% Mounted on

udev 32G 0 32G 0% /dev

tmpfs 6.3G 1.6M 6.3G 1% /run

rpool/ROOT/pve-1 2.1T 275G 1.9T 13% /

tmpfs 32G 49M 32G 1% /dev/shm

tmpfs 5.0M 0 5.0M 0% /run/lock

rpool 1.9T 128K 1.9T 1% /rpool

rpool/data 1.9T 128K 1.9T 1% /rpool/data

rpool/ROOT 1.9T 128K 1.9T 1% /rpool/ROOT

rpool/data/subvol-101-disk-0 600G 62G 539G 11% /rpool/data/subvol-101-disk-0

rpool/share 6.4T 4.6T 1.9T 72% /rpool/share

nvmepool 826G 128K 826G 1% /nvmepool

nvmepool/subvol-102-disk-1 60G 36G 25G 59% /nvmepool/subvol-102-disk-1

nvmepool/subvol-103-disk-0 20G 235M 20G 2% /nvmepool/subvol-103-disk-0

nvmepool/subvol-105-disk-0 1.0G 182M 843M 18% /nvmepool/subvol-105-disk-0

nvmepool/subvol-102-disk-0 2.0G 46M 2.0G 3% /nvmepool/subvol-102-disk-0

nvmepool/subvol-101-disk-0 2.0G 112M 1.9G 6% /nvmepool/subvol-101-disk-0

nvmepool/subvol-300-disk-0 16G 3.6G 13G 23% /nvmepool/subvol-300-disk-0

nvmepool/subvol-102-disk-2 30G 128K 30G 1% /nvmepool/subvol-102-disk-2

rpool/data/subvol-106-disk-0 1.0G 128M 897M 13% /rpool/data/subvol-106-disk-0

nvmepool/subvol-104-disk-0 2.0G 1.4G 646M 69% /nvmepool/subvol-104-disk-0

/dev/fuse 128M 40K 128M 1% /etc/pve

tmpfs 6.3G 0 6.3G 0% /run/user/0

Now I don't trust any reboot. This is a Hetzner PX server where there is no access to a boot screen. I can only boot into the network rescue system.

How can I check that the GRUB installation is correct and the server will reboot without errors?

The disk config:

Best regards,

Peter

> However, there was still an error before the end of the update:

Seems you have too many kernels on your ESPs.

try running:

* `apt autoremove`

* if this does not free up enough space - try removing a few pve-kernel packages manually (`pveversion -v` should give you a list)

if you still run into errors - post them here - along with the output of `proxmox-boot-tool kernel list`
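To pick candidates for manual removal, the `pveversion -v` output can be filtered as in the sketch below. The function name `list_removable_kernels` is hypothetical, and the only rule it applies is "not the running kernel". It does not know which kernel is the newest or which one you want to boot next, so treat its output as a list to review, not as input for `apt purge`.

```shell
# Hypothetical helper: read `pveversion -v` output on stdin and print the
# versioned kernel packages (pve-kernel-<version>-pve) other than the
# running one, whose version is passed as the first argument.
list_removable_kernels() {
    running=$1
    awk -F': ' -v keep="pve-kernel-$running" '
        # Meta packages such as pve-kernel-5.13 or pve-kernel-helper
        # do not end in "-pve" and are ignored here.
        $1 ~ /^pve-kernel-[0-9].*-pve$/ && $1 != keep { print $1 }
    '
}
```

A typical call would be `pveversion -v | list_removable_kernels "$(uname -r)"`.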

The first apt autoremove wanted to remove this, but the error remains:

Code:

root@pve:~# apt autoremove

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

The following packages will be REMOVED:

pve-kernel-5.13.19-3-pve pve-kernel-5.15.35-3-pve pve-kernel-5.4 pve-kernel-5.4.157-1-pve

0 upgraded, 0 newly installed, 4 to remove and 0 not upgraded.

4 not fully installed or removed.

After this operation, 998 MB disk space will be freed.

Do you want to continue? [Y/n] y

(Reading database ... 115254 files and directories currently installed.)

Removing pve-kernel-5.15.35-3-pve (5.15.35-6) ...

Examining /etc/kernel/postrm.d.

run-parts: executing /etc/kernel/postrm.d/initramfs-tools 5.15.35-3-pve /boot/vmlinuz-5.15.35-3-pve

update-initramfs: Deleting /boot/initrd.img-5.15.35-3-pve

run-parts: executing /etc/kernel/postrm.d/proxmox-auto-removal 5.15.35-3-pve /boot/vmlinuz-5.15.35-3-pve

run-parts: executing /etc/kernel/postrm.d/zz-proxmox-boot 5.15.35-3-pve /boot/vmlinuz-5.15.35-3-pve

Re-executing '/etc/kernel/postrm.d/zz-proxmox-boot' in new private mount namespace..

Copying and configuring kernels on /dev/disk/by-uuid/85F8-7F41

Copying kernel 5.13.19-2-pve

Copying kernel 5.13.19-6-pve

Copying kernel 5.15.35-2-pve

Copying kernel 5.15.60-2-pve

cp: error writing '/var/tmp/espmounts/85F8-7F41/vmlinuz-5.15.60-2-pve': No space left on device

run-parts: /etc/kernel/postrm.d/zz-proxmox-boot exited with return code 1

Failed to process /etc/kernel/postrm.d at /var/lib/dpkg/info/pve-kernel-5.15.35-3-pve.postrm line 14.

dpkg: error processing package pve-kernel-5.15.35-3-pve (--remove):

installed pve-kernel-5.15.35-3-pve package post-removal script subprocess returned error exit status 1

dpkg: too many errors, stopping

Errors were encountered while processing:

pve-kernel-5.15.35-3-pve

Processing was halted because there were too many errors.

E: Sub-process /usr/bin/dpkg returned an error code (1)

Code:

root@pve:~# apt autoremove

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

The following packages will be REMOVED:

pve-kernel-5.15.35-3-pve

0 upgraded, 0 newly installed, 1 to remove and 0 not upgraded.

4 not fully installed or removed.

After this operation, 379 MB disk space will be freed.

Do you want to continue? [Y/n] y

(Reading database ... 108033 files and directories currently installed.)

Removing pve-kernel-5.15.35-3-pve (5.15.35-6) ...

Examining /etc/kernel/postrm.d.

run-parts: executing /etc/kernel/postrm.d/initramfs-tools 5.15.35-3-pve /boot/vmlinuz-5.15.35-3-pve

update-initramfs: Deleting /boot/initrd.img-5.15.35-3-pve

run-parts: executing /etc/kernel/postrm.d/proxmox-auto-removal 5.15.35-3-pve /boot/vmlinuz-5.15.35-3-pve

run-parts: executing /etc/kernel/postrm.d/zz-proxmox-boot 5.15.35-3-pve /boot/vmlinuz-5.15.35-3-pve

Re-executing '/etc/kernel/postrm.d/zz-proxmox-boot' in new private mount namespace..

Copying and configuring kernels on /dev/disk/by-uuid/85F8-7F41

Copying kernel 5.13.19-2-pve

Copying kernel 5.13.19-6-pve

Copying kernel 5.15.35-2-pve

Copying kernel 5.15.60-2-pve

cp: error writing '/var/tmp/espmounts/85F8-7F41/vmlinuz-5.15.60-2-pve': No space left on device

run-parts: /etc/kernel/postrm.d/zz-proxmox-boot exited with return code 1

Failed to process /etc/kernel/postrm.d at /var/lib/dpkg/info/pve-kernel-5.15.35-3-pve.postrm line 14.

dpkg: error processing package pve-kernel-5.15.35-3-pve (--remove):

installed pve-kernel-5.15.35-3-pve package post-removal script subprocess returned error exit status 1

dpkg: too many errors, stopping

Errors were encountered while processing:

pve-kernel-5.15.35-3-pve

Processing was halted because there were too many errors.

E: Sub-process /usr/bin/dpkg returned an error code (1)

Code:

root@pve:~# pveversion -v

proxmox-ve: 7.2-1 (running kernel: 5.13.19-2-pve)

pve-manager: 7.2-11 (running version: 7.2-11/b76d3178)

pve-kernel-helper: 7.2-13

pve-kernel-5.13: 7.1-9

pve-kernel-5.4: 6.4-11

pve-kernel-5.0: 6.0-11

pve-kernel-5.15.35-2-pve: 5.15.35-5

pve-kernel-5.15.35-1-pve: 5.15.35-3

pve-kernel-5.13.19-6-pve: 5.13.19-15

pve-kernel-5.13.19-3-pve: 5.13.19-7

pve-kernel-5.13.19-2-pve: 5.13.19-4

pve-kernel-5.4.157-1-pve: 5.4.157-1

pve-kernel-5.0.21-5-pve: 5.0.21-10

pve-kernel-5.0.15-1-pve: 5.0.15-1

...

The active kernel is:

root@pve:~# uname -r

5.13.19-2-pve

Code:

root@pve:~# proxmox-boot-tool kernel list

Manually selected kernels:

None.

Automatically selected kernels:

5.13.19-2-pve

5.13.19-6-pve

5.15.35-2-pve

5.15.60-2-pve

Code:

apt purge pve-kernel-5.0.15-1-pve

apt purge pve-kernel-5.0

apt purge pve-kernel-5.4.157-1-pve

That's a bit odd, since 512M should really be enough for 4 kernels...

* what's the output of `proxmox-boot-tool status`?

I would suggest the following: mount each ESP listed in /etc/kernel/proxmox-boot-uuids somewhere and see where the disk space is used:

* `mount /dev/disk/by-uuid/<UUID> /mnt/tmp` (the <UUID> is listed in /etc/kernel/proxmox-boot-uuids)

* `du -smc /mnt/tmp/*`

then clean it up, or set the ESPs up again using `proxmox-boot-tool format` and `proxmox-boot-tool init`

see the reference-documentation:

https://pve.proxmox.com/pve-docs/chapter-sysadmin.html#sysboot

and the following wiki-page:

https://pve.proxmox.com/wiki/ZFS:_Switch_Legacy-Boot_to_Proxmox_Boot_Tool

I hope this helps!
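The mount-and-measure steps suggested above could be looped over all ESPs along these lines. This is a sketch, not a tested procedure: `run` and `check_esps` are names invented here, and dry-run mode is the default, so the commands are only printed unless you set `DRY_RUN=0`. It assumes the UUID file has one UUID per line and that the mount point already exists.

```shell
# Print the command in dry-run mode (the default), otherwise execute it.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Hypothetical helper: for every UUID in the given file, mount the ESP,
# show per-entry disk usage in MiB with a total, and unmount again.
check_esps() {
    uuid_file=$1
    mnt=$2
    while IFS= read -r uuid; do
        # Skip empty lines.
        [ -n "$uuid" ] || continue
        run mount "/dev/disk/by-uuid/$uuid" "$mnt"
        run du -smc "$mnt"/*
        run umount "$mnt"
    done < "$uuid_file"
}
```

For a real run as root, something like `mkdir -p /mnt/tmp && DRY_RUN=0 check_esps /etc/kernel/proxmox-boot-uuids /mnt/tmp` would be the intended call.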
