Hello everyone, this is my first attempt at setting up Proxmox with an Ubuntu server so I can build a media-streaming NAS for my home.

I've gotten fairly far, but I'm at a point where I don't know whether this is normal or an issue I have to deal with.

I downloaded the Ubuntu CT template `ubuntu-24.04-standard_24.04-2_amd64.tar.zst` and created an LXC container from it.

When I start the container, the login shows a "Failed to connect" error. This always happens after I mount the ZFS pool. On the first install no issue appears, and updates work (I tested them).

After I add the ZFS pool NAS drive and start the container again, I get this:

```
Welcome to Ubuntu 24.04 LTS (GNU/Linux 6.14.8-2-pve x86_64)

* Documentation: https://help.ubuntu.com
* Management: https://landscape.canonical.com
* Support: https://ubuntu.com/pro

Failed to connect to https://changelogs.ubuntu.com/meta-release-lts. Check your Internet connection or proxy settings
```

```
root@media-server:~# apt update
Ign:1 http://archive.ubuntu.com/ubuntu noble InRelease
Ign:2 http://archive.ubuntu.com/ubuntu noble-updates InRelease
Ign:3 http://archive.ubuntu.com/ubuntu noble-security InRelease
Ign:1 http://archive.ubuntu.com/ubuntu noble InRelease
Ign:2 http://archive.ubuntu.com/ubuntu noble-updates InRelease
Ign:3 http://archive.ubuntu.com/ubuntu noble-security InRelease
Ign:1 http://archive.ubuntu.com/ubuntu noble InRelease
Ign:2 http://archive.ubuntu.com/ubuntu noble-updates InRelease
Ign:3 http://archive.ubuntu.com/ubuntu noble-security InRelease
Err:1 http://archive.ubuntu.com/ubuntu noble InRelease
Temporary failure resolving 'archive.ubuntu.com'
Err:2 http://archive.ubuntu.com/ubuntu noble-updates InRelease
Temporary failure resolving 'archive.ubuntu.com'
Err:3 http://archive.ubuntu.com/ubuntu noble-security InRelease
Temporary failure resolving 'archive.ubuntu.com'
Reading package lists... Done
Building dependency tree... Done
All packages are up to date.
W: Failed to fetch http://archive.ubuntu.com/ubuntu/dists/noble/InRelease Temporary failure resolving 'archive.ubuntu.com'
W: Failed to fetch http://archive.ubuntu.com/ubuntu/dists/noble-updates/InRelease Temporary failure resolving 'archive.ubuntu.com'
W: Failed to fetch http://archive.ubuntu.com/ubuntu/dists/noble-security/InRelease Temporary failure resolving 'archive.ubuntu.com'
W: Some index files failed to download. They have been ignored, or old ones used instead.
```

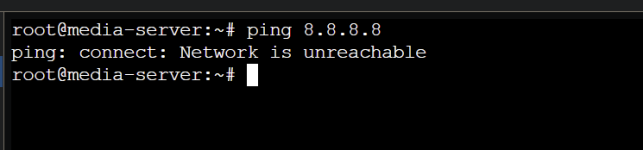

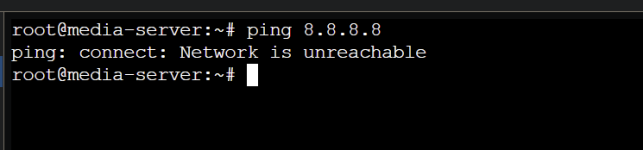

It's not only `apt update`: every network connection seems to be broken.

I know I'm doing something wrong, but I haven't been able to put my finger on it for a long time now.

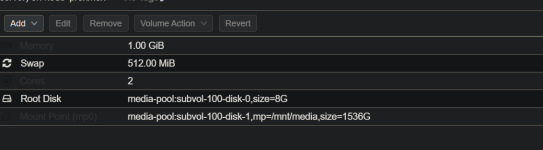

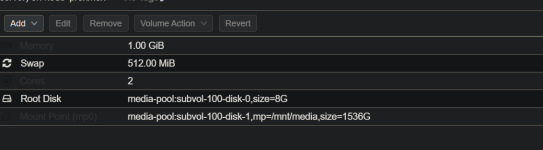

I tried adding the mount point from the Proxmox host shell with `pct set 100 -mp0 /media-pool/mnt/media,mp=/mnt/media`, and also creating it manually in the GUI, but both end the same way.
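For reference, `pct set` stores the mount point in the container's configuration on the host. Assuming container ID 100 as in the command above, the entry in `/etc/pve/lxc/100.conf` (also shown by `pct config 100`) should look roughly like this; the exact line may differ if the GUI added extra options:

```
mp0: /media-pool/mnt/media,mp=/mnt/media
```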

I tried different disk size / memory / core allocations in the main container setup, and mount point sizes of:

- 512
- 1024
- 1536

All of them end up in the same place.

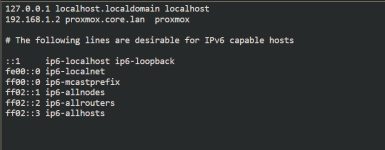

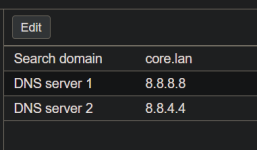

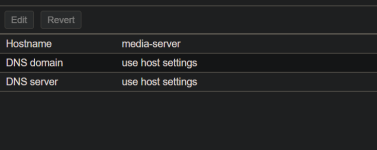

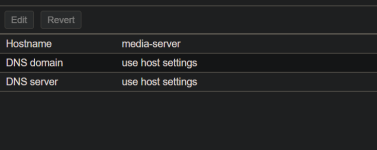

### DNS - Network Setup on LXC

Network IP: DHCP

The issue doesn't go away even if I detach or remove the newly added mount.

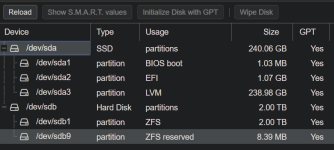

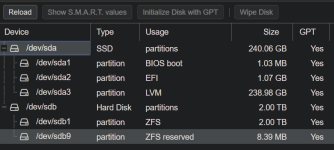
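Since every error above is "Temporary failure resolving", one way to narrow it down is to check from inside the container whether any nameserver is configured and whether a name resolves at all. The sketch below is a hypothetical helper (the function names `list_nameservers` and `can_resolve` are made up for this example and are not part of Proxmox or Ubuntu); it assumes a POSIX shell with `awk` and `getent`, which the standard Ubuntu template should provide.

```shell
#!/bin/sh
# Hypothetical DNS sanity check for the LXC container (not a Proxmox or Ubuntu tool).
# Source it inside the container, e.g. `. ./dns-check.sh`, then call the functions.

# Print every nameserver listed in a resolv.conf-style file.
# Returns 2 if the file is unreadable, 1 if it lists no nameserver.
list_nameservers() {
    conf="${1:-/etc/resolv.conf}"
    if [ ! -r "$conf" ]; then
        echo "cannot read $conf" >&2
        return 2
    fi
    # Field 1 is the keyword, field 2 the server address; comment lines never match.
    servers=$(awk '$1 == "nameserver" { print $2 }' "$conf")
    if [ -z "$servers" ]; then
        echo "no nameserver entries in $conf" >&2
        return 1
    fi
    printf '%s\n' "$servers"
}

# Succeed if the name resolves via the system resolver configuration (NSS).
can_resolve() {
    getent hosts "$1" >/dev/null 2>&1
}

# Example usage inside the container:
#   list_nameservers /etc/resolv.conf
#   can_resolve archive.ubuntu.com && echo "DNS ok" || echo "DNS lookup failed"
```

If no nameserver is listed, or `can_resolve archive.ubuntu.com` fails while pinging a raw IP such as `ping -c 1 1.1.1.1` (if `ping` is installed) succeeds, that would point to name resolution rather than the network link itself.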

## Setup Details

Proxmox is installed on a 250 GB SSD.

A 2 TB WD Red NAS drive was added, formatted, and turned into a ZFS pool.
