Hi, can someone help me with this strange issue?

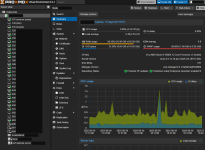

For some reason, RAM usage on my Proxmox host increases steadily while the system is up, until memory is completely full and things start to break, as expected. The strange thing is that the RAM allocated to the VMs (combined) does not exceed the server's total physical memory (64 GB). The last time the server ran out of memory (>99% in use, swap full), I calculated that the combined actual RAM usage of the VMs was only around 40 GB, so I wonder what caching (or other) activity Proxmox performs in the background that uses the remaining 24 GB. I am not using ballooning for any of the VMs, in case that matters.
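To make the gap concrete, here is a rough Python sketch of the arithmetic, run against the host's `/proc/meminfo`. The function names (`parse_meminfo`, `summarize`) and the 40 GB VM figure passed in are placeholders of mine, and the "used" formula is a simplification (for example, `Cached` also counts shared memory that cannot be dropped), so treat the result as an estimate only.

```python
from pathlib import Path

KIB_PER_GIB = 1024 * 1024


def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a {field: value_in_kiB} dict."""
    info = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep:
            continue  # skip lines without a "Field: value" shape
        parts = rest.split()
        if parts and parts[0].isdigit():
            info[name.strip()] = int(parts[0])
    return info


def summarize(info, vm_used_kib):
    """Estimate how much used memory is not explained by the VMs.

    vm_used_kib is an assumption supplied by the caller, e.g. the sum
    of the actual memory usage reported inside each VM.
    """
    # Memory the kernel can usually give back under pressure (approximate).
    reclaimable = (
        info.get("Buffers", 0)
        + info.get("Cached", 0)
        + info.get("SReclaimable", 0)
    )
    used = info["MemTotal"] - info["MemFree"] - reclaimable
    return {
        "used_kib": used,
        "reclaimable_kib": reclaimable,
        "unexplained_kib": used - vm_used_kib,
    }


if __name__ == "__main__":
    meminfo_path = Path("/proc/meminfo")
    if meminfo_path.exists():
        host = parse_meminfo(meminfo_path.read_text())
        # 40 GiB is the rough combined VM usage mentioned above (assumption).
        result = summarize(host, vm_used_kib=40 * KIB_PER_GIB)
        for key, value in result.items():
            print(f"{key}: {value / KIB_PER_GIB:.1f} GiB")
```

If `unexplained_kib` stays large while `reclaimable_kib` is small, the missing memory is probably not ordinary page cache, which would narrow down where to look next.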

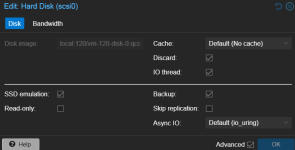

I have read that storage caching can cause this, but since I am not using ZFS, that should not be the cause, right? The VMs use qcow2 as their disk format (I am not sure whether that has any effect).

More information about the system in the screenshot.

The server is a rented dedicated server from OVH, and it came with Proxmox preinstalled and 2 GiB of swap. I am not sure why, because my understanding is that Proxmox should work better with no swap at all. I was thinking the issue could probably be fixed by increasing swap to 64 GB, but that would be bad for performance... right?

As a workaround, I restart the server once a month, which is fine since some updates require a reboot anyway, but I would like to understand what is fundamentally causing this.

Any ideas?
