Hi,

The autoscaler increased the number of PGs on our Ceph storage (hardware like this, but 5 nodes).

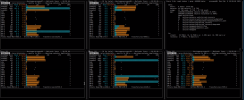

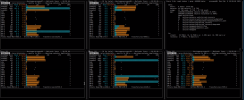

As soon as the backfill starts, the VMs become unusable, so we started killing the OSD processes that cause the high read I/O load. In the situation shown in this picture, we would kill the ceph-osd process working on dm-3 on proxmox07 and the two on proxmox10. As soon as we killed those 3 OSDs, Ceph would go into recovery and the VMs would become usable again.

How can we throttle backfilling?

We tried the settings listed below, but they do not prevent the NVMe drives from going to 100% read I/O utilization.

Code:

osd_backfill_scan_max 16 mon

osd_backfill_scan_min 4 mon

osd_client_op_priority 63 mon

osd_max_backfills 1 mon

osd_recovery_op_priority 1 mon

osd_recovery_sleep 3.000000 mon
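For reference, here is a minimal sketch of how settings like these could be written to the monitor config database. It only builds and prints `ceph config set` commands as a dry run and does not execute anything. The helper name `build_config_commands` and the `who` target of `osd` are assumptions made for illustration, and the trailing `mon` in the list above is assumed to be a source column rather than part of each value.

```python
import shlex

# Values copied from the list above. The trailing "mon" is assumed to be
# the source column of the config listing, so it is left out here.
BACKFILL_SETTINGS = {
    "osd_backfill_scan_max": "16",
    "osd_backfill_scan_min": "4",
    "osd_client_op_priority": "63",
    "osd_max_backfills": "1",
    "osd_recovery_op_priority": "1",
    "osd_recovery_sleep": "3.000000",
}


def build_config_commands(settings, who="osd"):
    """Return one `ceph config set <who> <option> <value>` command per setting.

    `who` is the config target (hypothetical default: all OSDs).
    """
    return [
        shlex.join(["ceph", "config", "set", who, name, value])
        for name, value in settings.items()
    ]


if __name__ == "__main__":
    # Dry run: print the commands instead of running them on the cluster.
    for command in build_config_commands(BACKFILL_SETTINGS):
        print(command)
```

Printing the commands first makes it easy to review them, or to narrow `who` to a single daemon such as `osd.0`, before anything is changed on the cluster.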
