We are running Proxmox as a 3-node cluster.

After a power outage, node1 no longer shows up on node2 and node3, and node2 and node3 no longer show up on node1.

At first I thought it was a time sync issue, but the clocks on all three nodes are in sync.

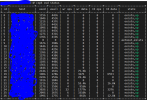

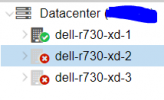

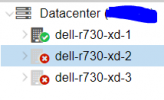

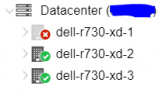

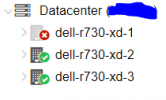

After logging into 1.1.1.1:

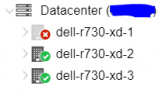

After logging into 1.1.1.2:

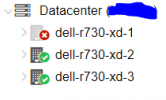

After logging into 1.1.1.3:

This is the output of systemctl status pve-cluster corosync -l on node1:

# systemctl status pve-cluster corosync -l

● pve-cluster.service - The Proxmox VE cluster filesystem

Loaded: loaded (/lib/systemd/system/pve-cluster.service; enabled; vendor preset: enabled)

Active: active (running) since Mon 2021-01-11 18:05:00 IST; 26min ago

Process: 2522 ExecStart=/usr/bin/pmxcfs (code=exited, status=0/SUCCESS)

Main PID: 2533 (pmxcfs)

Tasks: 12 (limit: 12287)

Memory: 59.8M

CGroup: /system.slice/pve-cluster.service

└─2533 /usr/bin/pmxcfs

Jan 11 18:04:59 dell-r730-xd-1 pmxcfs[2533]: [dcdb] crit: cpg_initialize failed: 2

Jan 11 18:04:59 dell-r730-xd-1 pmxcfs[2533]: [dcdb] crit: can't initialize service

Jan 11 18:04:59 dell-r730-xd-1 pmxcfs[2533]: [status] crit: cpg_initialize failed: 2

Jan 11 18:04:59 dell-r730-xd-1 pmxcfs[2533]: [status] crit: can't initialize service

Jan 11 18:05:00 dell-r730-xd-1 systemd[1]: Started The Proxmox VE cluster filesystem.

Jan 11 18:05:05 dell-r730-xd-1 pmxcfs[2533]: [status] notice: update cluster info (cluster name ####, version = 3)

Jan 11 18:05:05 dell-r730-xd-1 pmxcfs[2533]: [dcdb] notice: members: 1/2533

Jan 11 18:05:05 dell-r730-xd-1 pmxcfs[2533]: [dcdb] notice: all data is up to date

Jan 11 18:05:05 dell-r730-xd-1 pmxcfs[2533]: [status] notice: members: 1/2533

Jan 11 18:05:05 dell-r730-xd-1 pmxcfs[2533]: [status] notice: all data is up to date

● corosync.service - Corosync Cluster Engine

Loaded: loaded (/lib/systemd/system/corosync.service; enabled; vendor preset: enabled)

Active: active (running) since Mon 2021-01-11 18:05:00 IST; 26min ago

Docs: man:corosync

man:corosync.conf

man:corosync_overview

Main PID: 2645 (corosync)

Tasks: 9 (limit: 12287)

Memory: 144.7M

CGroup: /system.slice/corosync.service

└─2645 /usr/sbin/corosync -f

Jan 11 18:05:00 dell-r730-xd-1 corosync[2645]: [KNET ] host: host: 3 (passive) best link: 0 (pri: 1)

Jan 11 18:05:00 dell-r730-xd-1 corosync[2645]: [KNET ] host: host: 3 has no active links

Jan 11 18:05:00 dell-r730-xd-1 corosync[2645]: [KNET ] host: host: 3 (passive) best link: 0 (pri: 1)

Jan 11 18:05:00 dell-r730-xd-1 corosync[2645]: [KNET ] host: host: 3 has no active links

Jan 11 18:05:00 dell-r730-xd-1 corosync[2645]: [KNET ] host: host: 1 (passive) best link: 0 (pri: 0)

Jan 11 18:05:00 dell-r730-xd-1 corosync[2645]: [KNET ] host: host: 1 has no active links

Jan 11 18:05:00 dell-r730-xd-1 corosync[2645]: [TOTEM ] A new membership (1.128) was formed. Members joined: 1

Jan 11 18:05:00 dell-r730-xd-1 corosync[2645]: [QUORUM] Members[1]: 1

Jan 11 18:05:00 dell-r730-xd-1 corosync[2645]: [MAIN ] Completed service synchronization, ready to provide service.

Jan 11 18:05:00 dell-r730-xd-1 systemd[1]: Started Corosync Cluster Engine.

This is the output of systemctl status pve-cluster corosync -l on node2:

root@dell-r730-xd-2:~# systemctl status pve-cluster corosync -l

● pve-cluster.service - The Proxmox VE cluster filesystem

Loaded: loaded (/lib/systemd/system/pve-cluster.service; enabled; vendor preset: enabled)

Active: active (running) since Fri 2020-12-18 20:00:26 IST; 3 weeks 2 days ago

Process: 2513 ExecStart=/usr/bin/pmxcfs (code=exited, status=0/SUCCESS)

Main PID: 2523 (pmxcfs)

Tasks: 10 (limit: 12287)

Memory: 85.6M

CGroup: /system.slice/pve-cluster.service

└─2523 /usr/bin/pmxcfs

Jan 11 17:00:25 dell-r730-xd-2 pmxcfs[2523]: [dcdb] notice: data verification successful

Jan 11 18:00:25 dell-r730-xd-2 pmxcfs[2523]: [dcdb] notice: data verification successful

Jan 11 18:00:31 dell-r730-xd-2 pmxcfs[2523]: [status] notice: received log

Jan 11 18:07:29 dell-r730-xd-2 pmxcfs[2523]: [status] notice: received log

Jan 11 18:14:26 dell-r730-xd-2 pmxcfs[2523]: [status] notice: received log

Jan 11 18:14:38 dell-r730-xd-2 pmxcfs[2523]: [status] notice: received log

Jan 11 18:15:54 dell-r730-xd-2 pmxcfs[2523]: [status] notice: received log

Jan 11 18:15:55 dell-r730-xd-2 pmxcfs[2523]: [status] notice: received log

Jan 11 18:22:21 dell-r730-xd-2 pmxcfs[2523]: [status] notice: received log

Jan 11 18:37:22 dell-r730-xd-2 pmxcfs[2523]: [status] notice: received log

● corosync.service - Corosync Cluster Engine

Loaded: loaded (/lib/systemd/system/corosync.service; enabled; vendor preset: enabled)

Active: active (running) since Fri 2020-12-18 20:00:26 IST; 3 weeks 2 days ago

Docs: man:corosync

man:corosync.conf

man:corosync_overview

Main PID: 2655 (corosync)

Tasks: 9 (limit: 12287)

Memory: 146.1M

CGroup: /system.slice/corosync.service

└─2655 /usr/sbin/corosync -f

Jan 05 13:44:31 dell-r730-xd-2 corosync[2655]: [MAIN ] Completed service synchronization, ready to provide service.

Jan 05 13:44:34 dell-r730-xd-2 corosync[2655]: [KNET ] link: host: 1 link: 0 is down

Jan 05 13:44:34 dell-r730-xd-2 corosync[2655]: [KNET ] host: host: 1 (passive) best link: 0 (pri: 1)

Jan 05 13:44:34 dell-r730-xd-2 corosync[2655]: [KNET ] host: host: 1 has no active links

Jan 05 13:44:34 dell-r730-xd-2 corosync[2655]: [TOTEM ] Token has not been received in 1237 ms

Jan 05 13:44:35 dell-r730-xd-2 corosync[2655]: [TOTEM ] A processor failed, forming new configuration.

Jan 05 13:44:37 dell-r730-xd-2 corosync[2655]: [TOTEM ] A new membership (2.123) was formed. Members left: 1

Jan 05 13:44:37 dell-r730-xd-2 corosync[2655]: [TOTEM ] Failed to receive the leave message. failed: 1

Jan 05 13:44:37 dell-r730-xd-2 corosync[2655]: [QUORUM] Members[2]: 2 3

Jan 05 13:44:37 dell-r730-xd-2 corosync[2655]: [MAIN ] Completed service synchronization, ready to provide service.
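The corosync lines that matter here are the KNET link-state messages and the TOTEM membership changes. Below is a minimal sketch for pulling only those lines out of a longer log, assuming the log text is piped in on stdin. The function name knet_link_events is hypothetical and is not part of Proxmox or corosync.

```shell
# Hypothetical helper: keep only KNET link-state lines and TOTEM
# membership changes from corosync log text read on stdin.
knet_link_events() {
    grep -E '\[KNET *\].*(is down|has no active links)|\[TOTEM *\].*(Members left|Members joined)'
}
```

It could be used on each node as `journalctl -u corosync --no-pager | knet_link_events` to compare when each side lost its link to the other.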

---------------------

pvecm status of node1

---------------------

Cluster information

-------------------

Name: ####

Config Version: 3

Transport: knet

Secure auth: on

Quorum information

------------------

Date: Mon Jan 11 18:34:05 2021

Quorum provider: corosync_votequorum

Nodes: 1

Node ID: 0x00000001

Ring ID: 1.128

Quorate: No

Votequorum information

----------------------

Expected votes: 3

Highest expected: 3

Total votes: 1

Quorum: 2 Activity blocked

Flags:

Membership information

----------------------

Nodeid Votes Name

0x00000001 1 1.1.1.1 (local)

---------------------

pvecm status of node2

---------------------

Cluster information

-------------------

Name: #####

Config Version: 3

Transport: knet

Secure auth: on

Quorum information

------------------

Date: Mon Jan 11 18:38:20 2021

Quorum provider: corosync_votequorum

Nodes: 2

Node ID: 0x00000002

Ring ID: 2.123

Quorate: Yes

Votequorum information

----------------------

Expected votes: 3

Highest expected: 3

Total votes: 2

Quorum: 2

Flags: Quorate

Membership information

----------------------

Nodeid Votes Name

0x00000002 1 1.1.1.2 (local)

0x00000003 1 1.1.1.3

---------------------

pvecm status of node3

---------------------

Cluster information

-------------------

Name: #####

Config Version: 3

Transport: knet

Secure auth: on

Quorum information

------------------

Date: Mon Jan 11 18:38:20 2021

Quorum provider: corosync_votequorum

Nodes: 3

Node ID: 0x00000002

Ring ID: 2.123

Quorate: Yes

Votequorum information

----------------------

Expected votes: 3

Highest expected: 3

Total votes: 2

Quorum: 2

Flags: Quorate

Membership information

----------------------

Nodeid Votes Name

0x00000002 1 1.1.1.2

0x00000003 1 1.1.1.3 (local)
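The vote counts above explain why node1 reports "Activity blocked" while node2 and node3 stay quorate. Here is a small sketch of that arithmetic, assuming plain majority quorum with one vote per node and no special votequorum options. The function names are hypothetical helpers, not Proxmox commands.

```shell
# Assumption: simple majority quorum, one vote per node.
quorum_needed() {
    expected_votes=$1
    echo $(( expected_votes / 2 + 1 ))
}

# Succeeds (exit 0) when the partition holds enough votes.
is_quorate() {
    total_votes=$1
    expected_votes=$2
    [ "$total_votes" -ge "$(quorum_needed "$expected_votes")" ]
}

quorum_needed 3   # prints 2, matching "Quorum: 2" in the output above
is_quorate 1 3 || echo "node1 alone: not quorate"
is_quorate 2 3 && echo "node2 + node3: quorate"
```

With 3 expected votes the threshold is 2, so the single-node partition (1 vote) is blocked and the two-node partition (2 votes) keeps running.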
