I have a Proxmox node with 126 GB of RAM, actively running 10 Linux and Windows VMs. Users have been reporting issues with some of these VMs that seem to coincide with high swap usage on the node, even though total RAM usage is below 50%. To ease the swap load, I made two changes on the node:

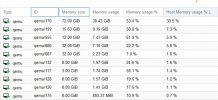

- I lowered the node's swappiness (`vm.swappiness`) from the default of 60 to 10.

- I increased swap capacity from 8 GB to 56 GB by progressively adding 8 GB swap files on the node's `local` storage. This has helped reduce what I'll call "swap pressure" on some of our other nodes.
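Both changes can be confirmed from `/proc` (the same information is available from `sysctl vm.swappiness` and `swapon --show`). The following is a minimal Python sketch, not a Proxmox tool: it reads the live value from `/proc/sys/vm/swappiness` and totals the swap areas listed in `/proc/swaps`, whose Size and Used columns are in KiB. The function names are illustrative only.

```python
from pathlib import Path


def parse_proc_swaps(text):
    """Parse /proc/swaps (Filename Type Size Used Priority); sizes are in KiB."""
    areas = []
    for line in text.splitlines()[1:]:  # skip the column header
        fields = line.split()
        if len(fields) != 5:
            continue
        name, kind, size, used, prio = fields
        areas.append({"name": name, "type": kind, "size_kib": int(size),
                      "used_kib": int(used), "priority": int(prio)})
    return areas


def summarize(areas):
    """Return (total size, total used) in KiB across all swap areas."""
    return (sum(a["size_kib"] for a in areas),
            sum(a["used_kib"] for a in areas))


def main():
    try:
        swappiness = int(Path("/proc/sys/vm/swappiness").read_text())
        areas = parse_proc_swaps(Path("/proc/swaps").read_text())
    except OSError as exc:
        print(f"Cannot read swap settings (not Linux?): {exc}")
        return
    gib = 1024 ** 2  # KiB per GiB
    print(f"vm.swappiness = {swappiness}")
    for a in areas:
        print(f"{a['name']:<32} {a['type']:<9} {a['size_kib'] / gib:6.1f} GiB, "
              f"{a['used_kib'] / gib:6.1f} GiB used, priority {a['priority']}")
    size, used = summarize(areas)
    print(f"Total: {used / gib:.1f} of {size / gib:.1f} GiB in use")


if __name__ == "__main__":
    main()
```

Because it only reads from `/proc`, it is safe to run on a live node; it changes nothing.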

Digging deeper into memory and swap utilization with `smem` reveals the following:

```
root@node:~# smem -s swap -r | head -n 15
  PID User     Command                        Swap      USS      PSS      RSS
11938 root     /usr/bin/kvm -id 170 -name 42599700 22674700 23489570 27412972
13235 root     /usr/bin/kvm -id 667 -name 25685552  1296276  1611772  7527292
18462 root     /usr/bin/kvm -id 666 -name 25408420  2421920  2731459  7814792
21446 root     /usr/bin/kvm -id 126 -name  5640456   262200   306394   530272
15016 root     /usr/bin/kvm -id 555 -name  2643200  4955380  5481289  6655020
18239 root     /usr/bin/kvm -id 117 -name  2168480   610788   773351  1051332
24143 root     /usr/bin/kvm -id 175 -name  2144240   362392   384634   468244
 2668 root     /usr/bin/kvm -id 132 -name  1829140   603496   670910   850020
12291 root     /usr/bin/kvm -id 199 -name   550876  9824116 10101969 11293832
 5717 root     /usr/bin/kvm -id 128 -name   522652  1636084  1681112  1973628
 7727 www-data pveproxy worker (shutdown)   133792      344      548     4000
 2547 root     pvedaemon                    104684      644     6995    33196
15452 root     pvedaemon worker              90128    12996    20974    48856
 5143 root     pvedaemon worker              90004    12748    20876    48908
```

This seems crazy to me, given how modest the utilization of the node's other resources is. I've reviewed the Proxmox VE Administration Guide, paying particular attention to the following sections:

- 3.8.8. SWAP on ZFS

- 11.5.2. Control Groups (prompted by the discussion in this Proxmox forum thread: https://forum.proxmox.com/threads/swappiness-question.42295/page-2)
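To put the `smem` numbers from the output above in perspective, here is a minimal Python sketch that totals the Swap column per VM. It assumes smem's default unit of KiB and that every kvm command line contains `-id <vmid>`, as in that output; the script name `swap_by_vm.py` and the helper names are hypothetical.

```python
import re
import sys

# Matches the "-id <vmid>" argument visible on each kvm line in the smem output.
VMID_RE = re.compile(r"-id\s+(\d+)")


def parse_smem(text):
    """Parse smem rows (PID User Command Swap USS PSS RSS); values assumed in KiB."""
    rows = []
    for line in text.splitlines():
        fields = line.split()
        # Skip the prompt, the header and blank lines: real rows start with a
        # numeric PID and end with four numeric columns.
        if len(fields) < 7 or not fields[0].isdigit():
            continue
        if not all(f.isdigit() for f in fields[-4:]):
            continue
        swap, uss, pss, rss = (int(f) for f in fields[-4:])
        rows.append({
            "pid": int(fields[0]),
            "user": fields[1],
            "command": " ".join(fields[2:-4]),  # the command may contain spaces
            "swap": swap, "uss": uss, "pss": pss, "rss": rss,
        })
    return rows


def swap_by_vm(rows):
    """Sum the Swap column per VM ID; processes without "-id" are ignored."""
    totals = {}
    for row in rows:
        match = VMID_RE.search(row["command"])
        if match:
            vmid = int(match.group(1))
            totals[vmid] = totals.get(vmid, 0) + row["swap"]
    return totals


def main(path):
    with open(path) as fh:
        totals = swap_by_vm(parse_smem(fh.read()))
    for vmid, kib in sorted(totals.items(), key=lambda kv: kv[1], reverse=True):
        print(f"VM {vmid}: {kib / 1024**2:.1f} GiB in swap")
    print(f"All VMs: {sum(totals.values()) / 1024**2:.1f} GiB in swap")


if __name__ == "__main__" and len(sys.argv) == 2:
    main(sys.argv[1])
```

For example, save the full listing with `smem -s swap -r > smem.txt` (without `head`, so no VM is cut off) and run `python3 swap_by_vm.py smem.txt`.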

I also read How-To Geek's article on swappiness: https://www.howtogeek.com/449691/what-is-swapiness-on-linux-and-how-to-change-it/

After all this, I'm still scratching my head about how best to manage swap in a Proxmox environment. The 2018-19 forum discussion linked above suggests that a solution may involve setting swappiness on the control groups with a script run from rc.local, but that discussion concerned PVE 5.3-x, and my node is running PVE 6.4-13.
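If the per-cgroup approach from that thread still applies, the rc.local script boils down to writing a value into each VM's `memory.swappiness` file. Below is a minimal Python sketch of that idea, not a tested fix. It assumes the cgroup v1 memory controller is mounted at `/sys/fs/cgroup/memory` and that each VM runs in a `<vmid>.scope` under `qemu.slice`; verify both paths on a PVE 6.4 node before relying on it. It only changes scopes that exist when it runs, so a VM started afterwards is not covered.

```python
import sys
from pathlib import Path

# ASSUMPTION: cgroup v1 memory controller with one "<vmid>.scope" per VM
# under qemu.slice. Check that this path exists on your node first.
DEFAULT_ROOT = Path("/sys/fs/cgroup/memory/qemu.slice")


def _swappiness_files(root):
    """Return the memory.swappiness file of every VM scope under root, sorted."""
    if not root.is_dir():
        return []
    return sorted(root.glob("*.scope/memory.swappiness"))


def vm_swappiness(root=DEFAULT_ROOT):
    """Return {scope name: current memory.swappiness} for each VM scope."""
    return {f.parent.name: int(f.read_text()) for f in _swappiness_files(root)}


def set_vm_swappiness(value, root=DEFAULT_ROOT):
    """Write value to every VM scope's memory.swappiness; return the scopes changed."""
    if not 0 <= value <= 100:
        raise ValueError("swappiness must be between 0 and 100")
    changed = []
    for f in _swappiness_files(root):
        f.write_text(f"{value}\n")
        changed.append(f.parent.name)
    return changed


def main(argv):
    # With one argument, apply it first; always print the resulting values.
    if len(argv) == 2:
        changed = set_vm_swappiness(int(argv[1]))
        print(f"Updated {len(changed)} VM scope(s)")
    for scope, value in vm_swappiness().items():
        print(f"{scope}: memory.swappiness = {value}")


if __name__ == "__main__":
    main(sys.argv)
```

Run it as root, e.g. `python3 vm_swappiness.py 10` (hypothetical filename); with no argument it only prints the current per-VM values, which is a safe way to check whether the assumed cgroup layout exists at all.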

What should I be doing to get swap usage under control? Thanks!