Good evening all, I hope you're all well!

I am looking for some assistance with an issue I have recently come across, I am finding that one of my Proxmox Nodes is locking up and appears offline but is still running, this is viewable within the summary page. This issue has been occurring when a program (Pterodactyl Wings) performs a backup. I have been able to rule out this being the issue as I have started a Fresh Proxmox Install and virtual machine.

Hardware:

Dell Optiplex SPF 5050 - Node02

Proxmox Version & Others:

Configuration:

Virtual Machine Configuration:

The issue:

Following the introduction, I have recently found myself experiencing a node within my Cluster that seems to lock out, I am unable to access it to reboot it and it does eventually reboot itself and then has to configure storage for a few minutes. Once it has returned the machine works as expected and there are no issues, the machine runs a game server which performs backups at 01:00:00 every day, I have found once this is activated the machine and Node go into a state where it is uncontactable but seems to still be running but in a frozen state.

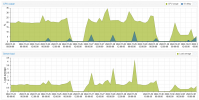

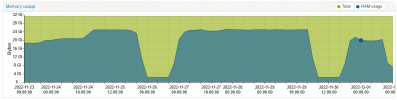

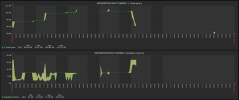

This can also be seen within a Zabix Graph as well where it goes offline and does not come back, it also has Random increase and decrease in Drive Space:

Following this state, I would normally have to start the VM or start the game server, which it would then run without fault, until the next backup and then it would again go into a state where it is still running but can't be reached. I was able to capture the logs before this took place, but they don't indicate anything that was of concern or would indicate an issue:

I have then replicated this by manually running a backup within the VM which takes the host and VM offline, I have not been able to find a way for this to log anything further, I have checked through the logs within /var/logs/ and none of them indicate anything at this time.

Following this issue, I attempted to rebuild the VM and start it again, to which I used SFTP to transfer the old files back to the server, low and behold the same issue when I got the machine to do some work such as moving files or transferring. Transferring from the old machine before I rebuilt it didn't have any issues, but the new one receiving took the host and VM right offline again.

Again I have had no logs whatever for this, so I figured a corruption so I rebuilt the node from scratch, there were no issues at all, up until I transferred the files, it lasted a lot longer, but it did hit this state again, but as I had the machine on my tech bench I was able to hit enter in the window as it said the following:

I have then received RDC errors for ZFS within the local PVE where it says the update must be at least a one-second difference, which can be seen in the attached log.

I have uploaded a file under "2.12.2022.log.txt" with an export of the log, but following this, I was able to get the VM to come back to life and it ran, the Cluster showed the node offline and I could not contact it for a good 5 minutes before it came back online. But it killed my SFTP transfer when it went offline, so it appears this is what allowed it to come back, but I am at a real loss as to why this is taking the entire host offline or freezing and locking.

Would anyone have any suggestions on what I can try, I am unable to really move to the other node I have due to limited resources, but if needed I can have a go, but this node is meant to handle a lot of heavy lifting.

Any help is much appreciated. =)

I am looking for some assistance with an issue I have recently come across: one of my Proxmox nodes locks up and appears offline while still running, as can be seen on its Summary page. This happens when a program (Pterodactyl Wings) performs a backup. I have been able to rule out a corrupted installation as the cause, as I have started again with a fresh Proxmox install and a fresh virtual machine.

Hardware:

Dell OptiPlex 5050 SFF - Node02

- i5-7500

- 32GB of DDR4 @ 2444MHz

- 1x 240GB SanDisk SATA SSD

- 1x 500GB WD Blue HDD

- i5-7500

- 16GB of DDR4 @ 2444MHz

- 1x 240GB SanDisk SATA SSD

- 1x 500GB WD Blue HDD

Proxmox Version & Others:

- Linux 5.15.74-1-pve #1 SMP PVE 5.15.74-1

- pve-manager/7.3-3/c3928077

- Proxmox 7.3.3

Configuration:

- Storage is set up as a ZFS RAID0 pool

- ZFS ARC maximum size: 2GB

- ZFS ARC minimum size: 1GB

- Both nodes have been updated to the latest patches
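For reference, as I understand the Proxmox documentation, these ARC limits are set as byte values on the `zfs_arc_max` and `zfs_arc_min` module parameters in `/etc/modprobe.d/zfs.conf`, followed by `update-initramfs -u` when the root filesystem is on ZFS. The small sketch below only renders that options line and assumes that "2GB" and "1GB" above mean GiB; the function name is hypothetical and this is an illustration, not a copy of the file on the node.

```python
# Sketch: render the modprobe options line for the ZFS ARC limits listed above.
# Assumption: "2GB" / "1GB" mean GiB (2**30 bytes).
GIB = 1024 ** 3


def zfs_arc_options(max_gib: float, min_gib: float) -> str:
    """Return an 'options zfs ...' line suitable for /etc/modprobe.d/zfs.conf."""
    if min_gib > max_gib:
        raise ValueError("zfs_arc_min must not exceed zfs_arc_max")
    arc_max = int(max_gib * GIB)  # bytes
    arc_min = int(min_gib * GIB)  # bytes
    return f"options zfs zfs_arc_max={arc_max} zfs_arc_min={arc_min}"


if __name__ == "__main__":
    print(zfs_arc_options(2, 1))
    # options zfs zfs_arc_max=2147483648 zfs_arc_min=1073741824
```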

Virtual Machine Configuration:

- Processor: 1 socket, 4 cores

- RAM: 24GB

- BIOS: SeaBIOS

- Machine: i440fx

- SCSI controller: VirtIO SCSI

- Hard disk: 200GB on ZFS, IO thread enabled
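As a rough sanity check on memory, the arithmetic below assumes this VM runs on the 32GB node and that the ARC sits at its 2GB cap. It ignores QEMU's per-VM overhead and any ZFS memory outside the ARC, so the result is only an upper bound on what is left for Proxmox itself; the function name is just for illustration.

```python
# Rough memory budget for the node, using figures from this post.
# Assumptions: the VM runs on the 32GB node and the ARC sits at its 2GB cap.
# QEMU per-VM overhead and non-ARC ZFS memory are ignored (upper bound).


def host_headroom_gb(host_ram_gb: float, vm_ram_gb: float, arc_max_gb: float) -> float:
    """RAM (GB) left for the host after the VM allocation and the ARC maximum."""
    return host_ram_gb - vm_ram_gb - arc_max_gb


if __name__ == "__main__":
    left = host_headroom_gb(host_ram_gb=32, vm_ram_gb=24, arc_max_gb=2)
    print(f"Headroom for the host: {left} GB")  # Headroom for the host: 6 GB
```

With the 16GB node's figures the same sum comes out negative (16 - 24 - 2 = -10), which is consistent with not being able to move this VM there as configured.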

The issue:

As described above, one node in my cluster seems to lock up. I am unable to access it to reboot it; it does eventually reboot itself, and then spends a few minutes configuring storage. Once it has come back, the machine works as expected with no issues. The VM runs a game server that performs a backup at 01:00:00 every day, and I have found that once the backup starts, both the VM and the node go into a frozen state where they cannot be contacted but still appear to be running.

This can also be seen in a Zabbix graph, where the node goes offline and does not come back; the graph also shows random increases and decreases in drive space:

After this, I would normally have to start the VM or the game server again, and it would then run without fault until the next backup, at which point it would once again be left running but unreachable. I was able to capture the logs from before this happened, but they do not show anything of concern:

I have since replicated this by manually running a backup inside the VM, which takes both the host and the VM offline. I have not found a way to get it to log anything further; I have checked the logs in /var/log/ and none of them show anything at that time.

Following this, I rebuilt the VM and used SFTP to transfer the old files back to it, and lo and behold, the same issue appeared as soon as the machine had to do some work such as moving or transferring files. Sending the files from the old machine before I rebuilt it caused no issues, but the new VM receiving them took the host and VM straight offline again.

Again, I had no logs whatsoever for this, so I suspected corruption and rebuilt the node from scratch. There were no issues at all until I transferred the files; it lasted a lot longer, but it did hit this state again. As I had the machine on my tech bench, I was able to press Enter in the console window, at which point it displayed the following:

It also had a lot of ZFS errors, which I will paste below:

Dec 02 00:32:59 SRVDEBPROXNOD02 systemd[1]: Stopped Journal Service.

Dec 02 00:32:59 SRVDEBPROXNOD02 systemd[1]: Starting Journal Service...

Dec 02 00:32:59 SRVDEBPROXNOD02 systemd-journald[249823]: Journal started

Dec 02 00:32:59 SRVDEBPROXNOD02 systemd-journald[249823]: System Journal (/var/log/journal/df051a9f50f046179f11e7e5158513a6) is 1.4M, max 4.0G, 3.9G free.

Dec 02 00:32:07 SRVDEBPROXNOD02 systemd[1]: systemd-journald.service: Watchdog timeout (limit 3min)!

Dec 02 00:29:20 SRVDEBPROXNOD02 pve-firewall[1375]: firewall update time (14.015 seconds)

Dec 02 00:32:07 SRVDEBPROXNOD02 systemd[1]: systemd-journald.service: Killing process 551 (systemd-journal) with signal SIGABRT.

Dec 02 00:32:58 SRVDEBPROXNOD02 pve-firewall[1375]: firewall update time (217.217 seconds)

Dec 02 00:32:58 SRVDEBPROXNOD02 pveproxy[1474]: proxy detected vanished client connection

Dec 02 00:25:55 SRVDEBPROXNOD02 pvestatd[1381]: status update time (120.726 seconds)

Dec 02 00:25:55 SRVDEBPROXNOD02 pve-firewall[1375]: firewall update time (120.891 seconds)

Dec 02 00:25:56 SRVDEBPROXNOD02 CRON[214726]: pam_unix(cron:session): session opened for user root(uid=0) by (uid=0)

Dec 02 00:25:56 SRVDEBPROXNOD02 CRON[214779]: (root) CMD (if [ $(date +%w) -eq 0 ] && [ -x /usr/lib/zfs-linux/trim ]; then /usr/lib/zfs-linux/trim; fi)

Dec 02 00:25:56 SRVDEBPROXNOD02 CRON[214726]: pam_unix(cron:session): session closed for user root

Dec 02 00:25:59 SRVDEBPROXNOD02 pve-ha-crm[1465]: loop take too long (123 seconds)

Dec 02 00:26:00 SRVDEBPROXNOD02 pve-ha-lrm[1479]: loop take too long (128 seconds)

Dec 02 00:26:16 SRVDEBPROXNOD02 pmxcfs[1275]: [dcdb] notice: data verification successful

Dec 02 00:28:42 SRVDEBPROXNOD02 kernel: INFO: task zvol:255 blocked for more than 120 seconds.

Dec 02 00:28:42 SRVDEBPROXNOD02 kernel: Tainted: P O 5.15.74-1-pve #1

Dec 02 00:28:42 SRVDEBPROXNOD02 kernel: "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

Dec 02 00:28:42 SRVDEBPROXNOD02 kernel: task:zvol statestack: 0 pid: 255 ppid: 2 flags:0x00004000

Dec 02 00:28:42 SRVDEBPROXNOD02 kernel: Call Trace:

Dec 02 00:28:42 SRVDEBPROXNOD02 kernel: <TASK>

Dec 02 00:28:42 SRVDEBPROXNOD02 kernel: __schedule+0x34e/0x1740

Dec 02 00:28:42 SRVDEBPROXNOD02 kernel: ? kmem_cache_alloc+0x1ab/0x2f0

Dec 02 00:28:42 SRVDEBPROXNOD02 kernel: schedule+0x69/0x110

Dec 02 00:28:42 SRVDEBPROXNOD02 kernel: io_schedule+0x46/0x80

Dec 02 00:28:42 SRVDEBPROXNOD02 kernel: cv_wait_common+0xae/0x140 [spl]

Dec 02 00:28:42 SRVDEBPROXNOD02 kernel: ? wait_woken+0x70/0x70

Dec 02 00:28:42 SRVDEBPROXNOD02 kernel: __cv_wait_io+0x18/0x20 [spl]

Dec 02 00:28:42 SRVDEBPROXNOD02 kernel: txg_wait_synced_impl+0xda/0x130 [zfs]

Dec 02 00:28:42 SRVDEBPROXNOD02 kernel: txg_wait_synced+0x10/0x50 [zfs]

Dec 02 00:28:42 SRVDEBPROXNOD02 kernel: dmu_tx_wait+0x1ee/0x410 [zfs]

Dec 02 00:28:42 SRVDEBPROXNOD02 kernel: dmu_tx_assign+0x170/0x4f0 [zfs]

Dec 02 00:28:42 SRVDEBPROXNOD02 kernel: zvol_write+0x184/0x4b0 [zfs]

Dec 02 00:28:42 SRVDEBPROXNOD02 kernel: zvol_write_task+0x13/0x30 [zfs]

Dec 02 00:28:42 SRVDEBPROXNOD02 kernel: taskq_thread+0x29c/0x4d0 [spl]

Dec 02 00:28:42 SRVDEBPROXNOD02 kernel: ? wake_up_q+0x90/0x90

Dec 02 00:28:43 SRVDEBPROXNOD02 kernel: ? zvol_write+0x4b0/0x4b0 [zfs]

Dec 02 00:28:43 SRVDEBPROXNOD02 kernel: ? taskq_thread_spawn+0x60/0x60 [spl]

Dec 02 00:28:43 SRVDEBPROXNOD02 kernel: kthread+0x127/0x150

Dec 02 00:28:43 SRVDEBPROXNOD02 kernel: ? set_kthread_struct+0x50/0x50

Dec 02 00:28:43 SRVDEBPROXNOD02 kernel: ret_from_fork+0x1f/0x30

Dec 02 00:28:43 SRVDEBPROXNOD02 kernel: </TASK>

Dec 02 00:28:43 SRVDEBPROXNOD02 kernel: INFO: task txg_sync:377 blocked for more than 120 seconds.

Dec 02 00:28:43 SRVDEBPROXNOD02 kernel: Tainted: P O 5.15.74-1-pve #1

Dec 02 00:28:43 SRVDEBPROXNOD02 kernel: "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

Dec 02 00:28:43 SRVDEBPROXNOD02 kernel: task:txg_sync statestack: 0 pid: 377 ppid: 2 flags:0x00004000

Dec 02 00:28:43 SRVDEBPROXNOD02 kernel: Call Trace:

I then received RRDC errors for ZFS on the local PVE node, saying that updates must be at least one second apart; these can be seen in the attached log.
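As I understand it, this message comes from rrdtool, which only accepts an update whose timestamp is later than the last stored one (at one-second resolution). The sketch below models that rule on a plain list of Unix timestamps, to show which updates would be refused if status updates bunched up or arrived out of order after a stall; the function name and sample values are hypothetical, not taken from my log.

```python
# Model of rrdtool's "minimum one second step" rule: each update must carry a
# timestamp strictly later than the last accepted one (whole seconds).
# The sample timestamps are made-up examples, not values from my log.
from typing import List, Tuple


def rejected_updates(timestamps: List[int]) -> List[Tuple[int, int]]:
    """Return (index, timestamp) pairs that rrdtool would refuse."""
    rejected = []
    last = None
    for i, ts in enumerate(timestamps):
        if last is not None and ts <= last:
            rejected.append((i, ts))
            continue  # refused update: last accepted time stays unchanged
        last = ts
    return rejected


if __name__ == "__main__":
    sample = [1669940700, 1669940710, 1669940710, 1669940705, 1669940720]
    print(rejected_updates(sample))  # [(2, 1669940710), (3, 1669940705)]
```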

I have uploaded an export of the log as "2.12.2022.log.txt". After this, I was able to get the VM to come back to life and it ran, although the cluster showed the node as offline and I could not contact it for a good 5 minutes before it came back online. The freeze killed my SFTP transfer, which appears to be what allowed the node to recover, but I am at a real loss as to why this takes the entire host offline or makes it freeze and lock up.
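To pull the relevant lines out of an export like "2.12.2022.log.txt", a minimal sketch such as the one below could be used. The patterns only cover messages that appear in the excerpt above (kernel hung-task reports, `pvestatd`/`pve-firewall` update times, `pve-ha-crm`/`pve-ha-lrm` slow loops and the journald watchdog timeout); the script name `find_stalls.py` is hypothetical.

```python
# Sketch: list the stall-related lines in an exported journal.
# The patterns only cover messages visible in the excerpt in this post.
import re
import sys
from typing import Iterable, List

STALL_PATTERNS = [
    # Kernel hung-task reports, e.g. "INFO: task zvol:255 blocked for more than 120 seconds."
    re.compile(r"INFO: task \S+:\d+ blocked for more than \d+ seconds"),
    # pvestatd / pve-firewall reporting slow status or firewall updates
    re.compile(r"(?:pvestatd|pve-firewall)\[\d+\]: \S+ update time \([\d.]+ seconds\)"),
    # HA manager loops overrunning
    re.compile(r"(?:pve-ha-crm|pve-ha-lrm)\[\d+\]: loop take too long"),
    # journald being killed by the systemd watchdog
    re.compile(r"Watchdog timeout \(limit [^)]+\)"),
]


def stall_lines(lines: Iterable[str]) -> List[str]:
    """Return lines matching any stall pattern, in input order, with surrounding whitespace stripped."""
    return [line.strip() for line in lines if any(p.search(line) for p in STALL_PATTERNS)]


if __name__ == "__main__":
    # Usage: python3 find_stalls.py 2.12.2022.log.txt
    if len(sys.argv) != 2:
        print("usage: find_stalls.py <exported-journal.txt>")
    else:
        with open(sys.argv[1], encoding="utf-8", errors="replace") as fh:
            for hit in stall_lines(fh):
                print(hit)
```

Lines are printed in file order, which in the excerpt above is not strictly chronological.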

Does anyone have any suggestions on what I can try? I cannot really move the workload to my other node due to its limited resources, although I can give it a go if needed; this node is meant to handle a lot of the heavy lifting.

Any help is much appreciated. =)
