Hi there,

As @Osikx already mentioned, the 5-minute downtime after switching a CARP IP from Master to Backup is a problem caused by Hetzner's vSwitch. We ran a few tests yesterday after running into exactly the same issue.

I can also confirm that this is caused by some kind of MAC address caching within the vSwitch when your servers are in different sections (buildings) of Hetzner's datacenter park. The issue basically has nothing to do with Proxmox or OPNsense; it can also be reproduced with a virtual network interface directly on the dedicated server itself.

What we tried yesterday:

- Host A, B, C and D are connected via Hetzner vSwitch.

- Host A at FSN1-DC16 is configured with IP 10.0.0.1

- Host B at FSN1-DC14 is configured with IP 10.0.0.2 and MAC address 00:11:22:33:44:55

- Host C at FSN1-DC4 is not configured

- Host D at FSN1-DC4 is not configured

=> Ping from Host A to 10.0.0.2 (-> B) works perfectly

So we changed the configuration and "moved" the entire virtual network interface (MAC+IP):

- Host B: network interface disabled, so it is no longer configured

- Host C: interface added with the same configuration that was previously on Host B (10.0.0.2 / MAC 00:11:22:33:44:55)

=> Ping from Host A to 10.0.0.2 (-> C) started working after ~5 minutes (the well-known delay)

=> When we tried the same with different MAC addresses on Host B / C, ping worked after ~2 seconds.

=> Sending GARP packets didn't help either.

=> Using different MAC addresses for CARP doesn't seem to be a good solution (and won't work with OPNsense)
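For context, a gratuitous ARP (GARP) is a broadcast ARP packet in which the sender IP and the target IP are both the moved address, so that neighbours update their ARP caches and learning switches see the MAC arrive on the new port. The sketch below only assembles such a frame (ARP announcement style as in RFC 5227, opcode "request") with Python's standard library, using the MAC/IP from our test. It does not send anything; on Linux that would need a raw `AF_PACKET` socket and root. `build_garp` is a hypothetical helper name, not part of any tool we used.

```python
import socket
import struct


def mac_to_bytes(mac: str) -> bytes:
    """Convert 'aa:bb:cc:dd:ee:ff' into 6 raw bytes."""
    return bytes(int(part, 16) for part in mac.split(":"))


def build_garp(mac: str, ip: str) -> bytes:
    """Build a gratuitous ARP Ethernet frame (42 bytes, without padding).

    Sender IP and target IP are both `ip`; the frame goes to the Ethernet
    broadcast address so every station on the segment sees `mac` for `ip`.
    """
    src = mac_to_bytes(mac)
    ip_bytes = socket.inet_aton(ip)
    # Ethernet header: broadcast destination, our MAC as source, EtherType ARP.
    ethernet = b"\xff" * 6 + src + struct.pack("!H", 0x0806)
    arp = struct.pack(
        "!HHBBH6s4s6s4s",
        1,             # hardware type: Ethernet
        0x0800,        # protocol type: IPv4
        6,             # hardware address length
        4,             # protocol address length
        1,             # opcode: request (ARP announcement)
        src,           # sender hardware address
        ip_bytes,      # sender protocol address
        b"\x00" * 6,   # target hardware address (unused here)
        ip_bytes,      # target protocol address == sender protocol address
    )
    return ethernet + arp


frame = build_garp("00:11:22:33:44:55", "10.0.0.2")
print(len(frame), frame.hex())
```

The frame itself is simple; in our case the problem was not how the GARP looked but that the vSwitch apparently kept the old MAC location anyway.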

We played around a bit more and moved the MAC+IP between the hosts in the same way:

- from Host B to C: 5 min delay

- from Host B to D: 5 min delay

- from Host C to B: 5 min delay

- from Host C to D: ping worked immediately

- from Host D to C: ping worked immediately

- from Host D to B: 5 min delay

=> Moving MAC+IP around within the same building (FSN1-DC4) worked without any delay
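The move results above boil down to one rule: the delay only disappears when the old and the new host are in the same building. A small Python sketch that encodes our observations and checks that rule against every move; the building names come from the setup list, and the rule is only our interpretation of the results, not something Hetzner documents.

```python
# Building (datacenter) of each host, taken from the test setup.
LOCATION = {"B": "FSN1-DC14", "C": "FSN1-DC4", "D": "FSN1-DC4"}

# Observed delay when moving MAC+IP from one host to another.
OBSERVED = {
    ("B", "C"): "5 min",
    ("B", "D"): "5 min",
    ("C", "B"): "5 min",
    ("C", "D"): "immediate",
    ("D", "C"): "immediate",
    ("D", "B"): "5 min",
}


def predict(src: str, dst: str) -> str:
    """Hypothesis: the vSwitch relearns the MAC quickly only within one building."""
    return "immediate" if LOCATION[src] == LOCATION[dst] else "5 min"


mismatches = [move for move, seen in OBSERVED.items() if predict(*move) != seen]
print("hypothesis fits all moves" if not mismatches else f"mismatches: {mismatches}")
# prints "hypothesis fits all moves"
```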

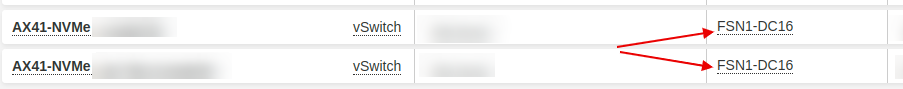

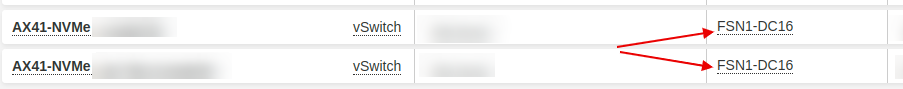

==> With this knowledge, we ordered a move of Host B to FSN1-DC16 so that Host A and B are in the same DC (cost: 39€)

==> Our CARP IPs seem to be working now...
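To verify a failover after such a move, one option is to time how long the CARP IP stays unreachable. A minimal sketch, assuming a Linux host with iputils `ping` (`-c 1 -W 1`); `ping_once` and `measure_outage` are hypothetical helper names, and the `clock`/`sleep` parameters only exist so the polling loop can be tested without a network.

```python
import subprocess
import time
from typing import Callable, Optional


def ping_once(host: str) -> bool:
    """Send a single ICMP echo (Linux iputils syntax) and report success."""
    result = subprocess.run(
        ["ping", "-c", "1", "-W", "1", host],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    return result.returncode == 0


def measure_outage(
    probe: Callable[[], bool],
    interval: float = 1.0,
    timeout: float = 600.0,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[float]:
    """Poll `probe` until it succeeds.

    Returns the seconds elapsed until the first successful probe,
    or None if the target stayed unreachable for `timeout` seconds.
    """
    start = clock()
    while clock() - start < timeout:
        if probe():
            return clock() - start
        sleep(interval)
    return None
```

Start it right before triggering a failover, e.g. `measure_outage(lambda: ping_once("10.0.0.2"))`. With a 1 s interval (plus up to 1 s ping timeout per probe) the result is only accurate to a second or two, which is still enough to tell ~2 seconds apart from ~5 minutes.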

------------------------------------------------------------

Long story short: CARP IPs via Hetzner vSwitch seem to work if both dedicated hosts are in the same datacenter: