Hi,

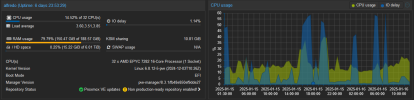

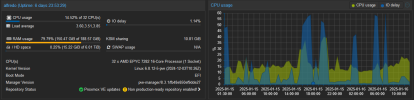

My problem is that when I upload large files to Nextcloud (AIO) running in a VM, or make a copy of a VM, my I/O jumps to 50% and some VMs become unresponsive, e.g. websites on the VMs and on Nextcloud stop working, Windows Server stops responding, and the Proxmox interface times out. For something like copying a VM this is understandable (too much I/O on rpool, which Proxmox itself runs on), but for uploading large files it is not: high I/O on slowpool shouldn't affect VMs on rpool or on the nvme00 pool.

Twice it got so laggy that I had to reboot Proxmox, and once it even couldn't find the Proxmox boot drive, although after many reboots and retries it found it again. Still, this lag is concerning. My question is: what did I do wrong, and what should I change to make it go away?
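To check whether an upload really only loads slowpool, per-pool activity could be sampled on the host while the upload runs. This is only a sketch: the function name `watch_pools` and the 5-second default interval are my own choices, and the pool names are the three from this setup.

```shell
# Sketch: print per-vdev bandwidth and latency for all three pools at a
# fixed interval (default 5 s, an arbitrary choice).
#   -v  per-vdev breakdown
#   -l  include latency columns
#   -y  skip the first report (statistics since boot)
watch_pools() {
    zpool iostat -v -l -y rpool slowpool nvme00 "${1:-5}"
}

# Run on the Proxmox host while an upload is in progress:
#   watch_pools        # 5-second samples
#   watch_pools 1      # 1-second samples
```

If rpool or nvme00 show high wait times while only slowpool is being written, that would suggest the pools are not as independent as I assumed.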

My setup:

Some screenshots:

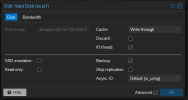

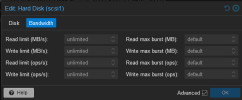

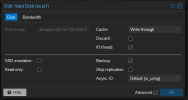

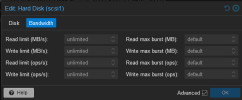

Rich (BB code):

CPU(s): 32 x AMD EPYC 7282 16-Core Processor (1 Socket)

Kernel Version: Linux 6.8.12-5-pve (2024-12-03T10:26Z)

Boot Mode: EFI

Manager Version: pve-manager/8.3.1/fb48e850ef9dde27

Repository Status: Proxmox VE updates; non production-ready repository enabled!

Rich (BB code):

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

nvme00 3.48T 519G 2.98T - - 8% 14% 1.00x ONLINE -

rpool 11.8T 1.67T 10.1T - - 70% 14% 1.76x ONLINE -

slowpool 21.8T 9.32T 12.5T - - 46% 42% 1.38x ONLINE -

Proxmox is on rpool:

Code:

root@alfredo:~# zpool status rpool

pool: rpool

state: ONLINE

scan: scrub repaired 0B in 02:17:09 with 0 errors on Sun Jan 12 02:41:11 2025

config:

NAME STATE READ WRITE CKSUM

rpool ONLINE 0 0 0

raidz1-0 ONLINE 0 0 0

ata-Samsung_SSD_870_EVO_4TB_S6BCNX0T306226Y-part3 ONLINE 0 0 0

ata-Samsung_SSD_870_EVO_4TB_S6BCNX0T304731Z-part3 ONLINE 0 0 0

ata-Samsung_SSD_870_EVO_4TB_S6BCNX0T400242Z-part3 ONLINE 0 0 0

special

mirror-1 ONLINE 0 0 0

nvme-Samsung_SSD_970_EVO_Plus_1TB_S6P7NS0T314087Z ONLINE 0 0 0

nvme-Samsung_SSD_970_EVO_Plus_1TB_S6P7NS0T314095M ONLINE 0 0 0

errors: No known data errors

Code:

root@alfredo:~# zfs get all rpool

NAME PROPERTY VALUE SOURCE

rpool type filesystem -

rpool creation Fri Aug 26 16:14 2022 -

rpool used 1.88T -

rpool available 6.00T -

rpool referenced 120K -

rpool compressratio 1.26x -

rpool mounted yes -

rpool quota none default

rpool reservation none default

rpool recordsize 128K default

rpool mountpoint /rpool default

rpool sharenfs off default

rpool checksum on default

rpool compression on local

rpool atime on local

rpool devices on default

rpool exec on default

rpool setuid on default

rpool readonly off default

rpool zoned off default

rpool snapdir hidden default

rpool aclmode discard default

rpool aclinherit restricted default

rpool createtxg 1 -

rpool canmount on default

rpool xattr on default

rpool copies 1 default

rpool version 5 -

rpool utf8only off -

rpool normalization none -

rpool casesensitivity sensitive -

rpool vscan off default

rpool nbmand off default

rpool sharesmb off default

rpool refquota none default

rpool refreservation none default

rpool guid 5222442941902153338 -

rpool primarycache all default

rpool secondarycache all default

rpool usedbysnapshots 0B -

rpool usedbydataset 120K -

rpool usedbychildren 1.88T -

rpool usedbyrefreservation 0B -

rpool logbias latency default

rpool objsetid 54 -

rpool dedup on local

rpool mlslabel none default

rpool sync standard local

rpool dnodesize legacy default

rpool refcompressratio 1.00x -

rpool written 120K -

rpool logicalused 1.85T -

rpool logicalreferenced 46K -

rpool volmode default default

rpool filesystem_limit none default

rpool snapshot_limit none default

rpool filesystem_count none default

rpool snapshot_count none default

rpool snapdev hidden default

rpool acltype off default

rpool context none default

rpool fscontext none default

rpool defcontext none default

rpool rootcontext none default

rpool relatime on local

rpool redundant_metadata all default

rpool overlay on default

rpool encryption off default

rpool keylocation none default

rpool keyformat none default

rpool pbkdf2iters 0 default

rpool special_small_blocks 128K local

rpool prefetch all default

The data drives are HDDs in slowpool:

Code:

root@alfredo:~# zpool status slowpool

pool: slowpool

state: ONLINE

scan: scrub repaired 0B in 15:09:45 with 0 errors on Sun Jan 12 15:33:49 2025

config:

NAME STATE READ WRITE CKSUM

slowpool ONLINE 0 0 0

raidz2-0 ONLINE 0 0 0

ata-ST6000NE000-2KR101_WSD809PN ONLINE 0 0 0

ata-ST6000NE000-2KR101_WSD7V2YP ONLINE 0 0 0

ata-ST6000NE000-2KR101_WSD7ZMFM ONLINE 0 0 0

ata-ST6000NE000-2KR101_WSD82NLF ONLINE 0 0 0

errors: No known data errors

Code:

root@alfredo:~# zfs get all slowpool

NAME PROPERTY VALUE SOURCE

slowpool type filesystem -

slowpool creation Fri Aug 19 11:33 2022 -

slowpool used 5.99T -

slowpool available 5.93T -

slowpool referenced 4.45T -

slowpool compressratio 1.05x -

slowpool mounted yes -

slowpool quota none default

slowpool reservation none default

slowpool recordsize 128K default

slowpool mountpoint /slowpool default

slowpool sharenfs off default

slowpool checksum on default

slowpool compression on local

slowpool atime on default

slowpool devices on default

slowpool exec on default

slowpool setuid on default

slowpool readonly off default

slowpool zoned off default

slowpool snapdir hidden default

slowpool aclmode discard default

slowpool aclinherit restricted default

slowpool createtxg 1 -

slowpool canmount on default

slowpool xattr on default

slowpool copies 1 default

slowpool version 5 -

slowpool utf8only off -

slowpool normalization none -

slowpool casesensitivity sensitive -

slowpool vscan off default

slowpool nbmand off default

slowpool sharesmb off default

slowpool refquota none default

slowpool refreservation none default

slowpool guid 6841581580145990709 -

slowpool primarycache all default

slowpool secondarycache all default

slowpool usedbysnapshots 0B -

slowpool usedbydataset 4.45T -

slowpool usedbychildren 1.55T -

slowpool usedbyrefreservation 0B -

slowpool logbias latency default

slowpool objsetid 54 -

slowpool dedup on local

slowpool mlslabel none default

slowpool sync standard default

slowpool dnodesize legacy default

slowpool refcompressratio 1.03x -

slowpool written 4.45T -

slowpool logicalused 6.12T -

slowpool logicalreferenced 4.59T -

slowpool volmode default default

slowpool filesystem_limit none default

slowpool snapshot_limit none default

slowpool filesystem_count none default

slowpool snapshot_count none default

slowpool snapdev hidden default

slowpool acltype off default

slowpool context none default

slowpool fscontext none default

slowpool defcontext none default

slowpool rootcontext none default

slowpool relatime on default

slowpool redundant_metadata all default

slowpool overlay on default

slowpool encryption off default

slowpool keylocation none default

slowpool keyformat none default

slowpool pbkdf2iters 0 default

slowpool special_small_blocks 0 default

slowpool prefetch all default

I recently added more NVMe drives and moved the heaviest VMs onto them to free up some I/O on rpool, but it didn't help.

Code:

root@alfredo:~# zpool status nvme00

pool: nvme00

state: ONLINE

scan: scrub repaired 0B in 00:19:11 with 0 errors on Sun Jan 12 00:43:12 2025

config:

NAME STATE READ WRITE CKSUM

nvme00 ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

nvme-SAMSUNG_MZ1L23T8HBLA-00A07_S667NN0X601658 ONLINE 0 0 0

nvme-SAMSUNG_MZ1L23T8HBLA-00A07_S667NN0X601711 ONLINE 0 0 0

errors: No known data errors

VM 100, which runs Nextcloud, has its data stored on slowpool (disk 0):

Full journal log from one event:

I suspect drives are detaching and reattaching while the system is running?

https://chmura.b24.best-it.pl/s/HZxHH2RBtPsdi5j
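To narrow down the detach/reattach suspicion, the kernel messages from the affected boot could be filtered for disk-related events. This is a rough sketch: `filter_disk_events` is a hypothetical helper, and the patterns are my guesses at what link resets and I/O errors usually look like in the kernel log, not strings taken from the log above.

```shell
# Hypothetical helper: keep only log lines that hint at a disk dropping
# off the bus, being reset, or returning I/O errors. Reads from stdin.
filter_disk_events() {
    grep -iE '(^|[^[:alnum:]])ata[0-9]+|nvme[0-9]+|hard resetting link|link is down|I/O error|timed out|timeout'
}

# Usage on the Proxmox host (kernel messages from the previous boot):
#   journalctl -k -b -1 --no-pager | filter_disk_events
```

`-b -1` selects the previous boot; a different offset would be needed if the event happened several reboots ago.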
