Hello Proxmox community,

I am currently working on a small proof of concept build to show off Proxmox and its functionality for my organization.

I have the following hardware:

3x Dell R240

Now, I'm aware that installing an OS on a SD card is not recommended overall but I have to work with what I have due to the limitations of the Dell R240 server.

I created a ZFS pool that contains the 4 Dell SSDs and I've created a Directory on that ZFS pool that should be used for ISO storage. When I try to upload a 4.35GB image to my ISO mount (on ZFS), it seems to cache on the Proxmox local storage and it errors out with the following message: Error '0' occured while receiving the document.

After researching this message, I believe that it is due to low available space but I am not sure how I can extend my PVE partition, if possible.

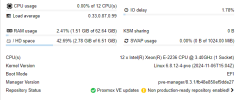

My PVE summary shows the following:

I added /dev/sdf1, which is a USB drive to see if I can store the ISO images on there but it still errors out when trying to upload .ISO images.

Aside from getting larger SD cards, any suggestions if its possible to shrink pve-data a bit?

I don't plan to store anything on the 16GB SD card except for the Proxmox OS install.

I am currently working on a small proof-of-concept build to showcase Proxmox and its functionality to my organization.

I have the following hardware:

3x Dell R240

- E2236 CPU
- 64GB memory
- 4-port gigabit NIC
- 2-port Mellanox 40/56Gb NIC
- 4x Dell Enterprise PM883 960GB SSDs per server
- Dell IDSDM for the OS installation (2x 16GB microSD cards)

Now, I'm aware that installing an OS on an SD card is generally not recommended, but I have to work with what I have due to the limitations of the Dell R240.

I created a ZFS pool containing the four Dell SSDs, and on that pool I created a Directory storage to be used for ISO images. When I try to upload a 4.35GB image to this ISO storage (on ZFS), the upload seems to be cached on the Proxmox local storage first, and it then fails with the following message: Error '0' occured while receiving the document.

After researching this message, I believe it is caused by low available space (the root filesystem has only 1.6G free, less than the size of the image), but I am not sure how, or whether, I can extend my PVE partition.
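A minimal shell sketch for checking this theory on the host. It assumes (not confirmed in this post) that the GUI upload is first staged in `/var/tmp`, which lives on the `pve-root` volume, before being moved to the target storage, and it treats "4.35GB" as roughly 4,350,000,000 bytes. `avail_kib` and `fits_in_dir` are hypothetical helpers, not Proxmox commands.

```bash
#!/usr/bin/env bash
# Sketch: check whether a directory has room to stage a file of a given size.
# Assumption: GUI uploads are staged in /var/tmp (on pve-root) before being
# moved to the target storage. avail_kib and fits_in_dir are hypothetical helpers.

# Print the free space of the filesystem holding DIR, in KiB.
# -P forces one output line per filesystem; -k forces 1024-byte blocks.
avail_kib() {
    df -Pk -- "$1" | awk 'NR == 2 { print $4 }'
}

# Exit 0 if SIZE_BYTES fits in the free space of DIR, 1 if it does not,
# and 2 if the free space could not be determined.
fits_in_dir() {
    local size_bytes="$1" dir="$2" avail
    avail=$(avail_kib "$dir")
    [ -n "$avail" ] || return 2
    (( size_bytes <= avail * 1024 ))
}
```

On the host, `fits_in_dir 4350000000 /var/tmp || echo "not enough room in /var/tmp"` should report a shortfall, given that `df -h` shows only 1.6G available on `/`.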

My PVE summary shows the following:

```
root@5079:/var/tmp# lsblk
NAME               MAJ:MIN RM   SIZE RO TYPE MOUNTPOINTS
sda                  8:0    0 894.3G  0 disk
├─sda1               8:1    0 894.2G  0 part
└─sda9               8:9    0     8M  0 part
sdb                  8:16   0 894.3G  0 disk
├─sdb1               8:17   0 894.2G  0 part
└─sdb9               8:25   0     8M  0 part
sdc                  8:32   0 894.3G  0 disk
├─sdc1               8:33   0 894.2G  0 part
└─sdc9               8:41   0     8M  0 part
sdd                  8:48   0 894.3G  0 disk
├─sdd1               8:49   0 894.2G  0 part
└─sdd9               8:57   0     8M  0 part
sde                  8:64   0  14.9G  0 disk
├─sde1               8:65   0  1007K  0 part
├─sde2               8:66   0   512M  0 part /boot/efi
└─sde3               8:67   0  14.4G  0 part
  ├─pve-swap       252:0    0     1G  0 lvm  [SWAP]
  ├─pve-root       252:1    0   6.7G  0 lvm  /
  ├─pve-data_tmeta 252:2    0     1G  0 lvm
  │ └─pve-data     252:4    0   4.7G  0 lvm
  └─pve-data_tdata 252:3    0   4.7G  0 lvm
    └─pve-data     252:4    0   4.7G  0 lvm
```

```
root@5079:/var/tmp# lvs -a
  LV              VG  Attr        LSize Pool Origin Data%  Meta%  Move Log Cpy%Sync Convert
  data            pve twi-a-tz--  4.70g              0.00   1.57
  [data_tdata]    pve Twi-ao----  4.70g
  [data_tmeta]    pve ewi-ao----  1.00g
  [lvol0_pmspare] pve ewi-------  1.00g
  root            pve -wi-ao---- <6.71g
  swap            pve -wi-ao----  1.00g
```
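The sizes in the `lvs -a` output add up to roughly the 14.4G `sde3` partition, and they show how much of the SD card is tied up in the `data` thin pool. A small sketch that totals them, assuming the input comes from `lvs -a --noheadings --units g --nosuffix -o lv_name,lv_attr,lv_size pve` (a different field list than the command above). The thin pool LV `data` itself is skipped because its space is already counted through its hidden `[data_tdata]` volume. `summarize_lvs` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: total the LV sizes in a VG and the part tied to the thin pool.
# Expected input: "name attr size" per line, sizes in GiB without a unit suffix,
# e.g. from: lvs -a --noheadings --units g --nosuffix -o lv_name,lv_attr,lv_size pve
# summarize_lvs is a hypothetical helper, not a Proxmox or LVM command.
summarize_lvs() {
    awk '
        {
            name = $1; attr = $2; size = $3
            sub(/^</, "", size)        # lvs prefixes rounded-down sizes with "<"
            if (attr ~ /^t/) next      # thin pool LV: counted via its [*_tdata] volume
            total += size
            # Thin pool data/metadata volumes and the pool metadata spare
            if (name ~ /^\[.*_t(data|meta)]$/ || name ~ /_pmspare]$/) pool += size
        }
        END { printf "total=%.2f pool=%.2f\n", total, pool }
    '
}
```

Fed the six values shown above (`data`, `[data_tdata]`, `[data_tmeta]`, `[lvol0_pmspare]`, `root`, `swap`), it prints `total=14.41 pool=6.70`: about 6.7G of the card sits in a thin pool that is currently empty (`Data%` 0.00).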

```
root@5079:/var/tmp# df -h
Filesystem            Size  Used Avail Use% Mounted on
udev                   32G     0   32G   0% /dev
tmpfs                 6.3G  1.5M  6.3G   1% /run
/dev/mapper/pve-root  6.6G  4.6G  1.6G  75% /
tmpfs                  32G   49M   32G   1% /dev/shm
tmpfs                 5.0M     0  5.0M   0% /run/lock
efivarfs              304K   74K  226K  25% /sys/firmware/efi/efivars
/dev/sde2             511M   12M  500M   3% /boot/efi
MPD-PVE-ZFS-01        861G  128K  861G   1% /MPD-PVE-ZFS-01
/dev/fuse             128M   16K  128M   1% /etc/pve
tmpfs                 6.3G     0  6.3G   0% /run/user/0
/dev/sdf1              29G   52K   27G   1% /mnt/pve/ISO_USB
```

I added /dev/sdf1, a USB drive, to see whether I could store the ISO images on it instead, but uploading .ISO images still fails.
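Since the USB drive is already mounted on the host at `/mnt/pve/ISO_USB`, one way to sidestep the browser upload is to put the ISO on the drive from another machine and then copy it on the host into the `template/iso/` subfolder of the Directory storage, which is where Proxmox VE looks for ISO images in a directory-type storage. A minimal sketch; the function name is hypothetical, and so are the paths in the usage line below (`image.iso`, `/MPD-PVE-ZFS-01/iso`), so substitute the file name and the path configured for your Directory storage.

```bash
#!/usr/bin/env bash
# Sketch: copy an ISO that is already on the host into a Directory storage,
# bypassing the browser upload. copy_iso_to_storage is a hypothetical helper.
#   $1 = path to the .iso file on the host (e.g. on the mounted USB drive)
#   $2 = path of the Directory storage (ISOs live in its template/iso/ subfolder)
copy_iso_to_storage() {
    local src="$1" storage_path="${2%/}"
    local dest_dir="$storage_path/template/iso"
    local name
    name=$(basename -- "$src")

    [ -f "$src" ] || { echo "no such file: $src" >&2; return 1; }
    mkdir -p -- "$dest_dir" || return 1

    # Copy under a temporary hidden name, then rename, so a partially copied
    # file never appears in the storage under its final .iso name.
    cp -- "$src" "$dest_dir/.$name.part" &&
        mv -- "$dest_dir/.$name.part" "$dest_dir/$name"
}
```

For example, `copy_iso_to_storage /mnt/pve/ISO_USB/image.iso /MPD-PVE-ZFS-01/iso` copies straight from the USB drive to the ZFS pool, so the image never has to fit on the SD card.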

Aside from getting larger SD cards, does anyone have suggestions on whether it's possible to shrink pve-data a bit?

I don't plan to store anything on the 16GB SD card except for the Proxmox OS install.
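As far as I know, LVM cannot shrink a thin pool, so the approach usually suggested for an OS-only boot disk is to remove the `data` thin pool entirely and give its space to `root`. The sketch below only prints the commands unless `DRY_RUN=0` is set. It assumes the pool is registered in Proxmox under the default storage name `local-lvm` (not shown in this post), that it holds no guest disks (`lvs` shows `Data%` at 0.00), and that a backup exists. `run` and `reclaim_pve_data` are hypothetical helpers.

```bash
#!/usr/bin/env bash
# Sketch: remove the unused pve/data thin pool and grow pve/root into the freed space.
# Assumptions: the pool is the default "local-lvm" storage, it holds no guest disks,
# and a backup exists. Only prints the commands unless DRY_RUN=0.

# Print a command (dry run, the default) or execute it.
run() {
    if [ "${DRY_RUN:-1}" = 0 ]; then
        "$@"
    else
        printf 'would run:'
        printf ' %q' "$@"
        printf '\n'
    fi
}

reclaim_pve_data() {
    # 1. Drop the storage definition so Proxmox no longer references the pool.
    run pvesm remove local-lvm || return 1
    # 2. Remove the thin pool LV (and its hidden tdata/tmeta volumes).
    run lvremove -y pve/data || return 1
    # 3. Extend root into all free space in the VG; --resizefs also grows the filesystem.
    run lvextend --resizefs -l +100%FREE pve/root
}
```

Running `DRY_RUN=0 reclaim_pve_data` as root would apply the changes. With the sizes shown above, `root` should grow by roughly 5.7G to 6.7G, depending on whether the pool metadata spare is removed along with the pool.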
