Hello all,

My plan is to build a 3-node HA cluster including Ceph storage.

I have 3 server nodes, each with 3 network cards: (1) 2 x 25GbE, (2) 2 x 2GbE, (3) 2 x 10GbE.

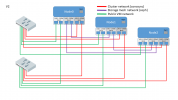

I would like to separate the cluster, storage and VM networks like this:

Is this OK, or is there a better solution (with my existing hardware)?

My second question is about storage. Each node has a number of hard disks attached to a RAID controller (I know an HBA would be better, but I cannot change the hardware). The RAID controller is in JBOD mode; I hope this is OK for Ceph.

Each node has 6 SSDs (2 x 240GB, 4 x 1TB) and 12 HDDs with 2TB each. Is there a Ceph configuration guide on how to use/group the disks?
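To make the disk layout concrete, here is a rough sketch of the raw capacity involved. The disk counts and sizes are the ones listed above. Grouping the disks by type and the replica count of 3 are only my assumptions for the calculation, not something I have configured, and the names in the script are made up for illustration.

```python
# Rough capacity sketch for the hardware described in this post.
# Assumptions (not a configured setup): disks are grouped by type,
# and pools are replicated with 3 copies.

NODES = 3
REPLICAS = 3  # assumed replica count

# Per-node inventory: group name -> (disk count, size per disk in GB)
DISKS_PER_NODE = {
    "ssd_240gb": (2, 240),
    "ssd_1tb": (4, 1000),
    "hdd_2tb": (12, 2000),
}


def raw_capacity_gb(nodes, disks):
    """Raw capacity in GB per disk group, summed over all nodes."""
    return {name: nodes * count * size for name, (count, size) in disks.items()}


def usable_capacity_gb(raw, replicas):
    """Capacity in GB left per group after dividing by the replica count."""
    return {name: gb / replicas for name, gb in raw.items()}


raw = raw_capacity_gb(NODES, DISKS_PER_NODE)
usable = usable_capacity_gb(raw, REPLICAS)

for name in raw:
    print(f"{name}: raw {raw[name]} GB, with {REPLICAS} replicas {usable[name]:.0f} GB")
```

This ignores filesystem overhead and the free space a cluster needs for recovery, so the real usable numbers would be lower.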

Thank you so much, Osti
