Hi everyone and thanks for this awesome piece of software!

I am running a 2-node Proxmox cluster using a QDevice for quorum. At the same time I am using Linstor for a 2-node DRBD cluster, with the QDevice as diskless tiebreaker.

While the QDevice (PVEQ) only has a 1G NIC, the storage/full nodes (PVE0 and PVE1) each have a 1G and a 10G NIC. Traffic between PVE0 and PVE1 should prefer the 10G link and use 1G as backup, while all traffic to PVEQ must go over the 1G network.

There is only one issue with this configuration: the creation of resources in Linstor at VM creation (or backup restore).

When Proxmox tries to create a resource, it does so without knowing the correct NICs and connection paths to use. Additionally, Proxmox "forgets" to create the resource on PVEQ, which is why every resource creation fails with errors like this:

Code:

NOTICE

Trying to create diskful resource (vm-100-disk-1) on (pve0).

TASK ERROR: unable to create VM 100 - API Return-Code: 500. Message: Could not autoplace resource vm-100-disk-1, because: [{"ret_code":20185089,"message":"Successfully set property key(s): StorPoolName","obj_refs":{"RscDfn":"vm-100-disk-1"},"created_at":"2023-12-06T19:31:54.314898812+01:00"},{"ret_code":20185089,"message":"Resource 'vm-100-disk-1' successfully autoplaced on 1 nodes","details":"Used nodes (storage pool name): 'pve1 (pve-storage)'","obj_refs":{"RscDfn":"vm-100-disk-1"},"created_at":"2023-12-06T19:31:54.314901104+01:00"},{"ret_code":20185089,"message":"Successfully set property key(s): StorPoolName","obj_refs":{"RscDfn":"vm-100-disk-1"},"created_at":"2023-12-06T19:31:54.314901857+01:00"},{"ret_code":4611686018447582995,"message":"Tie breaker resource 'vm-100-disk-1' created on DfltDisklessStorPool","obj_refs":{"RscDfn":"vm-100-disk-1"},"created_at":"2023-12-06T19:31:54.314902492+01:00"},{"ret_code":4611686018447582994,"message":"Resource-definition property 'DrbdOptions/Resource/quorum' updated from 'off' to 'majority' by auto-quorum","obj_refs":{"RscDfn":"vm-100-disk-1"},"created_at":"2023-12-06T19:31:54.314903161+01:00"},{"ret_code":4611686018447582994,"message":"Resource-definition property 'DrbdOptions/Resource/on-no-quorum' updated from 'off' to 'io-error' by auto-quorum","obj_refs":{"RscDfn":"vm-100-disk-1"},"created_at":"2023-12-06T19:31:54.314903809+01:00"},{"ret_code":-4611686018407201818,"message":"(Node: 'pveq') Failed to adjust DRBD resource vm-100-disk-1","error_report_ids":["656DDDDE-B0F21-000069"],"obj_refs":{"RscDfn":"vm-100-disk-1"},"created_at":"2023-12-06T19:31:54.499428374+01:00"},{"ret_code":20185091,"message":"Added peer(s) 'pve1' to resource 'vm-100-disk-1' on 'pve0'","obj_refs":{"RscDfn":"vm-100-disk-1"},"created_at":"2023-12-06T19:31:54.763360554+01:00"},{"ret_code":20185091,"message":"Created resource 'vm-100-disk-1' on 'pve1'","obj_refs":{"RscDfn":"vm-100-disk-1"},"created_at":"2023-12-06T19:31:58.264267846+01:00"}] at 
/usr/share/perl5/PVE/Storage/Custom/LINSTORPlugin.pm line 327. PVE::Storage::Custom::LINSTORPlugin::alloc_image("PVE::Storage::Custom::LINSTORPlugin", "pve_storage", HASH(0x56549af981e0), 100, "raw", undef, 33554432) called at /usr/share/perl5/PVE/Storage.pm line 994 eval {...} called at /usr/share/perl5/PVE/Storage.pm line 994 PVE::Storage::__ANON__() called at /usr/share/perl5/PVE/Cluster.pm line 648 eval {...} called at /usr/share/perl5/PVE/Cluster.pm line 614 PVE::Cluster::__ANON__("storage-pve_storage", undef, CODE(0x56549af73a58)) called at /usr/share/perl5/PVE/Cluster.pm line 693 PVE::Cluster::cfs_lock_storage("pve_storage", undef, CODE(0x56549af73a58)) called at /usr/share/perl5/PVE/Storage/Plugin.pm line 606 PVE::Storage::Plugin::cluster_lock_storage("PVE::Storage::Custom::LINSTORPlugin", "pve_storage", 1, undef, CODE(0x56549af73a58)) called at /usr/share/perl5/PVE/Storage.pm line 999 PVE::Storage::vdisk_alloc(HASH(0x56549b0b57c0), "pve_storage", 100, "raw", undef, 33554432) called at /usr/share/perl5/PVE/API2/Qemu.pm line 428 PVE::API2::Qemu::__ANON__("scsi0", HASH(0x565494dec580)) called at /usr/share/perl5/PVE/API2/Qemu.pm line 85 PVE::API2::Qemu::__ANON__(HASH(0x56549afa3ba8), CODE(0x56549b05cb28)) called at /usr/share/perl5/PVE/API2/Qemu.pm line 466 eval {...} called at /usr/share/perl5/PVE/API2/Qemu.pm line 466 PVE::API2::Qemu::__ANON__(PVE::RPCEnvironment=HASH(0x5654954e20d0), "root\@pam", HASH(0x56549afa3ba8), "x86_64", HASH(0x56549b0b57c0), 100, undef, HASH(0x56549afa3ba8), ...) 
called at /usr/share/perl5/PVE/API2/Qemu.pm line 1022 eval {...} called at /usr/share/perl5/PVE/API2/Qemu.pm line 1021 PVE::API2::Qemu::__ANON__() called at /usr/share/perl5/PVE/AbstractConfig.pm line 299 PVE::AbstractConfig::__ANON__() called at /usr/share/perl5/PVE/Tools.pm line 259 eval {...} called at /usr/share/perl5/PVE/Tools.pm line 259 PVE::Tools::lock_file_full("/var/lock/qemu-server/lock-100.conf", 1, 0, CODE(0x56549aedad58)) called at /usr/share/perl5/PVE/AbstractConfig.pm line 302 PVE::AbstractConfig::__ANON__("PVE::QemuConfig", 100, 1, 0, CODE(0x5654938aaaf0)) called at /usr/share/perl5/PVE/AbstractConfig.pm line 322 PVE::AbstractConfig::lock_config_full("PVE::QemuConfig", 100, 1, CODE(0x5654938aaaf0)) called at /usr/share/perl5/PVE/API2/Qemu.pm line 1078 PVE::API2::Qemu::__ANON__() called at /usr/share/perl5/PVE/API2/Qemu.pm line 1108 eval {...} called at /usr/share/perl5/PVE/API2/Qemu.pm line 1108 PVE::API2::Qemu::__ANON__("UPID:pve0:002CECB3:065036C9:6570BE13:qmcreate:100:root\@pam:") called at /usr/share/perl5/PVE/RESTEnvironment.pm line 620 eval {...} called at /usr/share/perl5/PVE/RESTEnvironment.pm line 611 PVE::RESTEnvironment::fork_worker(PVE::RPCEnvironment=HASH(0x5654954e20d0), "qmcreate", 100, "root\@pam", CODE(0x56549aecca50)) called at /usr/share/perl5/PVE/API2/Qemu.pm line 1120 PVE::API2::Qemu::__ANON__(HASH(0x56549afa3ba8)) called at /usr/share/perl5/PVE/RESTHandler.pm line 499 PVE::RESTHandler::handle("PVE::API2::Qemu", HASH(0x565499023828), HASH(0x56549afa3ba8)) called at /usr/share/perl5/PVE/HTTPServer.pm line 180 eval {...} called at /usr/share/perl5/PVE/HTTPServer.pm line 141 PVE::HTTPServer::rest_handler(PVE::HTTPServer=HASH(0x565492fd5978), "::ffff:192.168.177.5", "POST", "/nodes/pve0/qemu", HASH(0x56549af6b680), HASH(0x56549af6b9f8), "extjs") called at /usr/share/perl5/PVE/APIServer/AnyEvent.pm line 923 eval {...} called at /usr/share/perl5/PVE/APIServer/AnyEvent.pm line 897 
PVE::APIServer::AnyEvent::handle_api2_request(PVE::HTTPServer=HASH(0x565492fd5978), HASH(0x56549af733f8), HASH(0x56549af6b680), "POST", "/api2/extjs/nodes/pve0/qemu") called at /usr/share/perl5/PVE/APIServer/AnyEvent.pm line 1147 eval {...} called at /usr/share/perl5/PVE/APIServer/AnyEvent.pm line 1139 PVE::APIServer::AnyEvent::handle_request(PVE::HTTPServer=HASH(0x565492fd5978), HASH(0x56549af733f8), HASH(0x56549af6b680), "POST", "/api2/extjs/nodes/pve0/qemu") called at /usr/share/perl5/PVE/APIServer/AnyEvent.pm line 1606 PVE::APIServer::AnyEvent::__ANON__(AnyEvent::Handle=HASH(0x56549af6b578), "ostype=l26&scsi0=pve_storage%3A32%2Ciothread%3Don&net0=virtio"...) called at /usr/lib/x86_64-linux-gnu/perl5/5.36/AnyEvent/Handle.pm line 1505 AnyEvent::Handle::__ANON__(AnyEvent::Handle=HASH(0x56549af6b578)) called at /usr/lib/x86_64-linux-gnu/perl5/5.36/AnyEvent/Handle.pm line 1315 AnyEvent::Handle::_drain_rbuf(AnyEvent::Handle=HASH(0x56549af6b578)) called at /usr/lib/x86_64-linux-gnu/perl5/5.36/AnyEvent/Handle.pm line 2015 AnyEvent::Handle::__ANON__() called at /usr/lib/x86_64-linux-gnu/perl5/5.36/AnyEvent/Loop.pm line 248 AnyEvent::Loop::one_event() called at /usr/lib/x86_64-linux-gnu/perl5/5.36/AnyEvent/Impl/Perl.pm line 46 AnyEvent::CondVar::Base::_wait(AnyEvent::CondVar=HASH(0x565495518b80)) called at /usr/lib/x86_64-linux-gnu/perl5/5.36/AnyEvent.pm line 2034 AnyEvent::CondVar::Base::recv(AnyEvent::CondVar=HASH(0x565495518b80)) called at /usr/share/perl5/PVE/APIServer/AnyEvent.pm line 1920 PVE::APIServer::AnyEvent::run(PVE::HTTPServer=HASH(0x565492fd5978)) called at /usr/share/perl5/PVE/Service/pvedaemon.pm line 52 PVE::Service::pvedaemon::run(PVE::Service::pvedaemon=HASH(0x56549a9df180)) called at /usr/share/perl5/PVE/Daemon.pm line 171 eval {...} called at /usr/share/perl5/PVE/Daemon.pm line 171 PVE::Daemon::__ANON__(PVE::Service::pvedaemon=HASH(0x56549a9df180)) called at /usr/share/perl5/PVE/Daemon.pm line 390 eval {...} called at /usr/share/perl5/PVE/Daemon.pm 
line 379 PVE::Daemon::__ANON__(PVE::Service::pvedaemon=HASH(0x56549a9df180), undef) called at /usr/share/perl5/PVE/Daemon.pm line 551 eval {...} called at /usr/share/perl5/PVE/Daemon.pm line 549 PVE::Daemon::start(PVE::Service::pvedaemon=HASH(0x56549a9df180), undef) called at /usr/share/perl5/PVE/Daemon.pm line 659 PVE::Daemon::__ANON__(HASH(0x565492fce4b0)) called at /usr/share/perl5/PVE/RESTHandler.pm line 499 PVE::RESTHandler::handle("PVE::Service::pvedaemon", HASH(0x56549a9df4c8), HASH(0x565492fce4b0), 1) called at /usr/share/perl5/PVE/RESTHandler.pm line 985 eval {...} called at /usr/share/perl5/PVE/RESTHandler.pm line 968 PVE::RESTHandler::cli_handler("PVE::Service::pvedaemon", "pvedaemon start", "start", ARRAY(0x565492ff5380), ARRAY(0x565492fee368), undef, undef, undef) called at /usr/share/perl5/PVE/CLIHandler.pm line 594 PVE::CLIHandler::__ANON__(ARRAY(0x565492fce678), CODE(0x5654933e0868), undef) called at /usr/share/perl5/PVE/CLIHandler.pm line 673 PVE::CLIHandler::run_cli_handler("PVE::Service::pvedaemon", "prepare", CODE(0x5654933e0868)) called at /usr/bin/pvedaemon line 27

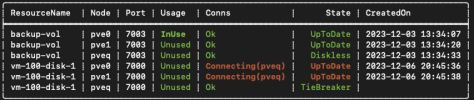

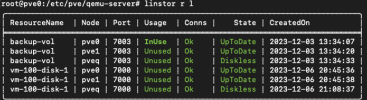

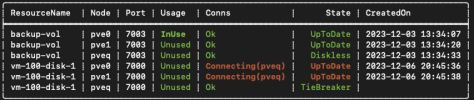

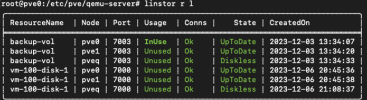

The output of the linstor r l command looks like this afterwards (see the screenshots above).

This can be fixed by running

linstor r create pveq vm-100-disk-1 --diskless

Additionally, the resource connections would need to be set manually after this is done, via

linstor rc path create pve0 pve1 vm-100-disk-1 path1 tg_nic tg_nic

linstor rc path create pve0 pve1 vm-100-disk-1 path2 g_nic g_nic

linstor rc path create pve0 pveq vm-100-disk-1 path1 g_nic g_nic

linstor rc path create pve1 pveq vm-100-disk-1 path1 g_nic g_nic

My question now is: where can I find the resource creation scripts that are run when using Linstor / DRBD as storage, so that I can change them to include these commands in the creation routine?
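To make clearer what I am after: the manual steps above could be wrapped roughly like the sketch below, which only prints the commands for a given resource name. The node names (pve0, pve1, pveq) and interface names (tg_nic, g_nic) are the ones from my setup; the function name linstor_fixup_cmds is made up for this example and is not part of Linstor or the Proxmox plugin.

```shell
#!/bin/sh
# Sketch only: print the LINSTOR commands I currently run by hand after
# Proxmox has created a resource. Nothing is executed here.
# linstor_fixup_cmds is a hypothetical helper name, not an existing tool.
linstor_fixup_cmds() {
    res="$1"
    [ -n "$res" ] || { echo "usage: linstor_fixup_cmds <resource>" >&2; return 1; }

    # Diskless tiebreaker resource on the QDevice
    echo "linstor r create pveq $res --diskless"

    # Two paths between the storage nodes: 10G (tg_nic) and 1G (g_nic)
    echo "linstor rc path create pve0 pve1 $res path1 tg_nic tg_nic"
    echo "linstor rc path create pve0 pve1 $res path2 g_nic g_nic"

    # Only the 1G network towards the QDevice
    echo "linstor rc path create pve0 pveq $res path1 g_nic g_nic"
    echo "linstor rc path create pve1 pveq $res path1 g_nic g_nic"
}
```

Calling linstor_fixup_cmds vm-100-disk-1 prints the five commands for review; piping that output into sh would run them. Ideally something like this would happen inside the creation routine instead of by hand.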

Thanks everyone