Hello everybody,

Proxmox keeps crashing, even though I have set everything up from scratch multiple times.

After a crash, pve is often corrupted. At first it can be repaired with lvconvert --repair pve/data, but eventually the corruption becomes persistent and I have to set everything up all over again.

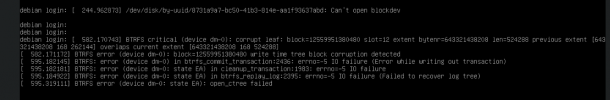

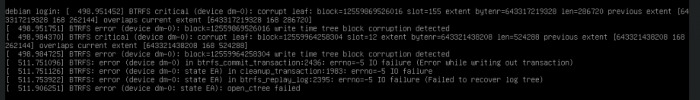

Can anybody make sense of the logs, or tell me how to troubleshoot the issues below and the crashes?

Thank you in advance

dmesg: https://pastebin.com/HnP5SF3v

journal: https://pastebin.com/ghGvbLjU

root@Snake:~# vgchange -a y pve

Check of pool pve/data failed (status:1). Manual repair required!

2 logical volume(s) in volume group "pve" now active

root@Snake:~# lvchange -a y pve/data

Check of pool pve/data failed (status:1). Manual repair required!

root@Snake:~# lvconvert --repair pve/data

Child 4234 exited abnormally

Repair of thin metadata volume of thin pool pve/data failed (status:-1). Manual repair required!
