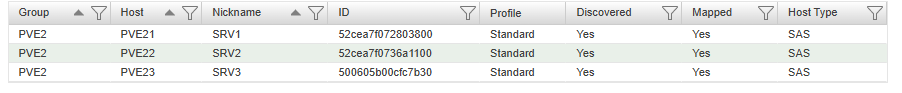

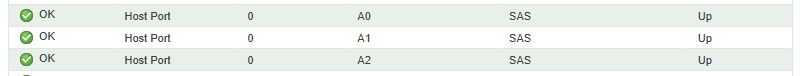

My setup:

2x Dell R640 and 1x Dell R630

1x Dell ME4024

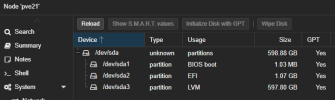

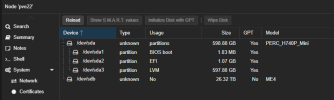

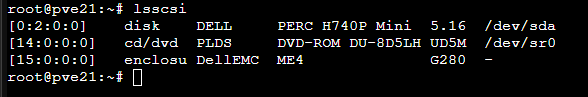

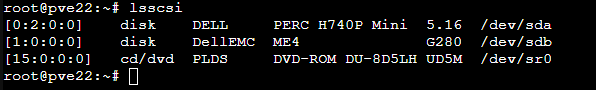

All servers are connected to the ME4024 with one SAS cable per node. I have a PVE cluster with 3 servers, but only one of them can see the ME4024.
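To compare what each node actually sees, something like the following could be run on all three hosts. This is only a minimal sketch, assuming the usual Linux sysfs layout (`/sys/class/sas_host` for SAS HBAs and `/sys/block/sd*/device` for SCSI disks). The function names are made up for illustration and are not part of any Proxmox tooling.

```python
from pathlib import Path


def _read(path):
    """Return the stripped contents of a sysfs attribute, or '' if unreadable."""
    try:
        return Path(path).read_text().strip()
    except OSError:
        return ""


def list_sas_hosts(sysfs_root="/sys"):
    """Names of SCSI hosts that the kernel registered as SAS hosts (HBAs)."""
    sas_dir = Path(sysfs_root) / "class" / "sas_host"
    if not sas_dir.is_dir():
        return []
    return sorted(p.name for p in sas_dir.iterdir())


def list_scsi_disks(sysfs_root="/sys"):
    """(device, vendor, model) for every sd* block device the node can see."""
    block_dir = Path(sysfs_root) / "block"
    if not block_dir.is_dir():
        return []
    disks = []
    for dev in sorted(block_dir.glob("sd*")):
        vendor = _read(dev / "device" / "vendor")
        model = _read(dev / "device" / "model")
        disks.append((dev.name, vendor, model))
    return disks


if __name__ == "__main__":
    print("SAS hosts:", list_sas_hosts())
    for name, vendor, model in list_scsi_disks():
        print(f"{name}: {vendor} {model}")
```

If a node prints a SAS host but no disks from the enclosure, the HBA is detected and the problem is more likely on the cabling or array-mapping side. If it prints no SAS host at all, the HBA or its driver would be the first thing to look at.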

