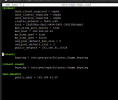

    root@pmox03-scan-hq:~# ceph -s
      cluster:
        id:     7363a620-944a-4321-ad70-d12dd688bac7
        health: HEALTH_WARN
                clock skew detected on mon.pmox01-scan-hq
                1/3 mons down, quorum pmox03-scan-hq,pmox01-scan-hq
                Degraded data redundancy: 2/6 objects degraded (33.333%), 1 pg degraded, 74 pgs undersized
                30053 slow ops, oldest one blocked for 89745 sec, mon.pmox01-scan-hq has slow ops

      services:
        mon: 3 daemons, quorum pmox03-scan-hq,pmox01-scan-hq (age 47m), out of quorum: pmox02-scan-hq
        mgr: pmox03-scan-hq(active, since 49m), standbys: pmox02-scan-hq
        osd: 4 osds: 3 up (since 17m), 3 in (since 7m); 56 remapped pgs

      data:
        pools:   2 pools, 129 pgs
        objects: 2 objects, 2.0 MiB
        usage:   90 MiB used, 5.2 TiB / 5.2 TiB avail
        pgs:     2/6 objects degraded (33.333%)
                 73 active+undersized
                 49 active+clean+remapped
                 6 active+clean
                 1 active+undersized+degraded
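
For anyone reading the numbers in that status output, here is a small sanity check of how they fit together. It is only a sketch: every count is copied from the output above, except `replicas=3`, which is an assumption inferred from the "2/6" figure (2 objects, 6 copies) and is not shown anywhere in the output.

```shell
#!/bin/sh
# Counts copied from the "data:" section of the ceph -s output above.
pgs_total=129
undersized=73
clean_remapped=49
clean=6
undersized_degraded=1

# The per-state PG counts should add up to the total reported under "pools:".
pgs_sum=$((undersized + clean_remapped + clean + undersized_degraded))
echo "PG states sum to $pgs_sum of $pgs_total"

# "74 pgs undersized" in the health section covers every PG whose state
# includes "undersized", i.e. 73 active+undersized plus 1 active+undersized+degraded.
undersized_all=$((undersized + undersized_degraded))
echo "undersized PGs: $undersized_all"

# Assumption (not in the output): pool replica size is 3, so 2 objects
# should exist as 6 copies; 2 of those copies are reported as degraded.
objects=2
replicas=3
copies=$((objects * replicas))
degraded_copies=2
degraded_pct=$(awk -v d="$degraded_copies" -v c="$copies" 'BEGIN { printf "%.3f", d / c * 100 }')
echo "degraded: $degraded_copies/$copies ($degraded_pct%)"
```

If the pools actually use a different replica size, the 2/6 line would need a different explanation, so treat that part as a guess until the pool settings are checked.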