Hello all,

I have a Proxmox server (ZFS two-way mirror) and a Proxmox Backup Server (ZFS three-way mirror) that I use for backup and recovery.

This is the second time this year that I have ended up with a corrupted VM after playing around with it (backup, modifying VM files such as qcow2 compression and so on, restore).

I use VMs mostly as separate development environments for convenience (no need to delve into the whys). The thing is, I run licensed software (Embarcadero Delphi, for example) with some miserable licensing traits, such as a limited number of installations: when I need to recreate my dev environment, I have to go begging them to increase the limit.

I had to jump through all those hoops because the Proxmox Backup Server is apparently screwing up my backups.

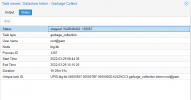

I also noticed this huge "missing chunk" thing when GC is doing its thing... and, funnily enough, it's on the exact same VM again! Luckily, I also do my backups locally on the same server, and this time I got by with an older backup and didn't have to go begging again.

Why is this happening? I attached the output of the GC. I have no errors on the drives, and besides, it's a ZFS three-way mirror, scrubbed weekly and so on, so it should be super duper safe! Why do I have missing chunks?!
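One way to confirm whether specific chunks are really gone from disk is to look them up directly in the datastore. Below is a minimal Python sketch. It assumes the chunk layout that the paths in the restore log suggest, `<datastore>/.chunks/<first four hex digits of the digest>/<digest>`, and that you already have the digests you want to check (for example, copied from the log). The names `chunk_path`, `missing_chunks` and `check_chunks.py` are hypothetical and not part of PBS.

```python
import os
import sys


def chunk_path(datastore: str, digest: str) -> str:
    """Build a chunk's on-disk path, assuming the layout seen in the restore log:
    <datastore>/.chunks/<first 4 hex digits>/<full 64-digit digest>."""
    digest = digest.lower()
    if len(digest) != 64 or any(c not in "0123456789abcdef" for c in digest):
        raise ValueError(f"not a 64-digit hex digest: {digest!r}")
    return os.path.join(datastore, ".chunks", digest[:4], digest)


def missing_chunks(datastore: str, digests):
    """Return the digests whose chunk file does not exist as a regular file."""
    return [d for d in digests if not os.path.isfile(chunk_path(datastore, d))]


# Hypothetical usage: python check_chunks.py /rpool/totem <digest> [<digest> ...]
if __name__ == "__main__" and len(sys.argv) > 2:
    store, *wanted = sys.argv[1:]
    for d in missing_chunks(store, wanted):
        print("missing:", chunk_path(store, d))
```

This only checks for presence; it does not verify that a chunk's contents still match its digest.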

Worth noting: I do my backups weekly. I power up the backup server 4-6 hours before the scheduled backup and power it off the next day (6-8 hours after the backup completes).

For me this completely ruins my trust in PBS... I used to have a plain Linux box with SAMBA as my backup solution. I never had problems with SAMBA, except that if the backup server was offline, Proxmox would just dump everything onto /, which is rather small, causing other complications. PBS, at least, is detected as available or not, and instead of being mounted in some folder it is accessed through its API, which makes it a better fit when the backup server is not always online. The incremental backup is also a tremendous help!
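The incremental behaviour comes from deduplicated, content-addressed chunks, which is also why one missing chunk can break the restore of every snapshot that references it. The toy Python model below illustrates that idea only; it is not PBS code. It assumes fixed-size 4 MiB chunks named by their SHA-256 digest (the 64-hex-digit chunk names in the restore log fit a SHA-256 digest), and it ignores compression, encryption and the real on-disk index format. The functions `backup` and `restore` are hypothetical.

```python
import hashlib

CHUNK_SIZE = 4 * 1024 * 1024  # assumption: fixed-size chunks for VM disk images


def backup(image: bytes, store: dict) -> list:
    """Split an image into fixed-size chunks, store each one under its SHA-256
    digest (only if not already present) and return the ordered list of digests."""
    index = []
    for off in range(0, len(image), CHUNK_SIZE):
        chunk = image[off:off + CHUNK_SIZE]
        digest = hashlib.sha256(chunk).hexdigest()
        store.setdefault(digest, chunk)  # deduplication: identical chunks stored once
        index.append(digest)
    return index


def restore(index: list, store: dict) -> bytes:
    """Reassemble an image from its index; any missing chunk makes the restore fail."""
    missing = [d for d in index if d not in store]
    if missing:
        raise FileNotFoundError(f"{len(missing)} chunk(s) missing, first: {missing[0]}")
    return b"".join(store[d] for d in index)
```

Two snapshots of a mostly unchanged disk share most of their chunks, so a later backup only adds the chunks that changed; the flip side is that losing a shared chunk affects all of those snapshots at once.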

If I can't understand the problem well enough to avoid it, I will just have to do my backups locally, rsync them to NFS/SAMBA weekly and drop PBS altogether... I would very much like NOT to do this, because I love Proxmox!

Thank you all in advance for any thoughts!

P.S.: Regarding the Proxmox server itself... I've been using it since 6.x and have constantly upgraded my hardware and software along the way without any problems! ZFS is, as always, rock solid; I've never lost a single bit of data! It is completely baffling to me that I have this problem with PBS, and it makes me feel that I cannot trust my backups. It's just wrong to make a backup, get "all green", and then, on restore, have your VM trashed just because of this round trip...

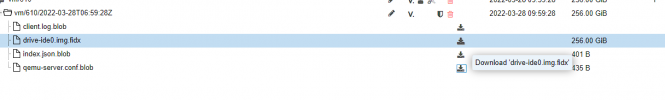

Restoring looks like this on that specific VM:

```text
ProxmoxBackup Server 2.1-5
2022-03-29T00:23:49+03:00: starting new backup reader datastore 'totem': "/rpool/totem"
2022-03-29T00:23:49+03:00: protocol upgrade done
2022-03-29T00:23:49+03:00: GET /download
2022-03-29T00:23:49+03:00: download "/rpool/totem/vm/610/2022-03-28T06:59:28Z/index.json.blob"
2022-03-29T00:23:49+03:00: GET /download
2022-03-29T00:23:49+03:00: download "/rpool/totem/vm/610/2022-03-28T06:59:28Z/drive-ide0.img.fidx"
2022-03-29T00:23:49+03:00: register chunks in 'drive-ide0.img.fidx' as downloadable.
2022-03-29T00:23:49+03:00: GET /chunk
2022-03-29T00:23:49+03:00: download chunk "/rpool/totem/.chunks/43bc/43bcce985c008a4a83d1baaa545913cfad887a28e8512e2430085310b12dd6af"
2022-03-29T00:23:49+03:00: GET /chunk
2022-03-29T00:23:49+03:00: download chunk "/rpool/totem/.chunks/1897/1897243719042b8f1915458acf3fe0b32b9a1e7c3fd961c17f17e168bfbb0b59"
2022-03-29T00:23:49+03:00: GET /chunk: 400 Bad Request: reading file "/rpool/totem/.chunks/1897/1897243719042b8f1915458acf3fe0b32b9a1e7c3fd961c17f17e168bfbb0b59" failed: No such file or directory (os error 2)
2022-03-29T00:23:49+03:00: reader finished successfully
2022-03-29T00:23:49+03:00: TASK OK
```
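Note that the task still ends with "TASK OK" even though a chunk read failed, so it can help to scan saved task logs for such failures explicitly. Here is a minimal Python sketch that pulls out the digest and path of every chunk reported as "No such file or directory". It assumes the exact message format shown in the log above; the function name `missing_from_log` and the idea of saving the log to a file are hypothetical.

```python
import re
import sys

# Matches failure lines like the one in the restore log, e.g.
# GET /chunk: 400 Bad Request: reading file "<path>" failed: No such file or directory (os error 2)
MISSING_RE = re.compile(
    r'reading file "(?P<path>[^"]*/\.chunks/[0-9a-f]{4}/(?P<digest>[0-9a-f]{64}))" failed: '
    r'No such file or directory'
)


def missing_from_log(lines):
    """Return (digest, path) pairs for every chunk the reader could not open."""
    found = []
    for line in lines:
        m = MISSING_RE.search(line)
        if m:
            found.append((m.group("digest"), m.group("path")))
    return found


# Hypothetical usage: python scan_log.py restore-task.log
if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], encoding="utf-8") as fh:
        for digest, path in missing_from_log(fh):
            print(digest, path)
```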