Hey guys,

I've been using NFS for storage with Proxmox for years and have just started playing with iSCSI for the first time. Benchmarking it with fio, it's way, way faster!

I'm running 5 PVE nodes, all connected to the TrueNAS box over both NFS and iSCSI, but I'm trying to work out how to add the disks for iSCSI correctly.

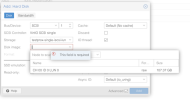

Initially I added the storage via Datacenter -> Storage -> Add -> iSCSI and left "Use LUNs Directly" ticked while testing with fio. But when I tried to migrate a VM to it, I got the error "can't allocate space in iscsi storage".

I then found some forum posts suggesting that I untick "Use LUNs Directly" and then add an LVM storage on top of the LUN. This worked, and I got my first VM migrated and running.
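For reference, here is a rough sketch of what that working setup looks like in `/etc/pve/storage.cfg`, as far as I understand it. The storage names, portal IP, target IQN, and volume group name below are placeholders I made up for illustration, not my real values, and the `base` volume ID is left as a placeholder too.

```
# Hypothetical example: iSCSI target added with "Use LUNs Directly" unticked
iscsi: truenas-iscsi
        portal 192.0.2.10
        target iqn.2005-10.org.freenas.ctl:proxmox
        content none

# Hypothetical example: LVM storage layered on top of LUN 0 of that target
lvm: truenas-lvm
        vgname vg-truenas
        base truenas-iscsi:<LUN volume ID>
        shared 1
        content images,rootdir
```

With `content none` on the iSCSI entry, VM disks are not placed on the LUN directly; they are allocated as logical volumes from the volume group defined in the `lvm` entry.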

For my second VM, I added another extent to the target, associated as LUN 1. When I add an LVM storage on it, it gets added, but every time I try to put a VM on it, it shows 0 available space and I just can't get it to work.

Do I need to create a new target for every single VM?

Any help would be greatly appreciated.
