Hello,

I have a 4-node cluster; each node has a thin LVM pool backed by 4 drives.

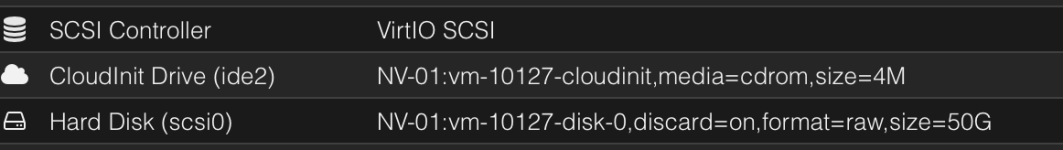

Whenever I create a new VM, the space is not pre-allocated (which is great). But when I migrate a VM from one node to another, the full disk size gets used up on the target node. Is pre-allocation done by default when migrating? If so, how do I avoid it?

TIA!
