Hi everyone,

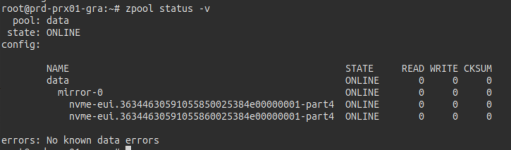

We’ve been deploying several new Proxmox 9 nodes using ZFS as the primary storage, and we’re encountering issues where virtual machines become I/O locked.

When it happens, the VMs are paused with an I/O error. We’re aware this can occur when a host runs out of disk space, but in our case there is plenty of free storage available.

We’ve seen this behavior across multiple hosts, different clusters, and different hardware platforms.

We’ve been running ZFS on Proxmox 8 without any issues. Since these problems only appeared with our Proxmox 9 installations, we’re hesitant to upgrade our existing nodes.

Do you have a checklist or common causes to investigate for this type of I/O error with ZFS on Proxmox?

Thanks in advance.

-

Eliott
