Hello,

Has anyone successfully implemented a professional Proxmox setup with Fibre Channel (FC) SAN storage? I am not referring to IPSAN, but specifically FC-based SAN.

In a clustered environment, I am running into significant issues, particularly during clone and wipe-disk operations. The storage locking appears to be the problem, and Proxmox does not seem to handle it reliably. In my test environment, when I delete or move disks simultaneously from different nodes, the tasks start failing with errors.

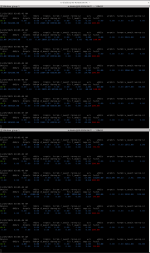

Nov 26 11:59:38 PVE1 pvedaemon[94779]: lvremove 'STR-5TB-HUAWEI-NVME-045/vm-103-disk-0' error: 'storage-STR-5TB-HUAWEI-NVME-045'-locked command timed out - aborting

Nov 26 11:59:38 PVE1 pvedaemon[72268]: <root@pam> end task UPID VE1:0001723B:000F6884:6926C13E:imgdel:103@STR-5TB-HUAWEI-NVME-045:root@pam: lvremove 'STR-5TB-HUAWEI-NVME-045/vm-103-disk-0' error: 'storage-STR-5TB-HUAWEI-NVME-045'-locked command timed out - aborting

VE1:0001723B:000F6884:6926C13E:imgdel:103@STR-5TB-HUAWEI-NVME-045:root@pam: lvremove 'STR-5TB-HUAWEI-NVME-045/vm-103-disk-0' error: 'storage-STR-5TB-HUAWEI-NVME-045'-locked command timed out - aborting
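To make the failure mode concrete, here is how I picture it, as a toy model only. This is not Proxmox's actual locking code: the timeout value, the second node name `PVE2`, and the idea that a long-running operation holds the storage-wide lock for its whole duration are all my assumptions. One node holds the lock longer than the timeout, and the other node's `lvremove` gives up with the same kind of message as in the log above.

```python
import threading
import time

# Hypothetical value; stands in for whatever timeout the real storage lock uses.
LOCK_TIMEOUT = 0.2

# Stands in for the cluster-wide lock on one shared LVM storage (assumption).
storage_lock = threading.Lock()


def locked_op(node, op, duration, results):
    """Run `op` under the storage lock, or abort after LOCK_TIMEOUT seconds."""
    if not storage_lock.acquire(timeout=LOCK_TIMEOUT):
        results[node] = f"{op}: locked command timed out - aborting"
        return
    try:
        time.sleep(duration)  # simulated work done while holding the lock
        results[node] = f"{op}: OK"
    finally:
        storage_lock.release()


def simulate():
    """Two nodes hit the same storage; the slow one takes the lock first."""
    results = {}
    slow = threading.Thread(
        target=locked_op, args=("PVE1", "wipe disk", 0.6, results)
    )
    fast = threading.Thread(
        target=locked_op, args=("PVE2", "lvremove", 0.01, results)
    )
    slow.start()
    time.sleep(0.05)  # give PVE1 time to acquire the lock before PVE2 tries
    fast.start()
    slow.join()
    fast.join()
    return results


if __name__ == "__main__":
    for node, outcome in simulate().items():
        print(node, outcome)
```

If this picture is roughly right, any operation that keeps the lock longer than the timeout would make concurrent operations on other nodes fail, which matches what I see when I delete or move disks from two nodes at once.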

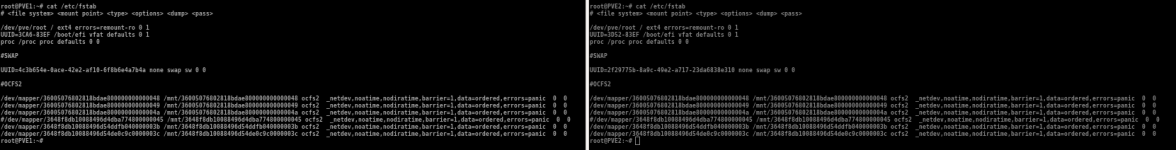

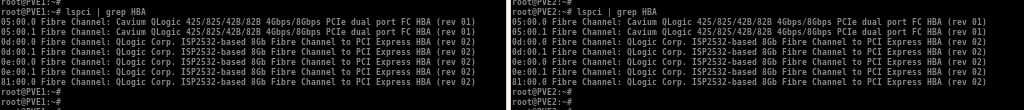

My current setup is as follows:

- Proxmox 9.1.1

- QCOW2 disk format

- Huawei 5000v3 SAN storage → HBA → Linux multipath → LVM → Proxmox (2-node cluster with a QDevice)

If anyone has managed to run this configuration stably, could you share documentation or insights on how you achieved it? The storage lock issues are proving to be a major challenge.
