Yeah, yeah, I know. EOL CPU.

Ceph was working fine under Proxmox 7 using the same CPU.

I tried both a pve7to8 upgrade and a clean install of Proxmox 8.

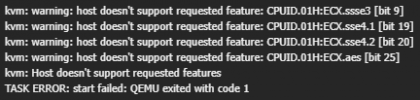

In both cases, starting a Ceph monitor fails with 'Caught signal (illegal instruction)'.

That points to either a bad binary or a build that needs to be recompiled for this CPU.
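One rough way to narrow this down is to see which instruction-set extensions the CPU actually reports. The sketch below parses the `flags` line of `/proc/cpuinfo` (x86 Linux) and lists which of a candidate set are missing. The `CANDIDATE_FLAGS` list and the helper names are my own assumptions for illustration; I don't know which extensions the Proxmox 8 Ceph build actually requires.

```python
from pathlib import Path

# Hypothetical list of extensions to look for. Which ones the Ceph
# packages really need is an assumption, not something confirmed.
CANDIDATE_FLAGS = ["ssse3", "sse4_1", "sse4_2", "popcnt", "avx", "avx2"]


def parse_cpu_flags(cpuinfo_text):
    """Return the set of flags from the first 'flags' line of cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def missing_flags(cpuinfo_text, wanted=CANDIDATE_FLAGS):
    """Return the wanted flags that the CPU does not report, in order."""
    flags = parse_cpu_flags(cpuinfo_text)
    return [flag for flag in wanted if flag not in flags]


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        print("missing:", missing_flags(cpuinfo.read_text()))
```

If something like `sse4_2` or `avx` shows up as missing, that would at least be consistent with the binary using an instruction this CPU does not have, though it would not prove it.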

I've already posted my findings in the Proxmox VE 8.0 released! thread.

Has anyone done a clean install of Proxmox 8 with Ceph on any other CPU?
