Hello

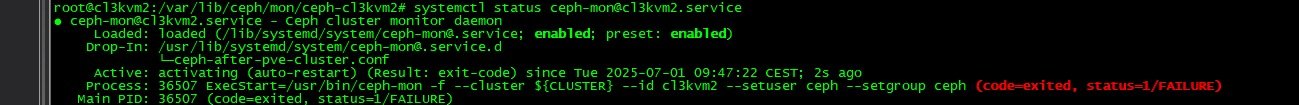

Today one of my nodes crashed due to a SAS controller fault.

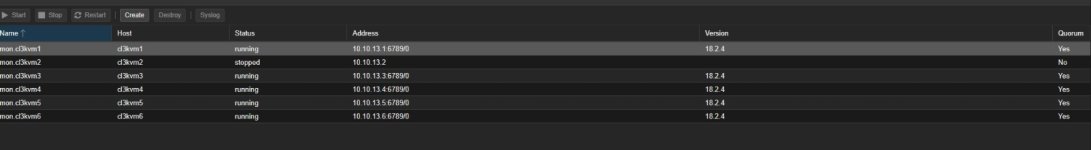

We have replaced the controller, but the Ceph monitor on this node is shown as "STOPPED".

We have destroyed and recreated it and rebooted several times, but nothing changes.

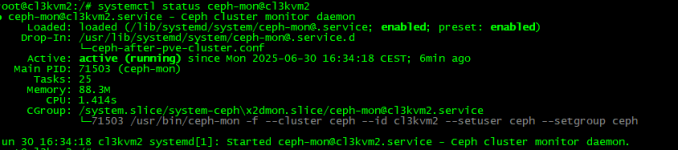

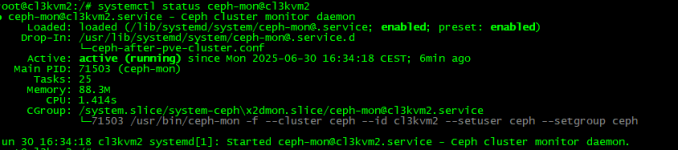

In addition, `systemctl status` shows that the mon is running on that node:

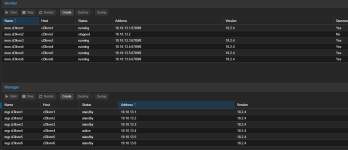

How can I get this mon showing as running again in the Proxmox console?

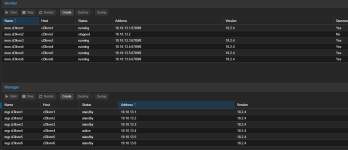

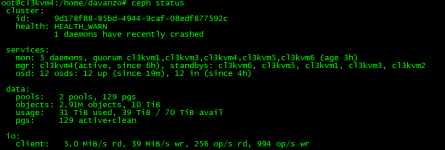

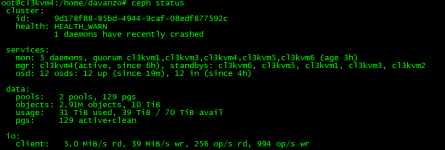

Also, here is the output of `ceph status` on all nodes:

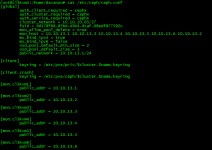

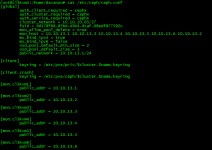

But I see this in ceph.conf:

Thanks for the help