Hello everyone, for a week now I have been trying to solve a problem on a server running Proxmox 7.3.

On December 6th, I found my server completely crashed with a kernel panic. That day I only managed to take one screenshot over IPMI.

Since then, the server has rebooted roughly once every 24 hours, at random times with no clear pattern.

I ran a full hardware test with the vendor's tools (OVHcloud) covering CPU, RAM, and disks, and nothing was flagged. The problem seems to come from software rather than hardware, because the server reboots by itself; there was no power outage or manual shutdown.

The server runs on the following hardware:

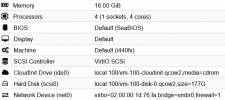

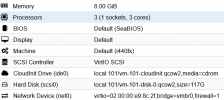

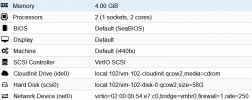

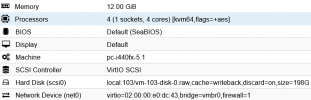

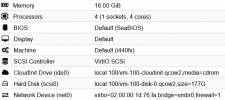

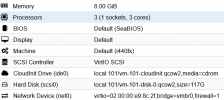

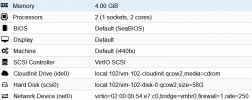

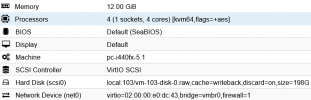

- AMD Ryzen 5 3600X - 6c/12t - 3.8 GHz/4.4 GHz

- 64 GB ECC 2666 MHz

- 2×500 GB NVMe SSD

Code:

proxmox-ve: 7.3-1 (running kernel: 5.19.17-1-pve)

pve-manager: 7.3-3 (running version: 7.3-3/c3928077)

pve-kernel-5.15: 7.2-14

pve-kernel-5.19: 7.2-14

pve-kernel-helper: 7.2-14

pve-kernel-5.19.17-1-pve: 5.19.17-1

pve-kernel-5.15.74-1-pve: 5.15.74-1

pve-kernel-5.15.64-1-pve: 5.15.64-1

ceph-fuse: 14.2.21-1

corosync: 3.1.7-pve1

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown2: 3.1.0-1+pmx3

libjs-extjs: 7.0.0-1

libknet1: 1.24-pve2

libproxmox-acme-perl: 1.4.2

libproxmox-backup-qemu0: 1.3.1-1

libpve-access-control: 7.2-5

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.3-1

libpve-guest-common-perl: 4.2-3

libpve-http-server-perl: 4.1-5

libpve-storage-perl: 7.3-1

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 5.0.0-3

lxcfs: 4.0.12-pve1

novnc-pve: 1.3.0-3

proxmox-backup-client: 2.3.1-1

proxmox-backup-file-restore: 2.3.1-1

proxmox-mini-journalreader: 1.3-1

proxmox-offline-mirror-helper: 0.5.0-1

proxmox-widget-toolkit: 3.5.3

pve-cluster: 7.3-1

pve-container: 4.4-2

pve-docs: 7.3-1

pve-edk2-firmware: 3.20220526-1

pve-firewall: 4.2-7

pve-firmware: 3.5-6

pve-ha-manager: 3.5.1

pve-i18n: 2.8-1

pve-qemu-kvm: 7.1.0-4

pve-xtermjs: 4.16.0-1

qemu-server: 7.3-1

smartmontools: 7.2-pve3

spiceterm: 3.2-2

swtpm: 0.8.0~bpo11+2

vncterm: 1.7-1

zfsutils-linux: 2.1.6-pve1

On this server I have only 4 virtual machines. Here is their configuration:

The server load was low during the reboots, as this graph shows:

As mentioned above, on December 6th I could only take one screenshot of the crash, and I have no idea what it means:

Two days ago (December 10), I installed auditd and made the logs persistent, which let me catch a few more errors:

Code:

Dec 10 12:03:01 jupiter pvestatd[1499]: VM 102 qmp command failed - VM 102 qmp command 'query-proxmox-support' failed - got timeout

Dec 10 12:03:01 jupiter pvestatd[1499]: status update time (8.023 seconds)

Dec 10 12:03:11 jupiter pvestatd[1499]: VM 102 qmp command failed - VM 102 qmp command 'query-proxmox-support' failed - unable to connect to VM 102 qmp socket - timeout after 51 retries

Dec 10 12:03:11 jupiter pvestatd[1499]: status update time (8.032 seconds)

Dec 10 12:03:17 jupiter kernel: BUG: unable to handle page fault for address: 00000000fffff000

Dec 10 12:03:17 jupiter kernel: #PF: supervisor instruction fetch in kernel mode

Dec 10 12:03:17 jupiter kernel: #PF: error_code(0x0010) - not-present page

Dec 10 12:03:17 jupiter kernel: PGD 0 P4D 0

Dec 10 12:03:17 jupiter kernel: Oops: 0010 [#1] SMP NOPTI

Dec 10 12:03:17 jupiter kernel: CPU: 8 PID: 6303 Comm: CPU 0/KVM Tainted: P O 5.15.74-1-pve #1

Dec 10 12:03:17 jupiter kernel: Hardware name: To Be Filled By O.E.M. To Be Filled By O.E.M./X470D4U2-2T, BIOS L4.03G 12/12/2012

Dec 10 12:03:17 jupiter kernel: RIP: 0010:svm_vcpu_run+0x212/0x8f0 [kvm_amd]

Dec 10 12:03:17 jupiter kernel: Code: 01 0f 87 f6 cb 00 00 41 83 e6 01 75 12 b8 f0 0f ff ff 49 39 85 68 05 00 00 0f 85 8d 05 00 00 0f 01 dd 4c 89 e7 e8 ee 3b f2 ff <4c> 89 e7 e8 06 39 f4 ff 0f 1f 44 00 00 4c 8>

Dec 10 12:03:17 jupiter kernel: RSP: 0018:ffffbbd8d108fc88 EFLAGS: 00010046

Dec 10 12:03:17 jupiter kernel: RAX: 00000000ffff0ff0 RBX: 0000000000033028 RCX: ffff9f1cc12a9540

Dec 10 12:03:17 jupiter kernel: RDX: 000000c001275000 RSI: 0000000000000008 RDI: ffff9f1d2b96cde0

Dec 10 12:03:17 jupiter kernel: RBP: ffffbbd8d108fcc0 R08: 0000000000000000 R09: 0000000000000000

Dec 10 12:03:17 jupiter kernel: R10: 0000000000000000 R11: 0000000000000000 R12: ffff9f1d2b96cde0

Dec 10 12:03:17 jupiter kernel: R13: ffff9f1cccc21000 R14: 0000000000000000 R15: 0000000000000008

Dec 10 12:03:17 jupiter kernel: FS: 00007f4023d65700(0000) GS:ffff9f2bbec00000(0000) knlGS:0000000000000000

Dec 10 12:03:17 jupiter kernel: CS: 0010 DS: 0000 ES: 0000 CR0: 0000000080050033

Dec 10 12:03:17 jupiter kernel: CR2: 00000000fffff000 CR3: 00000001b6dc8000 CR4: 0000000000350ee0

Dec 10 12:03:17 jupiter kernel: Call Trace:

Dec 10 12:03:17 jupiter kernel: <TASK>

Dec 10 12:03:17 jupiter kernel: kvm_arch_vcpu_ioctl_run+0xc77/0x1730 [kvm]

Dec 10 12:03:17 jupiter kernel: ? vcpu_put+0x1b/0x40 [kvm]

Dec 10 12:03:17 jupiter kernel: ? vcpu_put+0x1b/0x40 [kvm]

Dec 10 12:03:17 jupiter kernel: kvm_vcpu_ioctl+0x252/0x6b0 [kvm]

Dec 10 12:03:17 jupiter kernel: ? kvm_vcpu_ioctl+0x2bb/0x6b0 [kvm]

Dec 10 12:03:17 jupiter kernel: ? __fget_files+0x86/0xc0

Dec 10 12:03:17 jupiter kernel: __x64_sys_ioctl+0x95/0xd0

Dec 10 12:03:17 jupiter kernel: do_syscall_64+0x5c/0xc0

Dec 10 12:03:17 jupiter kernel: ? syscall_exit_to_user_mode+0x27/0x50

Dec 10 12:03:17 jupiter kernel: ? do_syscall_64+0x69/0xc0

Dec 10 12:03:17 jupiter kernel: ? syscall_exit_to_user_mode+0x27/0x50

Dec 10 12:03:17 jupiter kernel: ? do_syscall_64+0x69/0xc0

Dec 10 12:03:17 jupiter kernel: ? exit_to_user_mode_prepare+0x37/0x1b0

Dec 10 12:03:17 jupiter kernel: ? syscall_exit_to_user_mode+0x27/0x50

Dec 10 12:03:17 jupiter kernel: ? do_syscall_64+0x69/0xc0

Dec 10 12:03:17 jupiter kernel: ? do_syscall_64+0x69/0xc0

Dec 10 12:03:17 jupiter kernel: ? do_syscall_64+0x69/0xc0

Dec 10 12:03:17 jupiter kernel: entry_SYSCALL_64_after_hwframe+0x61/0xcb

Dec 10 12:03:17 jupiter kernel: RIP: 0033:0x7f402f3dc5f7

Dec 10 12:03:17 jupiter kernel: Code: 00 00 00 48 8b 05 99 c8 0d 00 64 c7 00 26 00 00 00 48 c7 c0 ff ff ff ff c3 66 2e 0f 1f 84 00 00 00 00 00 b8 10 00 00 00 0f 05 <48> 3d 01 f0 ff ff 73 01 c3 48 8b 0d 69 c8 0>

Dec 10 12:03:17 jupiter kernel: RSP: 002b:00007f4023d60408 EFLAGS: 00000246 ORIG_RAX: 0000000000000010

Dec 10 12:03:17 jupiter kernel: RAX: ffffffffffffffda RBX: 000000000000ae80 RCX: 00007f402f3dc5f7

Dec 10 12:03:17 jupiter kernel: RDX: 0000000000000000 RSI: 000000000000ae80 RDI: 0000000000000019

Dec 10 12:03:17 jupiter kernel: RBP: 000055acddbf0e10 R08: 000055acdc156250 R09: 000000000000ffff

Dec 10 12:03:17 jupiter kernel: R10: 0000000000000001 R11: 0000000000000246 R12: 0000000000000000

Dec 10 12:03:17 jupiter kernel: R13: 000055acdc83b140 R14: 0000000000000002 R15: 0000000000000000

Dec 10 12:03:17 jupiter kernel: </TASK>

However, this morning (December 12), the server did not log any error at all and still rebooted by itself without explanation:

Code:

Dec 12 10:34:56 jupiter audit: PROCTITLE proctitle="ebtables-restore"

Dec 12 10:35:01 jupiter audit[215881]: USER_ACCT pid=215881 uid=0 auid=4294967295 ses=4294967295 subj=unconfined msg='op=PAM:accounting grantors=pam_permit acct="root" exe="/usr/sbin/cron" hostname=? addr=? terminal=cron res=success'

Dec 12 10:35:01 jupiter audit[215881]: CRED_ACQ pid=215881 uid=0 auid=4294967295 ses=4294967295 subj=unconfined msg='op=PAM:setcred grantors=pam_permit acct="root" exe="/usr/sbin/cron" hostname=? addr=? terminal=cron res=success'

Dec 12 10:35:01 jupiter audit[215881]: SYSCALL arch=c000003e syscall=1 success=yes exit=1 a0=7 a1=7ffda28aa2c0 a2=1 a3=7ffda28a9fd7 items=0 ppid=1487 pid=215881 auid=0 uid=0 gid=0 euid=0 suid=0 fsuid=0 egid=0 sgid=0 fsgid=0 tty=(none) ses=161 comm="cron" exe="/usr/sbin/cron" subj=unconfined key=(null)

Dec 12 10:35:01 jupiter audit: PROCTITLE proctitle=2F7573722F7362696E2F43524F4E002D66

Dec 12 10:35:01 jupiter audit[215881]: USER_START pid=215881 uid=0 auid=0 ses=161 subj=unconfined msg='op=PAM:session_open grantors=pam_loginuid,pam_env,pam_env,pam_permit,pam_unix,pam_limits acct="root" exe="/usr/sbin/cron" hostname=? addr=? terminal=cron res=success'

Dec 12 10:35:01 jupiter CRON[215881]: pam_unix(cron:session): session opened for user root(uid=0) by (uid=0)

Dec 12 10:35:01 jupiter CRON[215882]: (root) CMD (command -v debian-sa1 > /dev/null && debian-sa1 1 1)

Dec 12 10:35:01 jupiter audit[215881]: CRED_DISP pid=215881 uid=0 auid=0 ses=161 subj=unconfined msg='op=PAM:setcred grantors=pam_permit acct="root" exe="/usr/sbin/cron" hostname=? addr=? terminal=cron res=success'

Dec 12 10:35:01 jupiter audit[215881]: USER_END pid=215881 uid=0 auid=0 ses=161 subj=unconfined msg='op=PAM:session_close grantors=pam_loginuid,pam_env,pam_env,pam_permit,pam_unix,pam_limits acct="root" exe="/usr/sbin/cron" hostname=? addr=? terminal=cron res=success'

Dec 12 10:35:01 jupiter CRON[215881]: pam_unix(cron:session): session closed for user root

Dec 12 10:35:06 jupiter audit[215900]: NETFILTER_CFG table=filter family=7 entries=0 op=xt_replace pid=215900 subj=unconfined comm="ebtables-restor"

Dec 12 10:35:06 jupiter audit[215900]: SYSCALL arch=c000003e syscall=54 success=yes exit=0 a0=3 a1=0 a2=80 a3=56478e812e60 items=0 ppid=1494 pid=215900 auid=4294967295 uid=0 gid=0 euid=0 suid=0 fsuid=0 egid=0 sgid=0 fsgid=0 tty=(none) ses=4294967295 comm="ebtables-restor" exe="/usr/sbin/ebtables-legacy-restore" subj=unconfined key=(null)

Dec 12 10:35:06 jupiter audit: PROCTITLE proctitle="ebtables-restore"

-- Reboot --

Dec 12 10:37:03 jupiter kernel: Linux version 5.19.17-1-pve (build@proxmox) (gcc (Debian 10.2.1-6) 10.2.1 20210110, GNU ld (GNU Binutils for Debian) 2.35.2) #1 SMP PREEMPT_DYNAMIC PVE 5.19.17-1 (Mon, 14 Nov 2022 20:25:12 ()

Dec 12 10:37:03 jupiter kernel: Command line: BOOT_IMAGE=/vmlinuz-5.19.17-1-pve root=UUID=4f67024b-6e50-43af-be0d-19d5384743ad ro nomodeset iommu=pt quiet

If anyone understands what is going on, or can at least point me to where I should look to fix this problem, I would appreciate it.
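In case it helps to compare the reboots with each other, here is a minimal Python sketch that scans a saved journal dump for the `-- Reboot --` marker seen above. For each marker it reports the last entry logged before it, the gap until the first entry after it, and whether a kernel `BUG:`/`Oops:` line showed up shortly before. The function names, the `lookback` window, and the list of crash keywords are my own assumptions, not part of any Proxmox tool. The timestamp pattern only covers the `Dec 12 10:35:06` style shown in the logs above.

```python
import re
from datetime import datetime

# Assumed line shape, based on the excerpts above: "Dec 12 10:35:06 host ..."
STAMP = re.compile(r"^([A-Z][a-z]{2}\s+\d{1,2} \d{2}:\d{2}:\d{2}) ")
# Hypothetical keyword list; extend it if other crash messages show up.
CRASH_HINTS = ("BUG:", "Oops:", "Kernel panic")
REBOOT_MARKER = "-- Reboot --"


def parse_stamp(line):
    """Return the timestamp at the start of a log line, or None if absent."""
    match = STAMP.match(line)
    if not match:
        return None
    # This format carries no year, so strptime falls back to 1900.
    # That is good enough for measuring a gap of a few minutes.
    return datetime.strptime(match.group(1), "%b %d %H:%M:%S")


def summarize_reboots(lines, lookback=200):
    """Describe what was logged around each reboot marker."""
    lines = [line.rstrip("\n") for line in lines]
    reports = []
    for i, line in enumerate(lines):
        if line.strip() != REBOOT_MARKER:
            continue
        window = lines[max(0, i - lookback):i]
        stamped_before = [entry for entry in window if parse_stamp(entry)]
        first_after = next(
            (entry for entry in lines[i + 1:] if parse_stamp(entry)), None
        )
        if not stamped_before or first_after is None:
            continue
        gap = parse_stamp(first_after) - parse_stamp(stamped_before[-1])
        reports.append({
            "last_entry": stamped_before[-1],
            "gap_seconds": gap.total_seconds(),
            "crash_hints": [
                entry for entry in window
                if any(hint in entry for hint in CRASH_HINTS)
            ],
        })
    return reports
```

Assuming the journal was saved with something like `journalctl --no-pager > journal.txt`, calling `summarize_reboots(open("journal.txt"))` returns one dictionary per reboot. An empty `crash_hints` list, as for the December 12 reboot, means the kernel got nothing into the journal before the machine went down.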

I have already seen in other posts that it is possible to enable the AES instructions for Windows guests and to disconnect unused ISOs. I even tried installing kernel 5.19, but the server keeps rebooting.
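To double-check which kernel was actually running at each event, a short sketch like the one below can pull the version strings out of the journal. In the logs above, the December 10 oops was recorded under 5.15.74-1-pve, while the December 12 boot banner shows 5.19.17-1-pve. Both regular expressions are assumptions derived from those two line formats only, and `kernel_versions` is a hypothetical helper name.

```python
import re

# Boot banner, e.g. "... kernel: Linux version 5.19.17-1-pve (build@proxmox) ..."
BOOT_RE = re.compile(r"kernel: Linux version (\S+)")
# Oops header, e.g. "... kernel: CPU: 8 PID: 6303 Comm: ... 5.15.74-1-pve #1"
OOPS_RE = re.compile(
    r"kernel: CPU: \d+ PID: \d+ Comm: .* (\d+\.\d+\.\d+\S*) #\d+"
)


def kernel_versions(lines):
    """Yield (kind, version) pairs: 'boot' for banners, 'oops' for crash headers."""
    for line in lines:
        boot = BOOT_RE.search(line)
        if boot:
            yield ("boot", boot.group(1))
            continue
        oops = OOPS_RE.search(line)
        if oops:
            yield ("oops", oops.group(1))
```

Running `list(kernel_versions(open("journal.txt")))` on a saved journal gives the sequence of boots and crashes with their kernel versions, which makes it easier to see whether the reboots continue on 5.19 the same way they did on 5.15.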

Thank you for taking the time to read this.