Greetings!

We had to restore a (net) ~75GB directory using file-level restore.

Why? We have a fileserver that is ~2.5TB in size, and a full restore would have taken a long time.

Restore:

Start time: 0915

Finish time: 1145

Size: 45GB zipped

Calculated throughput: 45,000MB / 9,000sec = 5MB/s
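For reference, here is the same calculation as a small Python sketch. The function name `throughput_mb_per_s` is just something I made up for illustration, and it assumes both timestamps are on the same day and that 45GB means 45,000MB (decimal units).

```python
from datetime import datetime


def throughput_mb_per_s(start: str, finish: str, size_mb: float) -> float:
    """Average throughput in MB/s between two same-day HHMM timestamps."""
    fmt = "%H%M"
    elapsed = datetime.strptime(finish, fmt) - datetime.strptime(start, fmt)
    return size_mb / elapsed.total_seconds()


# Values taken from the restore above: 0915 to 1145, 45GB zipped.
rate = throughput_mb_per_s("0915", "1145", 45_000)
print(f"{rate:.1f} MB/s")
```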

That throughput seems pretty low and we would like to know how to optimize restore speeds for worst-case scenarios.

Full restores seem to be as fast as we would expect them to be (bottleneck = 1Gbit/s network).
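To put the 5MB/s into perspective against the 1Gbit/s link, here is a rough back-of-the-envelope sketch. It assumes decimal units and ignores protocol overhead, so the real line rate is somewhat lower; the helper name `hours_to_transfer` is only illustrative.

```python
LINK_GBIT_PER_S = 1.0
# 1 Gbit/s = 1000 Mbit/s = 125 MB/s (decimal units, no protocol overhead)
link_mb_per_s = LINK_GBIT_PER_S * 1000 / 8

OBSERVED_MB_PER_S = 5.0  # file-level restore throughput measured above


def hours_to_transfer(size_gb: float, rate_mb_per_s: float) -> float:
    """Hours needed to move size_gb gigabytes at rate_mb_per_s."""
    return size_gb * 1000 / rate_mb_per_s / 3600


share_of_link = OBSERVED_MB_PER_S / link_mb_per_s
full_restore_h = hours_to_transfer(2500, link_mb_per_s)      # ~2.5TB fileserver
file_restore_h = hours_to_transfer(45, OBSERVED_MB_PER_S)    # 45GB zip as measured
file_restore_ideal_h = hours_to_transfer(45, link_mb_per_s)  # 45GB zip at line rate

print(f"File-level restore used {share_of_link:.0%} of the link")
print(f"Full restore at line rate: {full_restore_h:.1f} h")
print(f"File-level restore as measured: {file_restore_h:.1f} h")
print(f"File-level restore at line rate: {file_restore_ideal_h * 60:.0f} min")
```

So the file-level restore used only a few percent of what the network can deliver, which is why I suspect the bottleneck is somewhere else.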

I guess it is because the decryption is done on the Proxmox server (as far as I know) and the backup files need to be decrypted before file access is available.

Is it recommended to use multiple backup jobs for multiple VM disks so that we could restore them separately (non file-level)?

This is our setup:

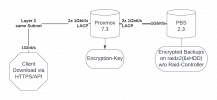

Client and Proxmox Server are on the same Layer 2 network.

The connection from Proxmox to PBS goes through a firewall.

Backups are stored encrypted.

Client:

- KDE neon 5.26 / Ubuntu 22.04

- Network: Dell Dock WD19TB - 1Gbit/s

No other networking issues. Normal 100MB/s file transfer speed to fileserver.

- Google Chrome 108.0.5359.124 (Official Build) (64-bit)

Proxmox Backup Server:

- Versions:

PBS: 2.3.2-1

Linux PBS 5.15.85-1-pve #1 SMP PVE 5.15.85-1 (2023-02-01T00:00Z) x86_64 GNU/Linux

- CPU:

32 x Intel(R) Xeon(R) CPU E5-2620 v4 @ 2.10GHz (2 Sockets)

- RAM:

192GB

- Disks:

6x Western Digital 3,5" 16TB SATA 6Gb/s 7.2k RPM

raidz2 without RAID-controller

Bash:

    root@PBS:~# zpool status
      pool: DATA
     state: ONLINE
      scan: scrub repaired 0B in 2 days 13:37:41 with 0 errors on Tue Jan 10 14:01:42 2023
    config:

            NAME                             STATE     READ WRITE CKSUM
            DATA                             ONLINE       0     0     0
              raidz2-0                       ONLINE       0     0     0
                ata-WDC_WUH721816ALE6L4_***  ONLINE       0     0     0
                ata-WDC_WUH721816ALE6L4_***  ONLINE       0     0     0
                ata-WDC_WUH721816ALE6L4_***  ONLINE       0     0     0
                ata-WDC_WUH721816ALE6L4_***  ONLINE       0     0     0
                ata-WDC_WUH721816ALE6L4_***  ONLINE       0     0     0
                ata-WDC_WUH721816ALE6L4_***  ONLINE       0     0     0
            logs
              mirror-1                       ONLINE       0     0     0
                sda1                         ONLINE       0     0     0
                sdb1                         ONLINE       0     0     0

    errors: No known data errors

    root@PBS:~# zpool iostat -v
                                       capacity     operations     bandwidth
    pool                             alloc   free   read  write   read  write
    -------------------------------  -----  -----  -----  -----  -----  -----
    DATA                             44.9T  42.5T     56    189   797K  16.5M
      raidz2-0                       44.9T  42.5T     56    188   797K  16.3M
        ata-WDC_WUH721816ALE6L4_***      -      -     10     30   140K  2.72M
        ata-WDC_WUH721816ALE6L4_***      -      -      9     31   134K  2.72M
        ata-WDC_WUH721816ALE6L4_***      -      -      8     32   127K  2.72M
        ata-WDC_WUH721816ALE6L4_***      -      -     10     30   138K  2.72M
        ata-WDC_WUH721816ALE6L4_***      -      -      9     31   131K  2.72M
        ata-WDC_WUH721816ALE6L4_***      -      -      8     31   126K  2.72M
    logs                                 -      -      -      -      -      -
      mirror-1                        640K   448G      0      1     11   166K
        sda1                             -      -      0      0      5  83.0K
        sdb1                             -      -      0      0      5  83.0K

    root@PBS:~# zfs get all DATA
    NAME  PROPERTY              VALUE                  SOURCE
    DATA  type                  filesystem             -
    DATA  creation              Mon Jan  3 14:01 2022  -
    DATA  used                  29.9T                  -
    DATA  available             28.2T                  -
    DATA  referenced            288K                   -
    DATA  compressratio         1.08x                  -
    DATA  mounted               yes                    -
    DATA  recordsize            1M                     local
    DATA  mountpoint            /mnt/datastore/DATA    local
    DATA  sharenfs              off                    default
    DATA  checksum              on                     default
    DATA  compression           on                     local
    DATA  atime                 off                    local
    DATA  devices               on                     default
    DATA  exec                  on                     default
    DATA  setuid                on                     default
    DATA  readonly              off                    default
    DATA  zoned                 off                    default
    DATA  snapdir               hidden                 default
    DATA  aclmode               discard                default
    DATA  aclinherit            restricted             default
    DATA  createtxg             1                      -
    DATA  canmount              on                     default
    DATA  xattr                 on                     default
    DATA  copies                1                      default
    DATA  version               5                      -
    DATA  utf8only              off                    -
    DATA  normalization         none                   -
    DATA  casesensitivity       sensitive              -
    DATA  vscan                 off                    default
    DATA  nbmand                off                    default
    DATA  sharesmb              off                    default
    DATA  refquota              none                   default
    DATA  refreservation        none                   default
    DATA  primarycache          all                    default
    DATA  secondarycache        all                    default
    DATA  usedbysnapshots       0B                     -
    DATA  usedbydataset         288K                   -
    DATA  usedbychildren        29.9T                  -
    DATA  usedbyrefreservation  0B                     -
    DATA  logbias               latency                default
    DATA  objsetid              54                     -
    DATA  dedup                 off                    default
    DATA  mlslabel              none                   default
    DATA  sync                  standard               default
    DATA  dnodesize             legacy                 default
    DATA  refcompressratio      1.00x                  -
    DATA  written               288K                   -
    DATA  logicalused           32.2T                  -
    DATA  logicalreferenced     63K                    -
    DATA  volmode               default                default
    DATA  filesystem_limit      none                   default
    DATA  snapshot_limit        none                   default
    DATA  filesystem_count      none                   default
    DATA  snapshot_count        none                   default
    DATA  snapdev               hidden                 default
    DATA  acltype               off                    default
    DATA  context               none                   default
    DATA  fscontext             none                   default
    DATA  defcontext            none                   default
    DATA  rootcontext           none                   default
    DATA  relatime              off                    default
    DATA  redundant_metadata    all                    default

- Network:

Intel Corporation I350 Gigabit Network

Bash:

    vmbr0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            # bridge-ports enp3s0f0
            RX packets 23112614  bytes 144696929257 (134.7 GiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 63763992  bytes 440974757980 (410.6 GiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    enp3s0f0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            RX packets 440900875  bytes 618959995723 (576.4 GiB)
            RX errors 0  dropped 26539  overruns 23364  frame 0
            TX packets 355860144  bytes 460253104060 (428.6 GiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

Proxmox Server:

- Versions:

Proxmox: 7.3-4

Linux prox 5.15.83-1-pve #1 SMP PVE 5.15.83-1 (2022-12-15T00:00Z) x86_64 GNU/Linux

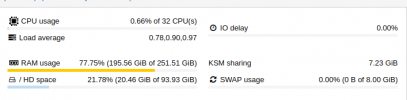

- Load:

- CPU:

32 x AMD EPYC 7302P 16-Core Processor (1 Socket)

- RAM:

256GB RAM

- Network:

2x Intel Corporation I210 Gigabit Network

Bash:

    bond0: flags=5187<UP,BROADCAST,RUNNING,MASTER,MULTICAST>  mtu 1500
            RX packets 743712487  bytes 566949300718 (528.0 GiB)
            RX errors 0  dropped 29806  overruns 0  frame 0
            TX packets 1000839911  bytes 1261282300328 (1.1 TiB)
            TX errors 0  dropped 40 overruns 0  carrier 0  collisions 0

    eno1: flags=6211<UP,BROADCAST,RUNNING,SLAVE,MULTICAST>  mtu 1500
            RX packets 154612760  bytes 58827206698 (54.7 GiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 777239716  bytes 1090377102252 (1015.4 GiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    eno2: flags=6211<UP,BROADCAST,RUNNING,SLAVE,MULTICAST>  mtu 1500
            RX packets 589162106  bytes 508138692762 (473.2 GiB)
            RX errors 0  dropped 1  overruns 0  frame 0
            TX packets 223670590  bytes 170947175225 (159.2 GiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
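As a quick sanity check on the RX dropped counters, here is a small Python sketch that turns them into a percentage of received packets. The numbers are copied from the interface statistics in this post; the dictionary layout and the name `rx_drop_percent` are only for illustration, and since the counters are cumulative since boot this says nothing about drops during the restore window itself.

```python
# Cumulative RX counters copied from the interface output in this post.
counters = {
    "PBS enp3s0f0": {"rx_packets": 440_900_875, "rx_dropped": 26_539},
    "Proxmox bond0": {"rx_packets": 743_712_487, "rx_dropped": 29_806},
}


def rx_drop_percent(stats: dict) -> float:
    """Dropped RX packets as a percentage of all received packets."""
    return 100 * stats["rx_dropped"] / stats["rx_packets"]


for name, stats in counters.items():
    print(f"{name}: {rx_drop_percent(stats):.4f}% of RX packets dropped")
```

Both values come out well below 0.01%, so I don't think packet drops alone explain the low throughput.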

I would really love to know what the bottleneck in this process is.

If more information is needed, I'm glad to provide it.

Greetings

- Rath
