Hello everyone!

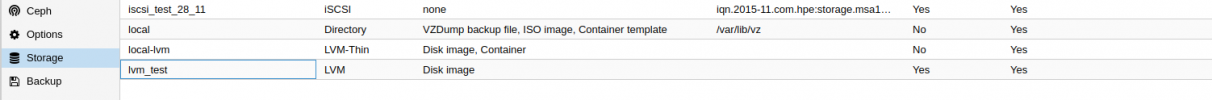

I'm having trouble setting up iSCSI with CHAP authentication on my Proxmox server.

I have an HPE MSA1060 disk array on which I created a CHAP authentication record:

- Initiator name: the IQN found on the Proxmox server in `/etc/iscsi/initiatorname.iscsi`
- Password: `test1234test`

On the Proxmox server, in `/etc/iscsi/iscsi.conf`, I set:

```
# To enable CHAP authentication set node.session.auth.authmethod
# to CHAP. The default is None.
node.session.auth.authmethod = CHAP

# To configure which CHAP algorithms to enable set
# node.session.auth.chap_algs to a comma seperated list.
# The algorithms should be listen with most prefered first.
# Valid values are MD5, SHA1, SHA256, and SHA3-256.
# The default is MD5.
#node.session.auth.chap_algs = SHA3-256,SHA256,SHA1,MD5

# To set a CHAP username and password for initiator
# authentication by the target(s), uncomment the following lines:
node.session.auth.username = username
node.session.auth.password = test1234test

# To set a CHAP username and password for target(s)
# authentication by the initiator, uncomment the following lines:
#node.session.auth.username_in = username_in
#node.session.auth.password_in = password_in

# To enable CHAP authentication for a discovery session to the target
# set discovery.sendtargets.auth.authmethod to CHAP. The default is None.
discovery.sendtargets.auth.authmethod = CHAP

# To set a discovery session CHAP username and password for the initiator
# authentication by the target(s), uncomment the following lines:
discovery.sendtargets.auth.username = username
discovery.sendtargets.auth.password = test1234test
```

When I run `lsscsi`, it does not find my HPE MSA.

When I run `iscsiadm -m node --portal "192.168.0.1" --login`, it returns:

```
Logging in to [iface: default, target: iqn.xxx.hpe:storage.msa1060.xxxxxxx, portal: 192.168.0.1,3260]
iscsiadm: Could not login to [iface: default, target: iqn.xxxxx.hpe:storage.msa1060.xxxxxxxx, portal: 192.168.0.1,3260].
iscsiadm: initiator reported error (24 - iSCSI login failed due to authorization failure)
iscsiadm: Could not log into all portals
```

(I changed the IP address and the IQN for this post.)
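One thing that may be worth ruling out (an assumption, not confirmed in this thread): open-iscsi uses the settings in its config file as defaults when a node record is created during discovery, so a node record discovered before CHAP was enabled can keep its old auth settings. The minimal Python sketch below only builds (and by default just prints) `iscsiadm -m node -o update` commands that write the three `node.session.auth.*` keys from the configuration above directly into an existing node record. The target IQN, portal, CHAP name and secret are placeholders copied from this post, not working values, and the helper names are hypothetical.

```python
"""Sketch: build iscsiadm commands that write CHAP settings into an
existing node record. All values passed in below are placeholders."""
import shlex
import subprocess

# Node-record keys, taken from the config excerpt in the post.
CHAP_KEYS = (
    "node.session.auth.authmethod",
    "node.session.auth.username",
    "node.session.auth.password",
)


def chap_update_commands(target, portal, username, password):
    """Return one argv list per key, using `iscsiadm -m node -o update`."""
    values = ("CHAP", username, password)
    return [
        ["iscsiadm", "-m", "node", "-T", target, "-p", portal,
         "-o", "update", "-n", key, "-v", value]
        for key, value in zip(CHAP_KEYS, values)
    ]


def run_commands(commands, dry_run=True):
    """Print the commands (dry run) or execute them, stopping on the first failure."""
    for argv in commands:
        if dry_run:
            print(shlex.join(argv))
        else:
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    # Hypothetical placeholders: substitute the real target IQN, portal,
    # CHAP name and secret that are configured on the array.
    cmds = chap_update_commands(
        target="iqn.xxx.hpe:storage.msa1060.xxxxxxx",
        portal="192.168.0.1:3260",
        username="username",
        password="test1234test",
    )
    run_commands(cmds, dry_run=True)
```

The dry-run default lets you review the commands before touching the node record; after applying them, the login command from the post can be retried. Note that passing the secret with `-v` makes it visible in the process list while the command runs.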

So I don't know how to make it work.

Can someone help me?

When I disable CHAP on the HPE MSA and comment out all the CHAP lines in `/etc/iscsi/iscsi.conf`, `lsscsi` finds the volumes from the MSA and the `iscsiadm` command returns no error.

So there is something I missed in the CHAP setup, but I don't know what.
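To compare the two states described above (CHAP lines active vs. commented out), it can help to list only the auth settings that are actually uncommented. This is a minimal Python sketch, assuming simple `key = value` lines as in the excerpt in the post. The stock open-iscsi file is `/etc/iscsi/iscsid.conf`, so that default path is an assumption; pass the file you actually edited as the first argument. Passwords are masked so the output can be pasted into a reply.

```python
"""Sketch: list the CHAP-related settings that are actually active
(uncommented) in an iscsid.conf-style file."""
import sys

# Prefixes of the auth keys shown in the config excerpt in the post.
AUTH_PREFIXES = ("node.session.auth.", "discovery.sendtargets.auth.")


def active_auth_settings(text):
    """Return {key: value} for uncommented `key = value` lines with an auth prefix."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blank lines, comments and non key = value lines
        key, value = (part.strip() for part in line.split("=", 1))
        if key.startswith(AUTH_PREFIXES):
            settings[key] = value
    return settings


if __name__ == "__main__":
    # Assumed default path; override it with the first command-line argument.
    path = sys.argv[1] if len(sys.argv) > 1 else "/etc/iscsi/iscsid.conf"
    try:
        with open(path, encoding="utf-8") as fh:
            text = fh.read()
    except OSError as exc:
        print(f"cannot read {path}: {exc}", file=sys.stderr)
        text = ""
    for key, value in sorted(active_auth_settings(text).items()):
        # Mask secrets before printing.
        shown = "****" if key.endswith(("password", "password_in")) else value
        print(f"{key} = {shown}")
```

For example, `python3 chap_check.py /etc/iscsi/iscsi.conf` (the script name is hypothetical) would show that, with the configuration in the post, `chap_algs` is not set (so the documented MD5 default applies) and only one-way CHAP is configured.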