@rickygm

I'm on-site right now!

As you asked, here is the info I collected:

---------------------------------------------------------

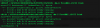

`dpkg -s multipath-tools | grep Version`

returns:

`Version: 0.5.0-6+deb8u2`

---------------------------------------------------------

`multipath -ll`

returns:

nothing, the output is empty.

---------------------------------------------------------

`multipath -d`

returns:

    create: mpatha (3600508b1001030393632423838300700) undef HP,LOGICAL VOLUME
    size=80G features='0' hwhandler='0' wp=undef
    `-+- policy='round-robin 0' prio=1 status=undef
      `- 3:1:0:0 sda 8:0 undef ready running
    create: mpathb (3600508b1001030393632423838300800) undef HP,LOGICAL VOLUME
    size=193G features='0' hwhandler='0' wp=undef
    `-+- policy='round-robin 0' prio=1 status=undef
      `- 3:1:0:1 sdb 8:16 undef ready running
    create: mpath0 (3600c0ff000271f5d02ff105701000000) undef HP,MSA 2040 SAN
    size=558G features='0' hwhandler='0' wp=undef
    `-+- policy='round-robin 0' prio=1 status=undef
      |- 2:0:0:0 sdc 8:32 undef ready running
      `- 4:0:0:0 sde 8:64 undef ready running
    create: mpath1 (3600c0ff00027217d28ff105701000000) undef HP,MSA 2040 SAN
    size=4.1T features='0' hwhandler='0' wp=undef
    `-+- policy='round-robin 0' prio=1 status=undef
      |- 2:0:0:1 sdd 8:48 undef ready running
      `- 4:0:0:1 sdf 8:80 undef ready running
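
To pick out just the maps built on the local HP LOGICAL VOLUME disks (the ones that shouldn't be multipathed), a small shell sketch like this could be run against a saved copy of the dry run. It's not tested on this server; `local_wwids` and the file path are only illustrative names, and it assumes the `create:` line format shown above.

```shell
#!/bin/sh
# Hypothetical helper: print alias and WWID of every map in a saved
# `multipath -d` dump whose vendor/product is "HP,LOGICAL VOLUME".
# Usage (assumed path): multipath -d > /tmp/multipath-dry-run.txt
#                       local_wwids /tmp/multipath-dry-run.txt
local_wwids() {
  awk '/^create:/ && /HP,LOGICAL VOLUME/ {
         gsub(/[()]/, "", $3)   # strip the parentheses around the WWID
         print $2, $3           # alias, WWID
       }' "$1"
}
```

On the output above it should list mpatha and mpathb with their WWIDs, and skip mpath0 and mpath1 (the MSA 2040 LUNs).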

---------------------------------------------------------

Here is our multipath.conf:

`cat /etc/multipath.conf`

    defaults {
        polling_interval 2
        path_selector "round-robin 0"
        path_grouping_policy multibus
        uid_attribute ID_SERIAL
        rr_min_io 100
        failback immediate
        no_path_retry queue
        user_friendly_names yes
    }

    blacklist {
        devnode "^(ram|raw|loop|fd|md|dm-|sr|scd|st)[0-9]*"
        devnode "^(td|hd)[a-z]"
        devnode "^dcssblk[0-9]*"
        devnode "^cciss!c[0-9]d[0-9]*"
        device {
            vendor "DGC"
            product "LUNZ"
        }
        device {
            vendor "EMC"
            product "LUNZ"
        }
        device {
            vendor "IBM"
            product "Universal Xport"
        }
        device {
            vendor "IBM"
            product "S/390.*"
        }
        device {
            vendor "DELL"
            product "Universal Xport"
        }
        device {
            vendor "SGI"
            product "Universal Xport"
        }
        device {
            vendor "STK"
            product "Universal Xport"
        }
        device {
            vendor "SUN"
            product "Universal Xport"
        }
        device {
            vendor "(NETAPP|LSI|ENGENIO)"
            product "Universal Xport"
        }
    }

    blacklist_exceptions {
        wwid "3600c0ff000271f5d02ff105701000000"
        wwid "3600c0ff00027217d28ff105701000000"
    }

    multipaths {
        multipath {
            wwid "3600c0ff000271f5d02ff105701000000"
            alias mpath0
        }
        multipath {
            wwid "3600c0ff00027217d28ff105701000000"
            alias mpath1
        }
    }

---------------------------------------------------------

In the `multipath -d` dry run I noticed that the local, single-path devices (sda and sdb) are listed as mpatha and mpathb.

I think changing the second `devnode` line in the blacklist section as follows might fix this:

    devnode "^(td|hd|sd)[a-z]"
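
Before changing it, a quick sanity check of the proposed pattern could be useful. This sketch uses `grep -E` as a stand-in for multipath's own regex matching against the kernel device name (an assumption on my side, not multipath's code) and runs it over the device names from the dry run; `check_devnode` is just a hypothetical helper.

```shell
#!/bin/sh
# Proposed devnode pattern from the blacklist change above.
proposed='^(td|hd|sd)[a-z]'

# Hypothetical helper: report whether a kernel device name matches the
# proposed pattern (grep -E approximates multipath's regex matching).
check_devnode() {
  if printf '%s\n' "$1" | grep -Eq "$proposed"; then
    echo "$1 blacklisted"
  else
    echo "$1 not blacklisted"
  fi
}

# Device names seen in the `multipath -d` output.
for dev in sda sdb sdc sdd sde sdf; do
  check_devnode "$dev"
done
```

All six names match, including sdc to sdf, which are the MSA 2040 paths behind mpath0 and mpath1. I'm not sure whether the wwid entries in blacklist_exceptions are enough to keep those paths in, so that's worth confirming too.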

Looking forward to your thoughts on our config!

Regards,

Francesco