Hi there,

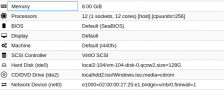

The Proxmox UI shows memory usage of 98.07%, and htop confirms that about 95% of memory is in use.

I have 32 GB of total memory and the following VMs:

vm1: 6 GB used of 8 GB

vm2: 1 GB used of 2 GB

vm3: 2 GB used of 4 GB

and containers:

ct1: 1 GB used of 2 GB

ct2: 0.5 GB used of 2 GB

ct3: 2 GB used of 4 GB

- Maximum memory allocated to containers and VMs: 8+2+4+2+2+4 = 22 GB

- Current memory usage of containers and VMs: 6+1+2+1+0.5+2 = 12.5 GB
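To make the gap explicit, here is a rough sketch of the same arithmetic in Python. The figures are the ones listed above; the 95% value is the approximate htop reading, and the names (`guests`, `summarize`) are only illustrative, not anything Proxmox provides.

```python
# Figures copied from the post, in GB: name -> (used, allocated).
HOST_TOTAL_GB = 32
HOST_USED_FRACTION = 0.95  # approximate htop reading, an assumption

guests = {
    "vm1": (6, 8),
    "vm2": (1, 2),
    "vm3": (2, 4),
    "ct1": (1, 2),
    "ct2": (0.5, 2),
    "ct3": (2, 4),
}


def summarize(guests, host_total_gb, host_used_fraction):
    """Return allocated, guest-used, host-used and unaccounted memory in GB."""
    allocated = sum(alloc for _, alloc in guests.values())
    guest_used = sum(used for used, _ in guests.values())
    host_used = host_total_gb * host_used_fraction
    return {
        "allocated_gb": allocated,
        "guest_used_gb": guest_used,
        "host_used_gb": round(host_used, 1),
        # Memory the host reports as used but the guests do not account for.
        "unaccounted_gb": round(host_used - guest_used, 1),
    }


summary = summarize(guests, HOST_TOTAL_GB, HOST_USED_FRACTION)
for key, value in summary.items():
    print(f"{key}: {value}")
```

Under these numbers, roughly 18 GB of the memory reported as used on the host is not explained by what the guests themselves report.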

The problem is: how is it possible that my Proxmox server uses all the RAM, given what the containers and VMs actually use and are allocated?

When I shut down a container, the total RAM usage doesn't change. When I shut down a VM, the RAM is freed up accordingly. But that doesn't explain the 95%+ memory usage.

Do you have any pointers, please?

Q. M.