Hi!

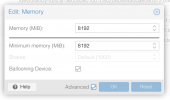

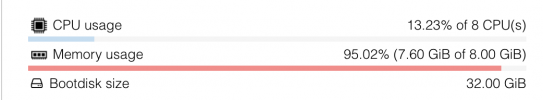

I'm using Proxmox 7.0. It reports that my openmediavault VM uses 95.32% (7.63 GiB of 8.00 GiB) of RAM, whereas OMV claims to be using only 229M of 7.77G.

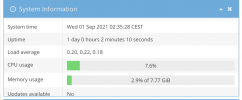

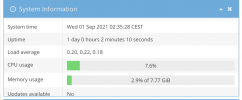

OMV reports:

This is confirmed by:

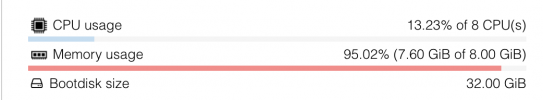

However, Proxmox claims that the memory is running out:

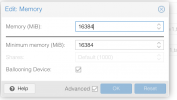

The other VM, running Ubuntu Server, shows a mismatch too: Proxmox reports 20.38% (3.26 GiB of 16.00 GiB), whereas the OS claims 2.1Gi. That is still worrisome, albeit less so.
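To put the size of the mismatch into numbers, here is a minimal sketch that uses only the figures quoted in this post. It assumes OMV's "229M" means MiB and the Ubuntu "2.1Gi" means GiB. For the OMV VM the gap comes out at roughly 7.4 GiB, which is close to the 7.4Gi that `free -h` inside that VM lists under `buff/cache`. That may be worth checking, but the arithmetic alone does not prove what Proxmox is counting.

```python
# Figures copied from the post. Assumption: OMV's "229M" is MiB, Ubuntu's "2.1Gi" is GiB.
MIB_PER_GIB = 1024

vms = {
    # name: (host-reported used GiB, VM total GiB, guest-reported used GiB)
    "openmediavault": (7.63, 8.00, 229 / MIB_PER_GIB),
    "ubuntu-server": (3.26, 16.00, 2.1),
}


def gap_report(host_used: float, total: float, guest_used: float) -> tuple[float, float, float]:
    """Return (host-side %, guest-side %, host minus guest in GiB) for one VM."""
    return (
        100 * host_used / total,
        100 * guest_used / total,
        host_used - guest_used,
    )


for name, (host_used, total, guest_used) in vms.items():
    host_pct, guest_pct, gap = gap_report(host_used, total, guest_used)
    print(f"{name}: host {host_pct:.2f}%, guest {guest_pct:.2f}%, gap {gap:.2f} GiB")
```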

What's going on? How can I diagnose the issue?

Output of `free -h` inside the OMV VM:

```
# free -h
              total        used        free      shared  buff/cache   available
Mem:          7.8Gi       213Mi       151Mi        13Mi       7.4Gi       7.3Gi
Swap:           9Gi       0.0Ki         9Gi
```
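To see where the `used` and `buff/cache` columns come from, they can be recomputed from `/proc/meminfo` inside the guest. This is a minimal sketch, assuming the classic procps formulas (`buff/cache` = Buffers + Cached + SReclaimable, `used` = total - free - buff/cache), which do reproduce the output above within rounding; newer procps releases may compute `used` differently. The `touched` value (total minus free) is a hypothetical extra figure, included on the assumption that the host cannot tell guest page cache apart from application memory unless the guest reports it.

```python
"""Split guest RAM into application use and reclaimable cache (sketch).

Assumes the classic procps `free` formulas; `touched` is a hypothetical
helper figure, not something `free` itself prints.
"""
from pathlib import Path


def parse_meminfo(text: str) -> dict[str, int]:
    """Parse /proc/meminfo text into {field: value in KiB}."""
    fields = {}
    for line in text.splitlines():
        if ":" not in line:
            continue
        key, rest = line.split(":", 1)
        parts = rest.split()
        if parts:
            fields[key.strip()] = int(parts[0])
    return fields


def breakdown(fields: dict[str, int]) -> dict[str, int]:
    """Return free-style columns in KiB, plus the hypothetical 'touched' figure."""
    total = fields["MemTotal"]
    free = fields["MemFree"]
    buff_cache = fields["Buffers"] + fields["Cached"] + fields.get("SReclaimable", 0)
    return {
        "total": total,
        "used": total - free - buff_cache,
        "free": free,
        "buff_cache": buff_cache,
        # Older kernels lack MemAvailable; fall back to a rough estimate.
        "available": fields.get("MemAvailable", free + buff_cache),
        # Everything the guest has used at all, applications plus cache.
        "touched": total - free,
    }


if __name__ == "__main__" and Path("/proc/meminfo").exists():
    info = breakdown(parse_meminfo(Path("/proc/meminfo").read_text()))
    for name, kib in info.items():
        print(f"{name:>10}: {kib / 1024 ** 2:6.2f} GiB")
```

Running it inside the OMV VM should show `touched` close to the whole 7.8Gi whenever `buff/cache` is large, even though `used` stays small.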
