Hi all,

I've big problems on replication of all vms since the last proxmox update. I've deleted all replication schedules and the replicated volumes.

My ZFS pool is not on heavy load, all time between 0 and 10%, but no replication is ending successfully.

Another question is, why are the replication logs in the gui are always ending after 2 minutes???

E.g. there is a Winserver 2019 VM with 3 Volumes, 200, 600 und 1500 GB. The replication starts with the first volume and with zfs list I can see after a while, that the base volume is replicated well, but the replication snapshot will not find its way to the destination pool.

No heavy pool load and if I set the network throttle in the replication job to 25 MB/s, it's not changing anything.

For over a year now the process ran perfectly, with no errors, but since the last update it's going wrong.

I'm running Linux 5.13.19-1-pve #1 SMP PVE 5.13.19-2 (Tue, 09 Nov 2021 12:59:38 +0100) on both cluster nodes.

2021-11-26 13:42:00 100-0: start replication job

2021-11-26 13:42:00 100-0: guest => VM 100, running => 5041

2021-11-26 13:42:00 100-0: volumes => local-zfs:vm-100-disk-0,local-zfs:vm-100-disk-1,local-zfs:vm-100-disk-3

2021-11-26 13:42:01 100-0: freeze guest filesystem

2021-11-26 13:42:10 100-0: create snapshot '__replicate_100-0_1637930520__' on local-zfs:vm-100-disk-0

2021-11-26 13:42:10 100-0: create snapshot '__replicate_100-0_1637930520__' on local-zfs:vm-100-disk-1

2021-11-26 13:42:11 100-0: create snapshot '__replicate_100-0_1637930520__' on local-zfs:vm-100-disk-3

2021-11-26 13:42:11 100-0: thaw guest filesystem

2021-11-26 13:42:12 100-0: using secure transmission, rate limit: 25 MByte/s

2021-11-26 13:42:12 100-0: full sync 'local-zfs:vm-100-disk-0' (__replicate_100-0_1637930520__)

2021-11-26 13:42:12 100-0: using a bandwidth limit of 25000000 bps for transferring 'local-zfs:vm-100-disk-0'

2021-11-26 13:42:13 100-0: full send of rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__ estimated size is 111G

2021-11-26 13:42:13 100-0: total estimated size is 111G

2021-11-26 13:42:14 100-0: TIME SENT SNAPSHOT rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:14 100-0: 13:42:14 38.3M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:15 100-0: 13:42:15 62.2M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:16 100-0: 13:42:16 86.0M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:17 100-0: 13:42:17 110M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:18 100-0: 13:42:18 134M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:19 100-0: 13:42:19 158M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:20 100-0: 13:42:20 181M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:21 100-0: 13:42:21 201M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:22 100-0: 13:42:22 220M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:23 100-0: 13:42:23 237M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:24 100-0: 13:42:24 248M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:25 100-0: 13:42:25 262M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:26 100-0: 13:42:26 322M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:27 100-0: 13:42:27 328M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:28 100-0: 13:42:28 355M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:29 100-0: 13:42:29 374M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:30 100-0: 13:42:30 383M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:31 100-0: 13:42:31 396M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:32 100-0: 13:42:32 429M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:33 100-0: 13:42:33 437M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:34 100-0: 13:42:34 447M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:35 100-0: 13:42:35 482M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:36 100-0: 13:42:36 500M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:37 100-0: 13:42:37 519M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:38 100-0: 13:42:38 541M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:39 100-0: 13:42:39 556M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:40 100-0: 13:42:40 572M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:41 100-0: 13:42:41 575M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:42 100-0: 13:42:42 591M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:43 100-0: 13:42:43 609M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:44 100-0: 13:42:44 610M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:45 100-0: 13:42:45 627M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:46 100-0: 13:42:46 649M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:47 100-0: 13:42:47 666M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:48 100-0: 13:42:48 743M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:49 100-0: 13:42:49 768M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:50 100-0: 13:42:50 812M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:51 100-0: 13:42:51 837M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:52 100-0: 13:42:52 887M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:53 100-0: 13:42:53 957M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:54 100-0: 13:42:54 977M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:55 100-0: 13:42:55 994M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:56 100-0: 13:42:56 1.01G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:57 100-0: 13:42:57 1.02G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:58 100-0: 13:42:58 1.04G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:59 100-0: 13:42:59 1.05G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:00 100-0: 13:43:00 1.10G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:01 100-0: 13:43:01 1.13G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:02 100-0: 13:43:02 1.16G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:03 100-0: 13:43:03 1.18G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:04 100-0: 13:43:04 1.20G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:05 100-0: 13:43:05 1.22G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:06 100-0: 13:43:06 1.25G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:07 100-0: 13:43:07 1.27G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:08 100-0: 13:43:08 1.29G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:09 100-0: 13:43:09 1.32G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:10 100-0: 13:43:10 1.32G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:11 100-0: 13:43:11 1.33G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:12 100-0: 13:43:12 1.36G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:13 100-0: 13:43:13 1.37G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:14 100-0: 13:43:14 1.40G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:15 100-0: 13:43:15 1.41G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:16 100-0: 13:43:16 1.42G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:17 100-0: 13:43:17 1.42G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:18 100-0: 13:43:18 1.44G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:19 100-0: 13:43:19 1.44G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:20 100-0: 13:43:20 1.47G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:21 100-0: 13:43:21 1.48G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:22 100-0: 13:43:22 1.49G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:23 100-0: 13:43:23 1.52G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:24 100-0: 13:43:24 1.53G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:25 100-0: 13:43:25 1.54G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:26 100-0: 13:43:26 1.56G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:27 100-0: 13:43:27 1.57G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:28 100-0: 13:43:28 1.59G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:29 100-0: 13:43:29 1.60G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:30 100-0: 13:43:30 1.62G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:31 100-0: 13:43:31 1.65G rpool/

log is always stopping here after 2 minutes.

In Proxmox 6 I was able to run the same replication job every 15 minutes without a network throttle and all was fine.

What is happenig here? Why is the job ending in nowhere after the sync of the the first base volume of three?

What are your jobs doing after upgrade to proxmox 7.1 and especially to the version Linux 5.13.19-1-pve #1 SMP PVE 5.13.19-2 (Tue, 09 Nov 2021 12:59:38 +0100)?

Do you have any ideas, how to examine the process better? Or it's a mistake on my side?

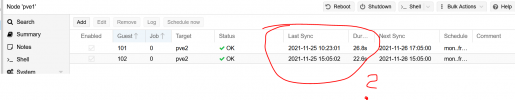

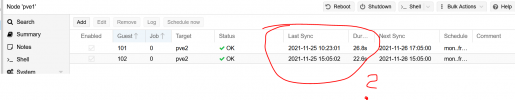

The other node in the same cluster, shows these entries in replication, that it's all o.k. but the last replication was days before, it's triggered to replicate every 3 hours in a defined time window.

but the log tells me, e.g. for VM 101, that the job has run 26.11.2021, again on this machine.... the log stops after 2 minutes.

For now I can't thrust the replication process any more. I think it's a very buggy feature since the last upgrade.

2021-11-26 14:05:00 101-0: start replication job

2021-11-26 14:05:00 101-0: guest => VM 101, running => 30559

2021-11-26 14:05:00 101-0: volumes => local-zfs:vm-101-disk-0,local-zfs:vm-101-disk-2

2021-11-26 14:05:00 101-0: delete stale replication snapshot '__replicate_101-0_1637921106__' on local-zfs:vm-101-disk-0

2021-11-26 14:05:01 101-0: delete stale replication snapshot '__replicate_101-0_1637921106__' on local-zfs:vm-101-disk-2

2021-11-26 14:05:01 101-0: (remote_prepare_local_job) delete stale replication snapshot '__replicate_101-0_1637921106__' on local-zfs:vm-101-disk-0

2021-11-26 14:05:02 101-0: freeze guest filesystem

2021-11-26 14:05:23 101-0: create snapshot '__replicate_101-0_1637931900__' on local-zfs:vm-101-disk-0

2021-11-26 14:05:23 101-0: create snapshot '__replicate_101-0_1637931900__' on local-zfs:vm-101-disk-2

2021-11-26 14:05:23 101-0: thaw guest filesystem

2021-11-26 14:05:24 101-0: using secure transmission, rate limit: 25 MByte/s

2021-11-26 14:05:24 101-0: incremental sync 'local-zfs:vm-101-disk-0' (__replicate_101-0_1637832181__ => __replicate_101-0_1637931900__)

2021-11-26 14:05:24 101-0: using a bandwidth limit of 25000000 bps for transferring 'local-zfs:vm-101-disk-0'

2021-11-26 14:05:25 101-0: send from @__replicate_101-0_1637832181__ to rpool/data/vm-101-disk-0@__replicate_101-0_1637931900__ estimated size is 7.97G

2021-11-26 14:05:25 101-0: total estimated size is 7.97G

2021-11-26 14:05:26 101-0: TIME SENT SNAPSHOT rpool/data/vm-101-disk-0@__replicate_101-0_1637931900__

I've big problems on replication of all vms since the last proxmox update. I've deleted all replication schedules and the replicated volumes.

My ZFS pool is not on heavy load, all time between 0 and 10%, but no replication is ending successfully.

Another question is, why are the replication logs in the gui are always ending after 2 minutes???

E.g. there is a Winserver 2019 VM with 3 Volumes, 200, 600 und 1500 GB. The replication starts with the first volume and with zfs list I can see after a while, that the base volume is replicated well, but the replication snapshot will not find its way to the destination pool.

No heavy pool load and if I set the network throttle in the replication job to 25 MB/s, it's not changing anything.

For over a year now the process ran perfectly, with no errors, but since the last update it's going wrong.

I'm running Linux 5.13.19-1-pve #1 SMP PVE 5.13.19-2 (Tue, 09 Nov 2021 12:59:38 +0100) on both cluster nodes.

2021-11-26 13:42:00 100-0: start replication job

2021-11-26 13:42:00 100-0: guest => VM 100, running => 5041

2021-11-26 13:42:00 100-0: volumes => local-zfs:vm-100-disk-0,local-zfs:vm-100-disk-1,local-zfs:vm-100-disk-3

2021-11-26 13:42:01 100-0: freeze guest filesystem

2021-11-26 13:42:10 100-0: create snapshot '__replicate_100-0_1637930520__' on local-zfs:vm-100-disk-0

2021-11-26 13:42:10 100-0: create snapshot '__replicate_100-0_1637930520__' on local-zfs:vm-100-disk-1

2021-11-26 13:42:11 100-0: create snapshot '__replicate_100-0_1637930520__' on local-zfs:vm-100-disk-3

2021-11-26 13:42:11 100-0: thaw guest filesystem

2021-11-26 13:42:12 100-0: using secure transmission, rate limit: 25 MByte/s

2021-11-26 13:42:12 100-0: full sync 'local-zfs:vm-100-disk-0' (__replicate_100-0_1637930520__)

2021-11-26 13:42:12 100-0: using a bandwidth limit of 25000000 bps for transferring 'local-zfs:vm-100-disk-0'

2021-11-26 13:42:13 100-0: full send of rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__ estimated size is 111G

2021-11-26 13:42:13 100-0: total estimated size is 111G

2021-11-26 13:42:14 100-0: TIME SENT SNAPSHOT rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:14 100-0: 13:42:14 38.3M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:15 100-0: 13:42:15 62.2M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:16 100-0: 13:42:16 86.0M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:17 100-0: 13:42:17 110M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:18 100-0: 13:42:18 134M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:19 100-0: 13:42:19 158M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:20 100-0: 13:42:20 181M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:21 100-0: 13:42:21 201M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:22 100-0: 13:42:22 220M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:23 100-0: 13:42:23 237M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:24 100-0: 13:42:24 248M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:25 100-0: 13:42:25 262M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:26 100-0: 13:42:26 322M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:27 100-0: 13:42:27 328M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:28 100-0: 13:42:28 355M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:29 100-0: 13:42:29 374M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:30 100-0: 13:42:30 383M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:31 100-0: 13:42:31 396M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:32 100-0: 13:42:32 429M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:33 100-0: 13:42:33 437M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:34 100-0: 13:42:34 447M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:35 100-0: 13:42:35 482M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:36 100-0: 13:42:36 500M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:37 100-0: 13:42:37 519M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:38 100-0: 13:42:38 541M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:39 100-0: 13:42:39 556M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:40 100-0: 13:42:40 572M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:41 100-0: 13:42:41 575M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:42 100-0: 13:42:42 591M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:43 100-0: 13:42:43 609M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:44 100-0: 13:42:44 610M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:45 100-0: 13:42:45 627M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:46 100-0: 13:42:46 649M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:47 100-0: 13:42:47 666M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:48 100-0: 13:42:48 743M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:49 100-0: 13:42:49 768M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:50 100-0: 13:42:50 812M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:51 100-0: 13:42:51 837M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:52 100-0: 13:42:52 887M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:53 100-0: 13:42:53 957M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:54 100-0: 13:42:54 977M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:55 100-0: 13:42:55 994M rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:56 100-0: 13:42:56 1.01G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:57 100-0: 13:42:57 1.02G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:58 100-0: 13:42:58 1.04G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:42:59 100-0: 13:42:59 1.05G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:00 100-0: 13:43:00 1.10G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:01 100-0: 13:43:01 1.13G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:02 100-0: 13:43:02 1.16G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:03 100-0: 13:43:03 1.18G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:04 100-0: 13:43:04 1.20G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:05 100-0: 13:43:05 1.22G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:06 100-0: 13:43:06 1.25G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:07 100-0: 13:43:07 1.27G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:08 100-0: 13:43:08 1.29G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:09 100-0: 13:43:09 1.32G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:10 100-0: 13:43:10 1.32G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:11 100-0: 13:43:11 1.33G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:12 100-0: 13:43:12 1.36G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:13 100-0: 13:43:13 1.37G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:14 100-0: 13:43:14 1.40G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:15 100-0: 13:43:15 1.41G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:16 100-0: 13:43:16 1.42G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:17 100-0: 13:43:17 1.42G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:18 100-0: 13:43:18 1.44G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:19 100-0: 13:43:19 1.44G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:20 100-0: 13:43:20 1.47G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:21 100-0: 13:43:21 1.48G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:22 100-0: 13:43:22 1.49G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:23 100-0: 13:43:23 1.52G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:24 100-0: 13:43:24 1.53G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:25 100-0: 13:43:25 1.54G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:26 100-0: 13:43:26 1.56G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:27 100-0: 13:43:27 1.57G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:28 100-0: 13:43:28 1.59G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:29 100-0: 13:43:29 1.60G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:30 100-0: 13:43:30 1.62G rpool/data/vm-100-disk-0@__replicate_100-0_1637930520__

2021-11-26 13:43:31 100-0: 13:43:31 1.65G rpool/

log is always stopping here after 2 minutes.

In Proxmox 6 I was able to run the same replication job every 15 minutes without a network throttle and all was fine.

What is happenig here? Why is the job ending in nowhere after the sync of the the first base volume of three?

What are your jobs doing after upgrade to proxmox 7.1 and especially to the version Linux 5.13.19-1-pve #1 SMP PVE 5.13.19-2 (Tue, 09 Nov 2021 12:59:38 +0100)?

Do you have any ideas, how to examine the process better? Or it's a mistake on my side?

The other node in the same cluster, shows these entries in replication, that it's all o.k. but the last replication was days before, it's triggered to replicate every 3 hours in a defined time window.

but the log tells me, e.g. for VM 101, that the job has run 26.11.2021, again on this machine.... the log stops after 2 minutes.

For now I can't thrust the replication process any more. I think it's a very buggy feature since the last upgrade.

2021-11-26 14:05:00 101-0: start replication job

2021-11-26 14:05:00 101-0: guest => VM 101, running => 30559

2021-11-26 14:05:00 101-0: volumes => local-zfs:vm-101-disk-0,local-zfs:vm-101-disk-2

2021-11-26 14:05:00 101-0: delete stale replication snapshot '__replicate_101-0_1637921106__' on local-zfs:vm-101-disk-0

2021-11-26 14:05:01 101-0: delete stale replication snapshot '__replicate_101-0_1637921106__' on local-zfs:vm-101-disk-2

2021-11-26 14:05:01 101-0: (remote_prepare_local_job) delete stale replication snapshot '__replicate_101-0_1637921106__' on local-zfs:vm-101-disk-0

2021-11-26 14:05:02 101-0: freeze guest filesystem

2021-11-26 14:05:23 101-0: create snapshot '__replicate_101-0_1637931900__' on local-zfs:vm-101-disk-0

2021-11-26 14:05:23 101-0: create snapshot '__replicate_101-0_1637931900__' on local-zfs:vm-101-disk-2

2021-11-26 14:05:23 101-0: thaw guest filesystem

2021-11-26 14:05:24 101-0: using secure transmission, rate limit: 25 MByte/s

2021-11-26 14:05:24 101-0: incremental sync 'local-zfs:vm-101-disk-0' (__replicate_101-0_1637832181__ => __replicate_101-0_1637931900__)

2021-11-26 14:05:24 101-0: using a bandwidth limit of 25000000 bps for transferring 'local-zfs:vm-101-disk-0'

2021-11-26 14:05:25 101-0: send from @__replicate_101-0_1637832181__ to rpool/data/vm-101-disk-0@__replicate_101-0_1637931900__ estimated size is 7.97G

2021-11-26 14:05:25 101-0: total estimated size is 7.97G

2021-11-26 14:05:26 101-0: TIME SENT SNAPSHOT rpool/data/vm-101-disk-0@__replicate_101-0_1637931900__

Last edited: