Hi all! This is my first post to the forum, though I've been a lurker for years as I sharpen my Proxmox skills.

I'm currently working on getting MPIO and ALUA working with a TrueNAS Enterprise HA unit. I'm using TheGrandWazoo's freenas-proxmox plugin for WebGUI integration with TrueNAS.

Proxmox Implementation

ver 6.4-13

I created OVSInt vlan interfaces for each of the 2 iSCSI networks, and have working networking between all interfaces of the TrueNAS unit (2 interfaces per TrueNAS node).

I've followed instructions at Storage:_ZFS_over_iSCSI and do have ZFS-over-iSCSI working successfully, pre MPIO + ALUA implementation.

I've also followed instructions at iSCSI_Multipath and at freenas-proxmox multipath. The iSCSI sessions login at boot correctly.

Output of

My current multipath.conf is

Output of

This was actually a huge feat. Figuring out how to get the active/passive TrueNAS nodes in their own ALUA TPGs took forever, and I only realized how to do that when I saw this random CEPH documentation where they use

Output of

TrueNAS Implementation

ver 12.0-U6

NOTE: TrueNAS (at least this unit) does HA as Active/Passive

ZFS-over-iSCSI works, so I'm going to skip config documentation about datasets and zvols

Target Global Config: defaults, except that "Enable iSCSI ALUA" is checked

Portals: 1 configured portal for all iSCSI interfaces (2 on each TrueNAS node)

Initiator groups: configured for my proxmox nodes. Again, ZFS-over-iSCSI works so this shouldn't matter

Authorized Access: unconfigured

Targets: target is created, mapping the portal group to the initiator group

Extends + associated targets: 1 extend + associated target is created per VM disk via the freenas-proxmox plugin

Behavior:

VM disks can be created and destroyed, but that's because freenas-proxmox uses the FreeNAS API to create/destroy zvols/extends/associated-targets

Pre-config of multipathd, iSCSI-over-ZFS works to run a VM. The problem is that as far as I can tell, Proxmox only uses the path of the single portal IP you give it in the WebGUI

Once multipathd is installed and configured, several things start occuring:

1 - multipathd seems to affect cluster health. The node I'm working on will occasionally register as "?" in the WebGUI. Corosync can still communicate, and

2 - The WebGUI for the dev node get's very slow. It slows down my entire browser. Process utilization on my laptop and on the dev node is low. Maybe this is low dev node I/O, since this dev node is nested, but no VMs are running on the dev node

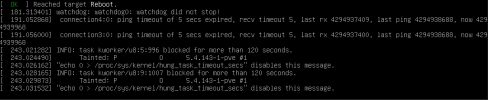

3 - with multipathd running, sometimes the node fails to do a graceful reboot, see attached screenshot

4 - syslog gets flooded with

5 - MPIO seems to work, but ALUA has issues.

The output of

With the above posted output of

and the rescanning takes 10 minutes

If I rollback the node and re-configure iscsi to only login to the active TrueNAS node, and

VMs turn on quickly and without fail. I can even drop a path by doing

and VMs will still run.

This seems to be some kind of bad ALUA negotiation between the Proxmox dev node and the TrueNAS unit, but for the life of me I can't figure out what to do about it. Can anyone offer some guidance?

I haven't tried migration or cloning, yet. Figured this should be 1 step at a time

Happy to post any further info or logs.

I'm currently trying to get MPIO and ALUA working with a TrueNAS Enterprise HA unit. I'm using TheGrandWazoo's freenas-proxmox plugin for WebGUI integration with TrueNAS.

Proxmox Implementation

ver 6.4-13

I created OVSInt VLAN interfaces for each of the two iSCSI networks, and have working networking between all interfaces of the TrueNAS unit (2 interfaces per TrueNAS node).

I've followed the instructions at Storage:_ZFS_over_iSCSI and had ZFS-over-iSCSI working successfully before implementing MPIO + ALUA.

I've also followed the instructions at iSCSI_Multipath and the freenas-proxmox multipath instructions. The iSCSI sessions log in correctly at boot.
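In the session list below, the number after the comma is the target portal group tag: the .252 portals come in on tag 1 and the .253 portals on tag 32769, which is how the two TrueNAS nodes can be told apart. The helper below is a hypothetical sketch (not part of open-iscsi or Proxmox) that groups sessions by that tag; its regex assumes exactly the `iscsiadm -m session` line format shown in this post.

```python
import re
from collections import defaultdict

# Matches lines like:
# tcp: [1] 172.16.9.252:3260,1 iqn.2021-10.org.truenas.ctl:proxmox-vms-target (non-flash)
SESSION_RE = re.compile(
    r"^(?P<transport>\w+): \[(?P<sid>\d+)\] "
    r"(?P<ip>[\d.]+):(?P<port>\d+),(?P<tpgt>\d+) (?P<iqn>\S+)"
)


def group_sessions_by_tpgt(output):
    """Return {portal group tag: sorted portal IPs} from `iscsiadm -m session` text."""
    groups = defaultdict(list)
    for line in output.splitlines():
        m = SESSION_RE.match(line.strip())
        if m:  # silently skip anything that isn't a session line
            groups[int(m.group("tpgt"))].append(m.group("ip"))
    return {tag: sorted(ips) for tag, ips in sorted(groups.items())}


SAMPLE = """\
tcp: [1] 172.16.9.252:3260,1 iqn.2021-10.org.truenas.ctl:proxmox-vms-target (non-flash)
tcp: [2] 172.16.10.252:3260,1 iqn.2021-10.org.truenas.ctl:proxmox-vms-target (non-flash)
tcp: [3] 172.16.10.253:3260,32769 iqn.2021-10.org.truenas.ctl:proxmox-vms-target (non-flash)
tcp: [4] 172.16.9.253:3260,32769 iqn.2021-10.org.truenas.ctl:proxmox-vms-target (non-flash)
"""

if __name__ == "__main__":
    for tag, ips in group_sessions_by_tpgt(SAMPLE).items():
        print(tag, ips)
```

If the tags ever came back identical for both nodes, multipath's ALUA grouping would be the first thing to suspect.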

Output of `iscsiadm -m session`:

```
tcp: [1] 172.16.9.252:3260,1 iqn.2021-10.org.truenas.ctl:proxmox-vms-target (non-flash)
tcp: [2] 172.16.10.252:3260,1 iqn.2021-10.org.truenas.ctl:proxmox-vms-target (non-flash)
tcp: [3] 172.16.10.253:3260,32769 iqn.2021-10.org.truenas.ctl:proxmox-vms-target (non-flash)
tcp: [4] 172.16.9.253:3260,32769 iqn.2021-10.org.truenas.ctl:proxmox-vms-target (non-flash)
```

My current multipath.conf is:

```
defaults {
    polling_interval 2
    #path_selector "round-robin 0" # tried this until I made the device stanza
    path_grouping_policy group_by_prio
    uid_attribute ID_SERIAL
    #rr_min_io 100
    failback immediate
    no_path_retry 1 # I've tried this as queue as well
    user_friendly_names yes
    #features "0" # I've tried this on and off
    find_multipaths off # I've tried on and off
    prio "alua"
    detect_prio yes
    #verbosity 1
    log_checker_err once # I used this to try to stop my logs being flooded with tur checker errors
}

blacklist {
    #devnode "^(ram|raw|loop|fd|md|dm-|sr|scd|st)[0-9]*"
    devnode "^(ram|raw|loop|fd|md|sr|scd|st)[0-9]*"
    devnode "^hd[a-z][[0-9]*]"
    devnode "^sda[[0-9]*]"
    devnode "^cciss!c[0-9]d[0-9]*"
    devnode "pve-swap"
    devnode "pve-data"
    devnode "sda"
}

devices {
    device {
        vendor "TrueNAS"
        product "iSCSI Disk"
        path_grouping_policy group_by_prio # I've also tried group_by_node_name, multibus, and failover
        path_selector "queue-length 0"
        hardware_handler "1 alua"
        rr_weight priorities
        prio_args exclusive_pref_bit # this is supposed to force paths in the same TPG to always be in the same path
    }
}
```

Output of `multipath -ll`:

```
mpathb (36589cfc000000a896cf1f2ed9f2f239e) dm-6 TrueNAS,iSCSI Disk
size=32G features='1 queue_if_no_path' hwhandler='1 alua' wp=rw
|-+- policy='service-time 0' prio=50 status=active
| |- 3:0:0:1 sdf 8:80 active ready running
| `- 4:0:0:1 sdg 8:96 active ready running
`-+- policy='service-time 0' prio=0 status=enabled
  |- 6:0:0:1 sdh 8:112 failed faulty running
  `- 5:0:0:1 sdi 8:128 failed faulty running
mpatha (36589cfc0000000f0e3747c8d6cc45c31) dm-5 TrueNAS,iSCSI Disk
size=32G features='1 queue_if_no_path' hwhandler='1 alua' wp=rw
|-+- policy='service-time 0' prio=50 status=active
| |- 4:0:0:0 sdc 8:32 active ready running
| `- 3:0:0:0 sdb 8:16 active ready running
`-+- policy='service-time 0' prio=0 status=enabled
  |- 6:0:0:0 sde 8:64 failed faulty running
  `- 5:0:0:0 sdd 8:48 failed faulty running
```

This was actually a huge feat. Figuring out how to get the active/passive TrueNAS nodes into their own ALUA TPGs took forever, and I only realized how to do it when I came across some random Ceph documentation where they use `prio_args exclusive_pref_bit`.

Output of `multipath -t`:

```
defaults {
    verbosity 2
    polling_interval 2
    max_polling_interval 8
    reassign_maps "no"
    multipath_dir "//lib/multipath"
    path_selector "service-time 0"
    path_grouping_policy "group_by_prio"
    uid_attribute "ID_SERIAL"
    prio "alua"
    prio_args ""
    features "0"
    path_checker "tur"
    alias_prefix "mpath"
    failback "immediate"
    rr_min_io 1000
    rr_min_io_rq 1
    max_fds "max"
    rr_weight "uniform"
    no_path_retry 1
    queue_without_daemon "no"
    flush_on_last_del "no"
    user_friendly_names "yes"
    fast_io_fail_tmo 5
    bindings_file "/etc/multipath/bindings"
    wwids_file "/etc/multipath/wwids"
    prkeys_file "/etc/multipath/prkeys"
    log_checker_err once
    all_tg_pt "no"
    retain_attached_hw_handler "yes"
    detect_prio "yes"
    detect_checker "yes"
    force_sync "no"
    strict_timing "no"
    deferred_remove "no"
    config_dir "/etc/multipath/conf.d"
    delay_watch_checks "no"
    delay_wait_checks "no"
    marginal_path_err_sample_time "no"
    marginal_path_err_rate_threshold "no"
    marginal_path_err_recheck_gap_time "no"
    marginal_path_double_failed_time "no"
    find_multipaths "on"
    uxsock_timeout 4000
    retrigger_tries 0
    retrigger_delay 10
    missing_uev_wait_timeout 30
    skip_kpartx "no"
    disable_changed_wwids "yes"
    remove_retries 0
    ghost_delay "no"
    find_multipaths_timeout -10
}
blacklist {
    devnode "^(ram|raw|loop|fd|md|sr|scd|st)[0-9]*"
    devnode "^hd[a-z][[0-9]*]"
    devnode "^sda[[0-9]*]"
    devnode "^cciss!c[0-9]d[0-9]*"
    devnode "pve-swap"
    devnode "pve-data"
    devnode "sda"
    devnode "^(ram|raw|loop|fd|md|dm-|sr|scd|st|dcssblk)[0-9]"
    devnode "^(td|hd|vd)[a-z]"
    device {
        vendor "SGI"
        product "Universal Xport"
    }
    device {
        vendor "^DGC"
        product "LUNZ"
    }
    device {
        vendor "EMC"
        product "LUNZ"
    }
    device {
        vendor "DELL"
        product "Universal Xport"
    }
    device {
        vendor "IBM"
        product "Universal Xport"
    }
    device {
        vendor "IBM"
        product "S/390"
    }
    device {
        vendor "(NETAPP|LSI|ENGENIO)"
        product "Universal Xport"
    }
    device {
        vendor "STK"
        product "Universal Xport"
    }
    device {
        vendor "SUN"
        product "Universal Xport"
    }
    device {
        vendor "(Intel|INTEL)"
        product "VTrak V-LUN"
    }
    device {
        vendor "Promise"
        product "VTrak V-LUN"
    }
    device {
        vendor "Promise"
        product "Vess V-LUN"
    }
}
blacklist_exceptions {
    property "(SCSI_IDENT_|ID_WWN)"
}
devices {
    # a bunch of devices that don't matter went here
    device {
        vendor "TrueNAS"
        product "iSCSI Dsk"
        path_grouping_policy "group_by_prio"
        hardware_handler "1 alua"
        prio_args "exclusive_pref_bit"
        rr_weight "priorities"
    }
}
overrides {
}
```

TrueNAS Implementation

ver 12.0-U6

NOTE: TrueNAS (at least this unit) does HA as Active/Passive

ZFS-over-iSCSI works, so I'm going to skip config documentation about datasets and zvols

Target Global Config: defaults, except that "Enable iSCSI ALUA" is checked

Portals: 1 configured portal for all iSCSI interfaces (2 on each TrueNAS node)

Initiator groups: configured for my Proxmox nodes. Again, ZFS-over-iSCSI works, so this shouldn't matter

Authorized Access: unconfigured

Targets: target is created, mapping the portal group to the initiator group

Extents + Associated Targets: 1 extent + associated target is created per VM disk via the freenas-proxmox plugin

Behavior:

VM disks can be created and destroyed, but that's because freenas-proxmox uses the FreeNAS API to create/destroy zvols, extents, and associated targets.

Before multipathd is configured, ZFS-over-iSCSI works fine for running a VM. The problem is that, as far as I can tell, Proxmox only uses the path through the single portal IP you give it in the WebGUI.
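Once multipathd is running, a quick way to see which path group is actually carrying I/O for each map is to summarize `multipath -ll` per path group. The parser below is a hypothetical helper, not part of multipath-tools; its regexes assume exactly the `multipath -ll` layout shown in this post and may need adjusting for other multipath-tools versions.

```python
import re
from dataclasses import dataclass, field

# Map header, e.g. "mpathb (36589cfc...) dm-6 TrueNAS,iSCSI Disk"
MAP_RE = re.compile(r"^(?P<name>\S+) \((?P<wwid>\w+)\) dm-\d+")
# Path group header, e.g. "|-+- policy='service-time 0' prio=50 status=active"
GROUP_RE = re.compile(r"policy='[^']*' prio=(?P<prio>-?\d+) status=(?P<status>\w+)")
# Path line, e.g. "| |- 3:0:0:1 sdf 8:80 active ready running"
PATH_RE = re.compile(r"\d+:\d+:\d+:\d+ (?P<dev>\S+) \d+:\d+ (?P<dm>\w+) (?P<checker>\w+)")


@dataclass
class PathGroup:
    prio: int
    status: str
    paths: list = field(default_factory=list)  # (device, checker state) tuples


def parse_path_groups(output):
    """Map each multipath map name (e.g. 'mpathb') to its list of PathGroups."""
    maps = {}
    groups = None
    for line in output.splitlines():
        if m := MAP_RE.match(line):
            groups = maps.setdefault(m.group("name"), [])
        elif groups is not None and (m := GROUP_RE.search(line)):
            groups.append(PathGroup(int(m.group("prio")), m.group("status")))
        elif groups and (m := PATH_RE.search(line)):
            # attach the path to the most recently seen path group
            groups[-1].paths.append((m.group("dev"), m.group("checker")))
    return maps


SAMPLE = """\
mpathb (36589cfc000000a896cf1f2ed9f2f239e) dm-6 TrueNAS,iSCSI Disk
size=32G features='1 queue_if_no_path' hwhandler='1 alua' wp=rw
|-+- policy='service-time 0' prio=50 status=active
| |- 3:0:0:1 sdf 8:80 active ready running
| `- 4:0:0:1 sdg 8:96 active ready running
`-+- policy='service-time 0' prio=0 status=enabled
  |- 6:0:0:1 sdh 8:112 failed faulty running
  `- 5:0:0:1 sdi 8:128 failed faulty running
"""

if __name__ == "__main__":
    for name, groups in parse_path_groups(SAMPLE).items():
        for pg in groups:
            ready = sum(1 for _, state in pg.paths if state == "ready")
            print(f"{name}: prio={pg.prio} status={pg.status} ready paths={ready}/{len(pg.paths)}")
```

Against the mpathb sample it prints `mpathb: prio=50 status=active ready paths=2/2` followed by `mpathb: prio=0 status=enabled ready paths=0/2`.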

Once multipathd is installed and configured, several things start occurring:

1 - multipathd seems to affect cluster health. The node I'm working on occasionally shows up as "?" in the WebGUI, yet Corosync can still communicate and `pvecm status` shows a healthy cluster.

2 - The WebGUI for the dev node gets very slow, to the point that it slows down my entire browser. CPU usage on my laptop and on the dev node is low. Maybe this is poor I/O on the dev node, since it is nested, but no VMs are running on it.

3 - with multipathd running, sometimes the node fails to do a graceful reboot, see attached screenshot

4 - syslog gets flooded with:

```
Nov 18 10:59:28 pxmx-test-1 kernel: .
Nov 18 10:59:28 pxmx-test-1 kernel: .
Nov 18 10:59:28 pxmx-test-1 kernel: .
Nov 18 10:59:28 pxmx-test-1 kernel: .
Nov 18 10:59:30 pxmx-test-1 kernel: .
Nov 18 10:59:30 pxmx-test-1 kernel: .
Nov 18 10:59:30 pxmx-test-1 kernel: .
Nov 18 10:59:30 pxmx-test-1 kernel: .
```

5 - MPIO seems to work, but ALUA has issues.

The output of `multipath -ll` is (finally) what I would expect: the active paths go to the active TrueNAS node, and the failed paths go to the passive TrueNAS node. Until I started using `prio_args exclusive_pref_bit`, the paths would either all be in the same TPG, or there would be a single path per TPG (I think from group_by_node_name), or there would be one TPG with 2 active paths + 1 faulty path and another TPG with a faulty path.

With the `multipath -ll` output posted above, VMs sometimes start but take forever, or never start at all. If I double-click the start task in the "Tasks" log, it looks like Proxmox has to rescan the iSCSI targets several times:

```
Rescanning session [sid: 1, target: iqn.2021-10.org.truenas.ctl:proxmox-vms-target, portal: 172.16.9.252,3260]
Rescanning session [sid: 2, target: iqn.2021-10.org.truenas.ctl:proxmox-vms-target, portal: 172.16.10.252,3260]
Rescanning session [sid: 3, target: iqn.2021-10.org.truenas.ctl:proxmox-vms-target, portal: 172.16.10.253,3260]
Rescanning session [sid: 4, target: iqn.2021-10.org.truenas.ctl:proxmox-vms-target, portal: 172.16.9.253,3260]
Rescanning session [sid: 1, target: iqn.2021-10.org.truenas.ctl:proxmox-vms-target, portal: 172.16.9.252,3260]
Rescanning session [sid: 2, target: iqn.2021-10.org.truenas.ctl:proxmox-vms-target, portal: 172.16.10.252,3260]
Rescanning session [sid: 4, target: iqn.2021-10.org.truenas.ctl:proxmox-vms-target, portal: 172.16.9.253,3260]
Rescanning session [sid: 3, target: iqn.2021-10.org.truenas.ctl:proxmox-vms-target, portal: 172.16.10.253,3260]
```

The rescanning takes 10 minutes.

If I roll back the node and reconfigure iSCSI to log in only to the active TrueNAS node, `multipath -ll` looks like:

```
mpathb (36589cfc000000a896cf1f2ed9f2f239e) dm-6 TrueNAS,iSCSI Disk
size=32G features='1 queue_if_no_path' hwhandler='1 alua' wp=rw
|-+- policy='service-time 0' prio=50 status=active
| |- 3:0:0:1 sdf 8:80 active ready running
| `- 4:0:0:1 sdg 8:96 active ready running
mpatha (36589cfc0000000f0e3747c8d6cc45c31) dm-5 TrueNAS,iSCSI Disk
size=32G features='1 queue_if_no_path' hwhandler='1 alua' wp=rw
|-+- policy='service-time 0' prio=50 status=active
| |- 4:0:0:0 sdc 8:32 active ready running
| `- 3:0:0:0 sdb 8:16 active ready running
```

VMs start quickly and without fail. I can even drop a path with `ifdown vlan10`, after which `multipath -ll` shows:

```
mpathb (36589cfc000000a896cf1f2ed9f2f239e) dm-6 TrueNAS,iSCSI Disk
size=32G features='1 queue_if_no_path' hwhandler='1 alua' wp=rw
|-+- policy='service-time 0' prio=50 status=active
| |- 3:0:0:1 sdf 8:80 active ready running
| `- 4:0:0:1 sdg 8:96 failed faulty running
mpatha (36589cfc0000000f0e3747c8d6cc45c31) dm-5 TrueNAS,iSCSI Disk
size=32G features='1 queue_if_no_path' hwhandler='1 alua' wp=rw
|-+- policy='service-time 0' prio=50 status=active
| |- 4:0:0:0 sdc 8:32 active ready running
| `- 3:0:0:0 sdb 8:16 failed faulty running
```

and the VMs still run.

This seems to be some kind of bad ALUA negotiation between the Proxmox dev node and the TrueNAS unit, but for the life of me I can't figure out what to do about it. Can anyone offer some guidance?
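For anyone trying to read the prio= numbers above: as far as I understand the multipath-tools alua prioritizer, it takes each path's ALUA asymmetric access state (low 4 bits of byte 0 in the REPORT TARGET PORT GROUPS descriptor) and maps it to a base priority: 50 active/optimized, 10 active/non-optimized, 5 LBA-dependent, 1 standby, 0 for everything else. It then adds 80 if the target sets the PREF bit (bit 7), but for an optimized path only when exclusive_pref_bit is given. The Python below is a hypothetical model of that logic written from my reading, not code taken from multipath-tools. Under this model, prio=0 on the passive node's paths would correspond to a state such as unavailable, offline, or transitioning rather than standby.

```python
# ALUA asymmetric access states (low 4 bits of the TPG descriptor's first byte)
AAS_OPTIMIZED = 0x0
AAS_NON_OPTIMIZED = 0x1
AAS_STANDBY = 0x2
AAS_UNAVAILABLE = 0x3
AAS_LBA_DEPENDENT = 0x4
AAS_OFFLINE = 0xE
AAS_TRANSITIONING = 0xF

PREF_BIT = 0x80  # "preferred" bit, bit 7 of the same byte

# Assumed base priorities per state; unlisted states fall back to 0.
BASE_PRIO = {
    AAS_OPTIMIZED: 50,
    AAS_NON_OPTIMIZED: 10,
    AAS_LBA_DEPENDENT: 5,
    AAS_STANDBY: 1,
}


def alua_prio(state_byte, exclusive_pref_bit=False):
    """Model the prio the alua prioritizer would assign to one path (assumption)."""
    aas = state_byte & 0x0F
    prio = BASE_PRIO.get(aas, 0)  # unavailable, offline, transitioning -> 0
    if state_byte & PREF_BIT and (aas != AAS_OPTIMIZED or exclusive_pref_bit):
        prio += 80  # preferred paths get bumped into their own, higher group
    return prio


if __name__ == "__main__":
    for label, byte in [
        ("active/optimized", AAS_OPTIMIZED),
        ("standby", AAS_STANDBY),
        ("unavailable", AAS_UNAVAILABLE),
        ("optimized + pref, exclusive_pref_bit", PREF_BIT | AAS_OPTIMIZED),
    ]:
        excl = "exclusive" in label
        print(f"{label}: prio={alua_prio(byte, exclusive_pref_bit=excl)}")
```

The +80 bump is what lets `group_by_prio` put preferred paths in a path group of their own when exclusive_pref_bit is set.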

I haven't tried migration or cloning yet; I figured I should take this one step at a time.

Happy to post any further info or logs.