First, let me disclose that I've searched the forum for this error, but I didn't see a "solution" that applies to my case.

Second, this is consumer hardware because I just want to try Proxmox and use it in my homelab setting.

Going to the issue...

I bought some new hardware and installed the latest Proxmox (8.4-1). After about 20 minutes of the system running I get this:

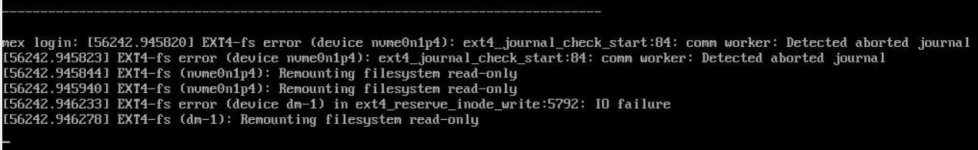

"EXT4-fs error (device dm-1): ext4_journal_check_start:84:"

"EXT4-fs (dm-1): Remounting filesystems read-only"

The disk in question is a brand new WD Black SN770 NVME 1TB.

I did the following tests to see if I could bypass the error:

- Tried the disk in the other M.2 slot: same problem.

- Tried adding the following to the /etc/default/grub file: GRUB_CMDLINE_LINUX_DEFAULT="quiet nvme_core.default_ps_max_latency_us=0 pcie_aspm=off" - same problem.

- Tried with a used Samsung SSD 840 Pro: with this one the system ran all night without problems, even with a VM running.

- Tried with a used WD Black SN750 NVME 500GB: same error as with the other NVME.

- I went through my BIOS (Gigabyte B550 AORUS ELITE V2) and everything looks OK, but to be honest I don't know what to look for in particular.

- "EXT4-fs error (device dm-1) in ext4_reserve_inode_write:5792: IO failure"

- "EXT4-fs error (device dm-1) in ext4_reserve_inode_write:5792: Journal has aborted"

- "EXT4-fs error (device dm-1) ext4_do_writepages: jbd2_start: 10181 pages, ino 6032890; err -5"

- "EXT4-fs (dm-1): Remounting filesystems read-only"

- "EXT4-fs (dm-1): Remounting filesystems read-only"

- "EXT4-fs error (device dm-1) in ext4_reserve_inode_write:5792: Journal has aborted"

- "EXT4-fs error (device dm-1): __ext4_find_entry:1683: inode #2500000: comm sh: reading directory lblock 0"

- "EXT4-fs (dm-1): Remounting filesystems read-only"

- "EXT4-fs (dm-1): Remounting filesystems read-only"
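For the GRUB line in the list above, here is a minimal sketch of how the change could be applied and then checked after a reboot. It assumes the host really boots through GRUB (a ZFS-on-UEFI install uses systemd-boot, where the parameters go in /etc/kernel/cmdline instead), and `has_param` is a hypothetical helper written for this post, not something that ships with Proxmox.

```shell
#!/bin/sh
# has_param is a hypothetical helper: it succeeds if PARAM ($2) appears as a
# whole word in the space-separated kernel command line CMDLINE ($1).
has_param() {
    case " $1 " in
        *" $2 "*) return 0 ;;
        *) return 1 ;;
    esac
}

# After editing /etc/default/grub, regenerate the GRUB config and reboot:
#   update-grub && reboot

# Then compare the running kernel's command line with what was requested.
if [ -r /proc/cmdline ]; then
    cmdline=$(cat /proc/cmdline)
    for p in nvme_core.default_ps_max_latency_us=0 pcie_aspm=off; do
        if has_param "$cmdline" "$p"; then
            echo "active:  $p"
        else
            echo "missing: $p"
        fi
    done
fi
```

If either parameter shows up as missing, the edit to /etc/default/grub never reached the running kernel, so the "same problem" result for that test would not say anything about power-saving states.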

I saw in other posts here that the general opinion is that "the disk is dying" or that "you should use enterprise hardware".

It's hard to believe that both NVME disks are about to die... that seems like too much of a coincidence to me. Regarding enterprise hardware... I get that it must be more stable and all of that. But what I'm describing is a system that crashes while just running, without any VMs or containers... the only thing I did was upload an ISO image...

Anyway... do you see anything that I could try, or any indication of what the problem is? In my ignorance, looking at this I can only think that either Proxmox has some issue with NVME disks or there is some NVME-related setting on my motherboard that I don't understand.
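Since "err -5" is a plain I/O error (EIO), the kernel log lines just before the first EXT4 message should say what happened to the drive itself. Below is a rough sketch of how that log could be narrowed down. `storage_lines` is a hypothetical helper, and whether `journalctl` still has the previous boot depends on the journal having been written before the root filesystem went read-only.

```shell
#!/bin/sh
# storage_lines is a hypothetical helper: it keeps only the lines on stdin
# that mention the NVMe driver, a PCIe port, or an EXT4 message.
storage_lines() {
    grep -iE 'nvme|pcieport|EXT4-fs'
}

# Possible uses (run as root on the Proxmox host):
#   dmesg -T | storage_lines              # current boot, readable timestamps
#   journalctl -k -b -1 | storage_lines   # previous boot, if it was persisted
```

Anything the nvme driver logged right before the first EXT4 error would probably be the most useful part to post here.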