Hi all

I upgraded my cluster from Proxmox VE 7.4.19 to 8.3.0.

To do this, I followed the Ceph upgrade guide (Ceph Pacific to Quincy) and then the node upgrade guide (Proxmox 7 to 8).

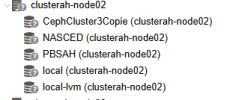

I followed the guides on 4 nodes and everything went well, but node 2 shows the image above upon reboot.

I tried to rebuild both the Ceph manager and the Ceph monitor, but the problem persists. How can I get the node back up and running without having to format it?

Before starting the upgrade, I ran the "pve7to8 --full" script; all checks passed and I received no warnings.

Thank you so much
