Hi All,

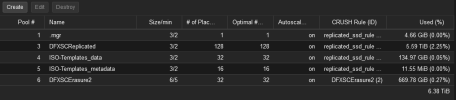

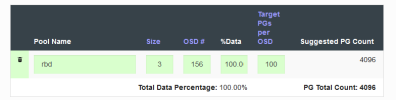

I have a 6-node Dell R740xd cluster running Proxmox 8.2.4 / Ceph 18.2.2 with 26 OSDs (a mix of Toshiba and Intel enterprise-grade 1.92 TB SSDs) and a dedicated 10G network (4 uplinks in a bond) for both the public and cluster networks. The RBD pools are replicated x3 and erasure-coded (EC) 5+1.
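For context on the two pool layouts, here is a minimal shell sketch of the storage overhead each one implies. It only does arithmetic on the replica count (3) and the EC profile (k=5, m=1) mentioned above; the `overhead` helper is a hypothetical name, and the note about OSDs per write assumes every write is a full stripe.

```shell
#!/bin/sh
# Raw bytes stored per client byte for each pool layout.
# Assumption: full-stripe writes; metadata and allocation overhead are ignored.

# overhead TOTAL_PARTS DATA_PARTS -> raw bytes written per client byte
overhead() {
    awk -v total="$1" -v data="$2" 'BEGIN { printf "%.2f\n", total / data }'
}

# Replica x3: 3 full copies, so 3 parts for every 1 part of data.
echo "replica x3: $(overhead 3 1)x raw per client byte, 3 OSDs per write"

# EC 5+1: 5 data chunks plus 1 coding chunk, so 6 parts for every 5 of data.
echo "EC 5+1:     $(overhead 6 5)x raw per client byte, 6 OSDs per full-stripe write"
```

The EC pool stores less raw data per client byte, but each write has to reach more OSDs before it is acknowledged, which may matter for latency-sensitive VM disks.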

Question:

We've started creating new Windows Server 2022/2019 VMs and have noticed they are extremely slow to work with: installing the OS takes a long time, and once you are inside the VM it is very laggy.

Here is the VM config:

Code:

# qm config 115

agent: 1

bios: ovmf

boot: order=scsi0;ide0;ide2;net0

cores: 5

cpu: host

efidisk0: DFXSCErasureStorage:vm-115-disk-0,efitype=4m,pre-enrolled-keys=1,size=1M

ide0: ISO-Templates:iso/virtio-win-0.1.248.iso,media=cdrom,size=715188K

ide2: ISO-Templates:iso/Win_Server_STD_CORE_2022_2108.36_X23-80100.ISO,media=cdrom,size=5827918K

machine: pc-q35-9.0

memory: 16384

meta: creation-qemu=9.0.0,ctime=1729083494

name: DFX.CE.UT.US.VM.NAPPS.TST.ROZ001.00001

net0: virtio=BC:24:11:1E:72:6C,bridge=ROZ001,firewall=1

numa: 0

ostype: win11

scsi0: DFXSCErasureStorage:vm-115-disk-1,cache=writeback,discard=on,size=60G

scsihw: virtio-scsi-pci

smbios1: uuid=1e9a06f3-e68d-472b-a104-0aced268e32c

sockets: 2

tpmstate0: DFXSCErasureStorage:vm-115-disk-2,size=4M,version=v2.0

vmgenid: a0b30c55-3760-4598-9ed6-d02b7d669441

Performance in the replicated x3 pool is also terrible.

I have tested deploying the VMs onto local-lvm, and performance there is good.

We've run iperf tests (the Ceph network is on MTU 9000) and the results are as expected:

Code:

# iperf -c 172.21.8.22 -e -i 1

------------------------------------------------------------

Client connecting to 172.21.8.22, TCP port 5001 with pid 1431026 (1 flows)

Write buffer size: 131072 Byte

TOS set to 0x0 (Nagle on)

TCP window size: 16.0 KByte (default)

------------------------------------------------------------

[ 1] local 172.21.8.21%ceph port 50206 connected with 172.21.8.22 port 5001 (sock=3) (icwnd/mss/irtt=87/8948/254) (ct=0.33 ms) on 2024-10-29 12:26:09 (MDT)

[ ID] Interval Transfer Bandwidth Write/Err Rtry Cwnd/RTT(var) NetPwr

[ 1] 0.0000-1.0000 sec 1.15 GBytes 9.88 Gbits/sec 9418/0 22 1773K/1081(44) us 1141939

[ 1] 1.0000-2.0000 sec 1.15 GBytes 9.88 Gbits/sec 9424/0 0 1773K/1214(12) us 1017481

[ 1] 2.0000-3.0000 sec 1.15 GBytes 9.88 Gbits/sec 9421/0 14 1616K/1105(26) us 1117493

[ 1] 3.0000-4.0000 sec 1.15 GBytes 9.88 Gbits/sec 9425/0 4 1756K/1274(26) us 969665

[ 1] 4.0000-5.0000 sec 1.15 GBytes 9.89 Gbits/sec 9429/0 18 1555K/1061(33) us 1164824

[ 1] 5.0000-6.0000 sec 1.15 GBytes 9.88 Gbits/sec 9424/0 8 1747K/984(36) us 1255307

[ 1] 6.0000-7.0000 sec 1.15 GBytes 9.90 Gbits/sec 9439/0 0 1747K/963(47) us 1284723

[ 1] 7.0000-8.0000 sec 1.15 GBytes 9.88 Gbits/sec 9425/0 0 1747K/1271(32) us 971954

[ 1] 8.0000-9.0000 sec 1.15 GBytes 9.88 Gbits/sec 9424/0 5 1669K/1156(52) us 1068532

[ 1] 9.0000-10.0000 sec 1.15 GBytes 9.88 Gbits/sec 9426/0 4 1747K/993(51) us 1244194

[ 1] 0.0000-10.0165 sec 11.5 GBytes 9.87 Gbits/sec 94257/0 75 1747K/953(56) us 1294234

I've followed this guide to test rbd and I don't see anything unusual (I might be wrong, so please correct me):

https://docs.redhat.com/en/document...ark#benchmarking-ceph-block-performance_admin

Code:

~# ceph osd pool create testbench 100 100

pool 'testbench' created

~# rados bench -p testbench 10 write --no-cleanup

hints = 1

Maintaining 16 concurrent writes of 4194304 bytes to objects of size 4194304 for up to 10 seconds or 0 objects

Object prefix: benchmark_data_DFX-VH-UT-US-VHOST0001_1431976

sec Cur ops started finished avg MB/s cur MB/s last lat(s) avg lat(s)

0 0 0 0 0 0 - 0

1 16 162 146 583.854 584 0.0428713 0.0871647

2 16 307 291 581.872 580 0.0404318 0.104417

3 16 490 474 631.875 732 0.0289956 0.093098

4 16 630 614 613.878 560 0.0407406 0.0884081

5 16 709 693 554.29 316 0.0440478 0.0855576

6 16 806 790 526.561 388 0.036311 0.0813596

7 16 965 949 542.176 636 0.0533337 0.115398

8 16 1123 1107 553.385 632 0.0285588 0.107204

9 16 1217 1201 533.667 376 0.0357533 0.103373

10 16 1276 1260 503.896 236 0.169554 0.100711

11 14 1276 1262 458.814 8 0.0396088 0.100616

12 14 1276 1262 420.58 0 - 0.100616

13 14 1276 1262 388.228 0 - 0.100616

14 14 1276 1262 360.498 0 - 0.100616

15 14 1276 1262 336.465 0 - 0.100616

Total time run: 15.1013

Total writes made: 1276

Write size: 4194304

Object size: 4194304

Bandwidth (MB/sec): 337.985

Stddev Bandwidth: 277.684

Max bandwidth (MB/sec): 732

Min bandwidth (MB/sec): 0

Average IOPS: 84

Stddev IOPS: 69.4209

Max IOPS: 183

Min IOPS: 0

Average Latency(s): 0.181353

Stddev Latency(s): 0.824122

Max latency(s): 8.89345

Min latency(s): 0.0274122

~# rados bench -p testbench 10 seq

hints = 1

sec Cur ops started finished avg MB/s cur MB/s last lat(s) avg lat(s)

0 0 0 0 0 0 - 0

1 16 339 323 1291.57 1292 0.0423749 0.0464794

2 16 654 638 1275.7 1260 0.0396979 0.0396754

3 16 987 971 1294.38 1332 0.0914942 0.0481208

Total time run: 3.94605

Total reads made: 1276

Read size: 4194304

Object size: 4194304

Bandwidth (MB/sec): 1293.45

Average IOPS: 323

Stddev IOPS: 9.0185

Max IOPS: 333

Min IOPS: 315

Average Latency(s): 0.04759

Max latency(s): 1.25676

Min latency(s): 0.0138378

~# rados bench -p testbench 10 rand

hints = 1

sec Cur ops started finished avg MB/s cur MB/s last lat(s) avg lat(s)

0 0 0 0 0 0 - 0

1 15 345 330 1319.3 1320 0.0220504 0.0458886

2 16 682 666 1331.51 1344 0.0484838 0.0460847

3 15 1022 1007 1342.18 1364 0.0657176 0.0463005

4 16 1363 1347 1346.58 1360 0.0272982 0.0463732

5 16 1711 1695 1355.61 1392 0.024731 0.0458902

6 16 2060 2044 1362.3 1396 0.0249081 0.0459957

7 16 2404 2388 1364.22 1376 0.0217204 0.0458798

8 16 2740 2724 1361.66 1344 0.0646662 0.0459278

9 16 3093 3077 1367.22 1412 0.026175 0.0458007

10 14 3445 3431 1372.07 1416 0.0401794 0.0456587

Total time run: 10.0358

Total reads made: 3445

Read size: 4194304

Object size: 4194304

Bandwidth (MB/sec): 1373.08

Average IOPS: 343

Stddev IOPS: 7.9085

Max IOPS: 354

Min IOPS: 330

Average Latency(s): 0.045714

Max latency(s): 0.353993

Min latency(s): 0.00458857

~# rados bench -p testbench 10 write -t 4 --run-name client1

hints = 1

Maintaining 4 concurrent writes of 4194304 bytes to objects of size 4194304 for up to 10 seconds or 0 objects

Object prefix: benchmark_data_DFX-VH-UT-US-VHOST0001_1433239

sec Cur ops started finished avg MB/s cur MB/s last lat(s) avg lat(s)

0 0 0 0 0 0 - 0

1 4 66 62 247.969 248 0.043376 0.0457508

2 4 123 119 237.962 228 0.0537846 0.0655272

3 4 135 131 174.637 48 0.0417172 0.0630803

4 4 187 183 182.966 208 0.0413069 0.0817256

5 4 254 250 199.962 268 0.0373117 0.079732

6 4 320 316 210.627 264 0.0535278 0.0758297

7 4 366 362 206.819 184 0.0402602 0.0771097

8 4 449 445 222.459 332 0.0395271 0.0709675

9 4 456 452 200.852 28 1.20324 0.0733661

10 4 520 516 206.361 256 0.034081 0.0771441

Total time run: 10.1

Total writes made: 520

Write size: 4194304

Object size: 4194304

Bandwidth (MB/sec): 205.942

Stddev Bandwidth: 97.1633

Max bandwidth (MB/sec): 332

Min bandwidth (MB/sec): 28

Average IOPS: 51

Stddev IOPS: 24.2908

Max IOPS: 83

Min IOPS: 7

Average Latency(s): 0.0773108

Stddev Latency(s): 0.156676

Max latency(s): 1.35768

Min latency(s): 0.0264381

Cleaning up (deleting benchmark objects)

Removed 520 objects

Clean up completed and total clean up time :0.800697

I also ran the block performance benchmark:

Code:

~# rbd bench --io-type write image01 --pool=testbench

bench type write io_size 4096 io_threads 16 bytes 1073741824 pattern sequential

SEC OPS OPS/SEC BYTES/SEC

1 101360 101780 398 MiB/s

2 182256 91315.4 357 MiB/s

3 254448 84931.6 332 MiB/s

elapsed: 3 ops: 262144 ops/sec: 84017.6 bytes/sec: 328 MiB/s

I hope you can help me identify where the issue could be.
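As a sanity check on the figures above, here is a small shell sketch that recomputes a few of them from the raw counts. It only does arithmetic on numbers copied from the outputs and does not talk to Ceph; the helper names (`ops_per_s`, `mib_per_s`, `gbit_to_mib`) are hypothetical, and it assumes the throughput units are MiB-based (1048576 bytes).

```shell
#!/bin/sh
# Cross-check of benchmark figures copied from the outputs above.

# ops_per_s TOTAL_OPS SECONDS -> average operations per second
ops_per_s() {
    awk -v ops="$1" -v secs="$2" 'BEGIN { printf "%.1f\n", ops / secs }'
}

# mib_per_s OPS_PER_SEC IO_BYTES -> throughput in MiB/s
mib_per_s() {
    awk -v ops="$1" -v size="$2" 'BEGIN { printf "%.1f\n", ops * size / 1048576 }'
}

# gbit_to_mib GBITS_PER_SEC -> MiB/s (1 Gbit = 10^9 bits)
gbit_to_mib() {
    awk -v g="$1" 'BEGIN { printf "%.1f\n", g * 1e9 / 8 / 1048576 }'
}

# rados bench write: total writes made / total time run
echo "rados write, 16 threads: $(ops_per_s 1276 15.1013) ops/s"
echo "rados write, 4 threads:  $(ops_per_s 520 10.1) ops/s"

# rbd bench: reported ops/sec times the 4096-byte io_size
echo "rbd bench 4K write:      $(mib_per_s 84017.6 4096) MiB/s"

# iperf: single-flow bandwidth expressed in the same unit
echo "iperf single flow:       $(gbit_to_mib 9.87) MiB/s"
```

The recomputed values line up with what the tools reported (Average IOPS of 84 and 51, and 328 MiB/s for `rbd bench`), so the summaries are at least internally consistent. Note that the `rados bench` runs use 4 MiB objects and the `rbd bench` run uses sequential 4 KiB writes with 16 threads, so neither may resemble the I/O pattern of a Windows guest.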

Regards

JP
