Hi,

I have a problem and I can't figure out the cause.

My configuration is:

3 x Lenovo Tiny, Intel Core i5 (8th gen)

Each Tiny has 16 GB RAM (expandable to 64 GB)

1 SSD with PVE installed

1 x 1 TB NVMe (planned for Ceph)

3 x USB 2.5 GbE NICs (one per Tiny)

Zyxel multi-gigabit L2 switch

My network configuration is:

1 GbE NIC for PVE management

2.5 GbE USB NIC for VM/CT traffic and cluster/Ceph

IP: 192.168.55.xx on each Tiny's 1 GbE admin NIC.

IP: 10.100.100.xx on each Tiny's 2.5 GbE NIC for cluster and Ceph.
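To double-check which link a given address uses (for example, an address a Ceph OSD or monitor reports), a minimal Python sketch like the one below can classify it. It assumes both networks are /24, since only `192.168.55.xx` and `10.100.100.xx` are given, and the host addresses in it are hypothetical examples, not the real ones.

```python
import ipaddress

# Assumption: both subnets are /24 (the post only gives 192.168.55.xx and 10.100.100.xx).
ADMIN_NET = ipaddress.ip_network("192.168.55.0/24")    # 1 GbE NIC, PVE management
CLUSTER_NET = ipaddress.ip_network("10.100.100.0/24")  # 2.5 GbE USB NIC, cluster/Ceph


def which_link(addr: str) -> str:
    """Return which NIC an address belongs to, based on the assumed subnets."""
    ip = ipaddress.ip_address(addr)
    if ip in CLUSTER_NET:
        return "2.5 GbE (cluster/Ceph)"
    if ip in ADMIN_NET:
        return "1 GbE (admin)"
    return "unknown"


if __name__ == "__main__":
    # Hypothetical addresses, for illustration only.
    for addr in ["10.100.100.11", "192.168.55.11"]:
        print(addr, "->", which_link(addr))
```

If an address that is supposed to carry Ceph traffic comes back as "1 GbE (admin)", that traffic is not using the 2.5 GbE link.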

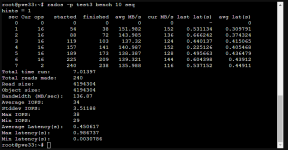

I tested disk performance with rados bench and it never goes above 110 MB/s!!

What is the problem? All OSD disk traffic goes over the 2.5 GbE LAN, so I expected the throughput to be well above that.
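For scale, 110 MB/s sits just under the raw capacity of a single 1 GbE link, while 2.5 GbE should allow about 2.5 times as much. A rough sketch of that arithmetic, assuming decimal megabytes (1 MB = 10^6 bytes) and ignoring Ethernet/TCP overhead; it only compares raw link speeds and does not by itself show where the bottleneck is.

```python
def line_rate_mb_s(gbit_per_s: float) -> float:
    """Convert a raw link speed in Gbit/s to MB/s (1 MB = 10**6 bytes), ignoring protocol overhead."""
    return gbit_per_s * 1000 / 8


OBSERVED_MB_S = 110.0  # rados bench result from the post

if __name__ == "__main__":
    for name, gbit in [("1 GbE", 1.0), ("2.5 GbE", 2.5)]:
        ceiling = line_rate_mb_s(gbit)
        # Share of the raw link capacity that the observed throughput represents.
        print(f"{name}: ~{ceiling:.1f} MB/s raw, observed is {OBSERVED_MB_S / ceiling:.0%} of it")
```

This gives about 125 MB/s for 1 GbE (110 MB/s is 88% of it) and about 312.5 MB/s for 2.5 GbE (110 MB/s is 35% of it).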

Any ideas?

Thx

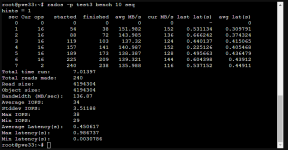