Hi there,

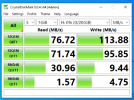

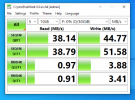

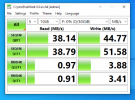

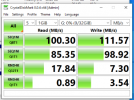

I am hoping someone can help me here. I have tried all sorts of storage options for Proxmox, all set up as shared storage, but I can't get my head round why they are all so terrible speed-wise. I have just set up an SMB share on a Windows box, and running CrystalDiskMark against it gives the speeds below:

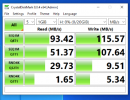

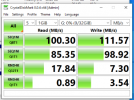

However, if I add this SMB share to Proxmox and then give another Windows VM a virtual hard disk on this storage, it gets this speed:

I have now tried several options, including NFS, CIFS/SMB and iSCSI, with iSCSI and CIFS/SMB served from FreeNAS, TrueNAS and OpenMediaVault.

Can anyone explain why disk performance gets so terrible after adding the storage to the Proxmox cluster? (It was added via the Datacentre > Storage menu.)

FYI, all of the storage types above give similarly poor performance, close to the speeds shown in the second screenshot.

The Proxmox version currently running is 7.0-8.

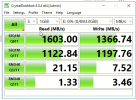

There is also a 4 Gb LAGG set up on the switch for both the storage box and the Proxmox host. The second photo is over a 1G link:

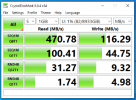

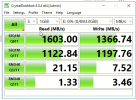

This is over the 4G link (not quite four times the 1G result either, which is strange):
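For a rough sanity check on the 1G vs 4G numbers, the arithmetic below may help. It is only a sketch under stated assumptions: 1 Gbit/s is at most 125 MB/s on the wire before protocol overhead, and a LAGG using hash-based balancing (e.g. LACP) typically sends a single flow over one member link, so one SMB/NFS/iSCSI connection may be capped at one link's speed rather than the aggregate. The function name `link_ceiling_mb_s` is hypothetical.

```python
def link_ceiling_mb_s(link_gbit: float, members: int = 1, single_flow: bool = True) -> float:
    """Theoretical line-rate ceiling in MB/s (decimal units), ignoring protocol overhead.

    Assumption: hash-based LAGG balancing, where a single flow uses only one
    member link; only multiple concurrent flows can use all members.
    """
    usable_links = 1 if single_flow else members
    bits_per_second = link_gbit * 1e9 * usable_links
    return bits_per_second / 8 / 1e6  # bits -> bytes -> MB


print(link_ceiling_mb_s(1))                                 # single 1G link
print(link_ceiling_mb_s(1, members=4))                      # one flow on a 4x1G LAGG
print(link_ceiling_mb_s(1, members=4, single_flow=False))   # many flows spread over 4x1G
```

Real throughput will be lower than these ceilings once TCP and storage-protocol overhead are included.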
