We have migrated multiple Windows VMs from Windows hosts to Proxmox (same hardware).

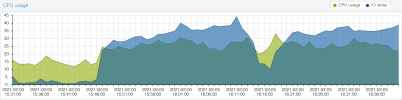

There were no performance problems on the Windows hosts, but the same hardware running Proxmox performs very poorly during heavy disk I/O in VMs or on the host.

We have tried different configurations:

1. ZFS on SSD

2. ZFS on HDD

3. LVM on HDD

Tried different settings for Windows guests (Writeback/No cache, etc.)

Tried changing dirty_background_bytes and dirty_bytes on the host

Tried moving VMs between hosts

Tried moving VMs and disks between storages (ZFS, LVM)

Tried moving VMs and disks between different disk types (SSD/HDD)
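For reference, the host writeback thresholds mentioned above can be checked with a short script. This is only a minimal sketch: the function name `read_vm_dirty_settings` and the `base` parameter are made up for illustration, and it assumes the standard Linux `/proc/sys/vm` layout. Note that the kernel reports the `*_ratio` value as 0 when the matching `*_bytes` value is set, and vice versa.

```python
from pathlib import Path

# Writeback-related sysctls exposed under /proc/sys/vm on Linux.
VM_KEYS = (
    "dirty_background_bytes",
    "dirty_bytes",
    "dirty_background_ratio",
    "dirty_ratio",
)


def read_vm_dirty_settings(base="/proc/sys/vm"):
    """Return the writeback thresholds found under `base` as a dict of ints.

    `base` is a hypothetical parameter so the function can be pointed at a
    test directory; keys whose files are missing are skipped.
    """
    settings = {}
    for key in VM_KEYS:
        path = Path(base) / key
        if path.exists():
            settings[key] = int(path.read_text().strip())
    return settings


if __name__ == "__main__":
    for name, value in read_vm_dirty_settings().items():
        print(f"{name} = {value}")
```

Running it before and after a change confirms that the new values are actually in effect on the host.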

Nothing helped. The problem appears on all hosts whenever any heavy disk operation is performed, e.g.:

1. copy large file in a VM

2. clone VM

3. create VM backup

4. replication
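To reproduce the load outside the GUI, something like the following rough sketch could be run on the host or in a guest. It is not a proper benchmark, only a timed sequential write followed by `fsync`. The function name `timed_sequential_write` and its parameters are hypothetical. Random data is used on the assumption that compressible data (e.g. zeros) could give misleading numbers on ZFS with compression enabled.

```python
import os
import tempfile
import time


def timed_sequential_write(directory, total_mib=1024, block_mib=1):
    """Write about total_mib MiB into a temp file in `directory`, fsync it,
    and return the observed throughput in MiB/s."""
    block = os.urandom(block_mib * 1024 * 1024)  # incompressible payload
    blocks = total_mib // block_mib
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        start = time.monotonic()
        with os.fdopen(fd, "wb") as f:
            for _ in range(blocks):
                f.write(block)
            f.flush()
            os.fsync(f.fileno())  # include the flush to disk in the timing
        elapsed = time.monotonic() - start
    finally:
        os.remove(path)  # always clean up the test file
    # Guard against a zero interval on very small writes.
    return (blocks * block_mib) / max(elapsed, 1e-9)


if __name__ == "__main__":
    # The target directory is an assumption; point it at the storage under test.
    rate = timed_sequential_write(tempfile.gettempdir(), total_mib=256)
    print(f"{rate:.1f} MiB/s")
```

Watching host I/O wait while this runs should show whether the stall also occurs without any VM involved.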

This is a real problem for us. We like Proxmox for its functionality, but may be forced to go back to Windows hosts because of this problem.

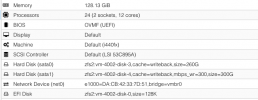

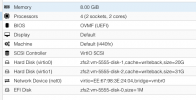

Example server config:

CPU(s) 48 x Intel(R) Xeon(R) CPU E5-2690 v3 @ 2.60GHz (2 Sockets)

Kernel Version Linux 5.4.73-1-pve #1 SMP PVE 5.4.73-1

PVE Manager Version pve-manager/6.2-15/48bd51b

RAM: 188 GB

Any clues?
