I have a system with 3 nodes and 1 OSD on each node.

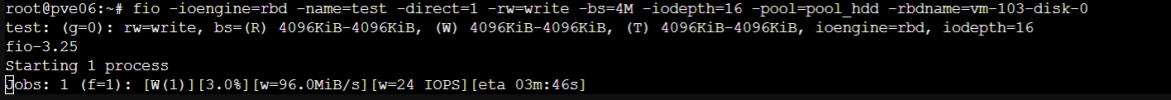

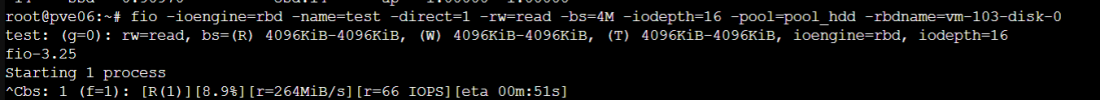

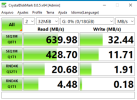

Performance is very slow, especially writes.

CrystalDiskMark: ~600 MB/s read / ~30 MB/s write.

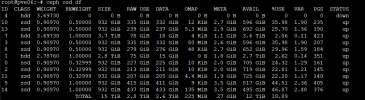

I set the DB disk to 2% and the WAL disk to 10%.

The Pool_HDD in question holds only one test VM.

The problem also occurs on pool_ssd, but that pool has VMs on it.

PVE CPU and RAM: OK

PVE version 7.4

Ceph 16.2.15

Any suggestions?

ceph.conf and CRUSH map:

[global]

auth_client_required = cephx

auth_cluster_required = cephx

auth_service_required = cephx

cluster_network = 10.0.5.2/28

fsid = 7556b799-7b87-40b3-b282-9d4ec7d248a3

mon_allow_pool_delete = true

mon_host = 10.0.5.3 10.0.5.5 10.0.5.4 10.0.5.2 10.0.5.13

ms_bind_ipv4 = true

ms_bind_ipv6 = false

osd_deep_scrub_interval = 1209600

osd_disk_threads = 128

osd_max_write_size = 1024MB

osd_op_threads = 256

osd_pool_default_min_size = 2

osd_pool_default_size = 3

osd_recovery_max_active = 120

public_network = 10.0.5.2/28

[client]

keyring = /etc/pve/priv/$cluster.$name.keyring

[mon.pve01]

public_addr = 10.0.5.2

[mon.pve02]

public_addr = 10.0.5.3

[mon.pve03]

public_addr = 10.0.5.5

[mon.pve05]

public_addr = 10.0.5.4

[mon.pve06]

public_addr = 10.0.5.13
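
The network settings in the ceph.conf above can be cross-checked with a small script. This is only a minimal sketch using Python's standard `ipaddress` module. The values are copied by hand from the `[global]` section, and the variable names are just for illustration. It normalizes the two networks, checks whether they are the same subnet, and checks that every address in `mon_host` falls inside it.

```python
import ipaddress

# Values copied by hand from the [global] section of the ceph.conf above.
public_network = "10.0.5.2/28"
cluster_network = "10.0.5.2/28"
mon_hosts = "10.0.5.3 10.0.5.5 10.0.5.4 10.0.5.2 10.0.5.13".split()

# strict=False accepts a host address and normalizes it to the network address.
public = ipaddress.ip_network(public_network, strict=False)
cluster = ipaddress.ip_network(cluster_network, strict=False)

# True when client traffic and OSD replication traffic share one subnet.
shared = public == cluster

# A /28 holds 16 addresses; subtract the network and broadcast addresses.
usable = public.num_addresses - 2

# Monitors whose address is not inside the public network (should be empty).
outside = [m for m in mon_hosts if ipaddress.ip_address(m) not in public]

print(f"public network:  {public}")
print(f"cluster network: {cluster}")
print(f"same subnet for both: {shared}")
print(f"usable host addresses: {usable}")
print(f"monitors outside the public network: {outside}")
```

With the values as posted, both networks normalize to the same /28, so public and cluster traffic share one subnet in this configuration.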

# begin crush map

tunable choose_local_tries 0

tunable choose_local_fallback_tries 0

tunable choose_total_tries 50

tunable chooseleaf_descend_once 1

tunable chooseleaf_vary_r 1

tunable chooseleaf_stable 1

tunable straw_calc_version 1

tunable allowed_bucket_algs 54

# devices

device 0 osd.0 class ssd

device 1 osd.1 class hdd

device 2 osd.2 class ssd

device 3 osd.3 class ssd

device 4 osd.4 class hdd

device 5 osd.5 class ssd

device 7 osd.7 class hdd

device 8 osd.8 class ssd

device 10 osd.10 class ssd

device 11 osd.11 class ssd

device 13 osd.13 class ssd

device 14 osd.14 class ssd

# types

type 0 osd

type 1 host

type 2 chassis

type 3 rack

type 4 row

type 5 pdu

type 6 pod

type 7 room

type 8 datacenter

type 9 zone

type 10 region

type 11 root

# buckets

host pve02 {

id -3 # do not change unnecessarily

id -4 class hdd # do not change unnecessarily

id -7 class ssd # do not change unnecessarily

# weight 5.517

alg straw2

hash 0 # rjenkins1

item osd.3 weight 0.910

item osd.8 weight 0.910

item osd.7 weight 3.697

}

host pve {

id -5 # do not change unnecessarily

id -6 class hdd # do not change unnecessarily

id -8 class ssd # do not change unnecessarily

# weight 0.000

alg straw2

hash 0 # rjenkins1

}

host pve03 {

id -10 # do not change unnecessarily

id -11 class hdd # do not change unnecessarily

id -12 class ssd # do not change unnecessarily

# weight 0.000

alg straw2

hash 0 # rjenkins1

}

host pve05 {

id -13 # do not change unnecessarily

id -14 class hdd # do not change unnecessarily

id -15 class ssd # do not change unnecessarily

# weight 5.517

alg straw2

hash 0 # rjenkins1

item osd.0 weight 0.910

item osd.2 weight 0.910

item osd.11 weight 0.910

item osd.1 weight 2.788

}

host pve01 {

id -16 # do not change unnecessarily

id -17 class hdd # do not change unnecessarily

id -18 class ssd # do not change unnecessarily

# weight 5.517

alg straw2

hash 0 # rjenkins1

item osd.10 weight 0.910

item osd.13 weight 0.910

item osd.4 weight 3.697

}

host pve06 {

id -19 # do not change unnecessarily

id -20 class hdd # do not change unnecessarily

id -21 class ssd # do not change unnecessarily

# weight 1.819

alg straw2

hash 0 # rjenkins1

item osd.5 weight 0.910

item osd.14 weight 0.910

}

root default {

id -1 # do not change unnecessarily

id -2 class hdd # do not change unnecessarily

id -9 class ssd # do not change unnecessarily

# weight 18.369

alg straw2

hash 0 # rjenkins1

item pve02 weight 5.517

item pve weight 0.000

item pve03 weight 0.000

item pve05 weight 5.517

item pve01 weight 5.517

item pve06 weight 1.819

}

# rules

rule replicated_rule {

id 0

type replicated

min_size 1

max_size 10

step take default

step chooseleaf firstn 0 type host

step emit

}

rule replicated_ssd {

id 1

type replicated

min_size 1

max_size 10

step take default class ssd

step chooseleaf firstn 0 type host

step emit

}

# end crush map

Balancer mode: upmap
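
To make the CRUSH map above easier to read, here is a minimal sketch that groups the OSDs by host and device class. It assumes the `device` and `item` lines are copied verbatim from the map (the empty hosts `pve` and `pve03`, the ids, and the tunables are left out). The `summarize` function and the `CRUSH_TEXT` name are hypothetical helpers, not part of any Ceph tool.

```python
import re
from collections import defaultdict

# Excerpt copied from the CRUSH map above: device classes and host buckets only.
CRUSH_TEXT = """
device 0 osd.0 class ssd
device 1 osd.1 class hdd
device 2 osd.2 class ssd
device 3 osd.3 class ssd
device 4 osd.4 class hdd
device 5 osd.5 class ssd
device 7 osd.7 class hdd
device 8 osd.8 class ssd
device 10 osd.10 class ssd
device 11 osd.11 class ssd
device 13 osd.13 class ssd
device 14 osd.14 class ssd
host pve02 {
item osd.3 weight 0.910
item osd.8 weight 0.910
item osd.7 weight 3.697
}
host pve05 {
item osd.0 weight 0.910
item osd.2 weight 0.910
item osd.11 weight 0.910
item osd.1 weight 2.788
}
host pve01 {
item osd.10 weight 0.910
item osd.13 weight 0.910
item osd.4 weight 3.697
}
host pve06 {
item osd.5 weight 0.910
item osd.14 weight 0.910
}
"""


def summarize(text):
    """Return {host: {device_class: [(osd_name, weight), ...]}}."""
    device_class = {}
    summary = defaultdict(lambda: defaultdict(list))
    host = None
    for raw in text.splitlines():
        line = raw.strip()
        m = re.match(r"device \d+ (osd\.\d+) class (\w+)$", line)
        if m:
            device_class[m.group(1)] = m.group(2)
            continue
        m = re.match(r"host (\S+) \{$", line)
        if m:
            host = m.group(1)  # entering a host bucket
            continue
        if line == "}":
            host = None  # leaving the current bucket
            continue
        m = re.match(r"item (osd\.\d+) weight ([\d.]+)$", line)
        if m and host:
            osd, weight = m.group(1), float(m.group(2))
            summary[host][device_class[osd]].append((osd, weight))
    return summary


summary = summarize(CRUSH_TEXT)
for host, classes in summary.items():
    for cls, osds in sorted(classes.items()):
        total = sum(weight for _, weight in osds)
        names = ", ".join(name for name, _ in osds)
        print(f"{host:6} {cls}: {len(osds)} OSD(s), weight {total:.3f} ({names})")
```

Read this way, the map has three `hdd` OSDs (osd.1, osd.4, osd.7), one each on pve05, pve01 and pve02, which matches the "3 nodes with 1 OSD each" description of the HDD pool. The remaining nine OSDs are class `ssd`. The map as posted defines rules for `default` and `default class ssd` only.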