Hello

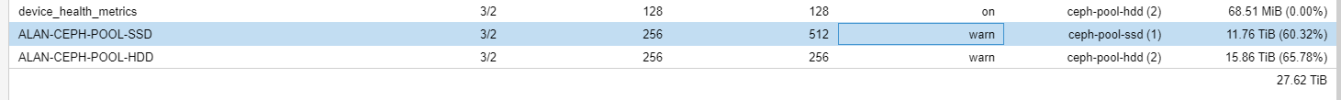

On one of our Ceph pools, the optimal number of PGs is shown as 512 (it is currently set to 256). See screenshot.

The free space on that pool is about 40%.

If I increase it to 512, is there a risk that the pool will fill up?

Is it recommended to do this during the weekend?

Thank you
