I have had pfSense working fine on this same hardware for years. I have been on the current Proxmox version since it was released, and pfSense had been on pfSense+ 22.x for months (pfSense CE before that). The one thing that changed is that Proxmox is now installed on ZFS (native Proxmox install) instead of RAID 1. pfSense got a fresh install (I did not move the old VM over to the new hypervisor install).

Since I started running production traffic on this new install, pfSense would stop relaying traffic to/from the hypervisor every few minutes. I'd IPMI into the hypervisor and see nothing in the logs, but the hypervisor couldn't ping pfSense over the LAN, much less reach the WAN.

I'd SSH into pfSense: nothing in the logs there either. pfSense could reach the WAN just fine, but it could not ping the host, although it gave a more informative error: "ping: sendto: No buffer space available". If I ran `ifconfig` down and then up on vtnet1 (the LAN adapter), pfSense could ping the hypervisor again, as mentioned in the Netgate docs, but the host still couldn't use pfSense to reach the WAN.
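The interface bounce mentioned above (the workaround from the Netgate docs) can be wrapped in a small POSIX sh function for the pfSense shell. This is only a sketch: `bounce_if`, `run` and the `DRY_RUN` switch are hypothetical names, and, as described above, bouncing vtnet1 only brought the ping back, not the host's WAN access.

```sh
# Hypothetical helper: print a command when DRY_RUN=1, otherwise execute it.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Hypothetical helper: take an interface down and bring it back up.
# Returns 2 (with a usage message on stderr) if no interface is given.
# Example (pfSense shell): bounce_if vtnet1
bounce_if() {
    if [ -z "$1" ]; then
        echo "usage: bounce_if <interface>" >&2
        return 2
    fi
    run ifconfig "$1" down && run ifconfig "$1" up
}
```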

The fix every time was simply rebooting pfSense; as soon as it was back up, things would work for another few minutes. So I switched the adapters from VirtIO to E1000, and the problem went away. But I can't keep using E1000: I have a gigabit connection, and E1000 maxes out at 180MB/s.
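For reference, the unit arithmetic behind that comparison, as a shell sketch (decimal units, 8 bits per byte, ignoring Ethernet/IP/TCP overhead; the function names are hypothetical). A gigabit link is 1000 Mbit/s, or 125 MB/s, so a 180 MB/s ceiling (1440 Mbit/s) would sit above gigabit line rate, while 180 Mbit/s (22.5 MB/s) would be well below it.

```sh
# Hypothetical helpers; integer arithmetic only, decimal units (1 MB = 8 Mbit).

# Mbit/s -> MB/s, printed with one decimal place (truncated, not rounded).
mbit_to_mbyte() {
    tenths=$(( $1 * 10 / 8 ))
    echo "$(( tenths / 10 )).$(( tenths % 10 ))"
}

# MB/s -> Mbit/s.
mbyte_to_mbit() {
    echo "$(( $1 * 8 ))"
}

# Example:
#   mbit_to_mbyte 1000   prints 125.0  (gigabit line rate)
#   mbit_to_mbyte 180    prints 22.5
#   mbyte_to_mbit 180    prints 1440
```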

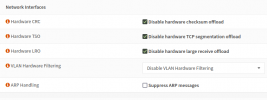

Please help me get VirtIO working again. Do I need to disable hardware checksum offload and hardware TCP segmentation offload on the physical Linux (i.e. Proxmox) side as well? Netgate lists this as something you may want to do, but I didn't do it on the previous install, which worked fine for years on this same hardware (the previous install also did not have the QEMU Guest Agent installed via this method). Why would it be needed now? That said, if there is no performance penalty, I'd do it.
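If you do want to try it on the Proxmox side, `ethtool -K` turns off checksum offload (`rx`/`tx`) and TCP segmentation offload (`tso`) per interface. A sketch, assuming you target the physical port, the bridges and/or the VM's tap devices (Proxmox names these `tap<vmid>i<index>`, e.g. `tap201i1` for net1 of VM 201); which of those actually matters here is an open question, not something established in this thread. The function name and the `DRY_RUN` switch are hypothetical.

```sh
# Hypothetical helper: disable rx/tx checksum offload and TCP segmentation
# offload on each interface given as an argument, using ethtool -K.
# Candidate interfaces (an assumption, not a confirmed fix): eno1, vmbr0,
# vmbr1, or the VM's tap devices, e.g. tap201i1 for net1 of VM 201.
# With DRY_RUN=1 the commands are printed instead of executed.
disable_offloads() {
    for iface in "$@"; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "ethtool -K $iface rx off tx off tso off"
        else
            ethtool -K "$iface" rx off tx off tso off || return 1
        fi
    done
}

# Example (as root on the Proxmox host):
#   DRY_RUN=1 disable_offloads eno1 tap201i1   # preview
#   disable_offloads eno1 tap201i1             # apply
```

Changes made with `ethtool -K` do not survive a reboot. For eno1 or the bridges, a `post-up ethtool -K <iface> rx off tx off tso off` line in the matching `iface` stanza of /etc/network/interfaces is one way to persist them; tap devices are recreated whenever the VM starts, so they would need handling separately.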

pve-manager/7.2-11/b76d3178 (running kernel: 5.15.60-2-pve)

pfSense+ 22.05-RELEASE (amd64), FreeBSD 12.3-STABLE. And of course, all of the hardware offload options are already disabled in pfSense (and always have been).
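To double-check on the pfSense side that the offloads really are off on vtnet1, the `options=<...>` line printed by FreeBSD's `ifconfig` lists the capabilities currently enabled on the interface (RXCSUM, TXCSUM, TSO4, LRO, ...). A small filter, as a sketch; `enabled_offloads` is a hypothetical name and the flag list is a selection:

```sh
# Hypothetical helper: read FreeBSD ifconfig output on stdin and print the
# checksum/TSO/LRO capabilities listed as enabled on the "options=" line.
# The flag list below is a selection, not every offload FreeBSD knows about.
# Example (pfSense shell): ifconfig vtnet1 | enabled_offloads
enabled_offloads() {
    sed -n 's/.*options=[0-9a-fA-F]*<\([^>]*\)>.*/\1/p' |
        tr ',' '\n' |
        grep -E '^(RXCSUM|TXCSUM|RXCSUM_IPV6|TXCSUM_IPV6|TSO4|TSO6|LRO)$' ||
        true
}
```

An empty result means none of the listed offloads is currently enabled on that interface.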

Host network config (`/etc/network/interfaces`):

```
source /etc/network/interfaces.d/*

auto lo
iface lo inet loopback

iface eno1 inet manual

iface eno2 inet manual

auto vmbr0
iface vmbr0 inet manual
        bridge-ports eno1
        bridge-stp off
        bridge-fd 0

auto vmbr1
iface vmbr1 inet static
        address 10.10.10.10/24
        gateway 10.10.10.1
        bridge-ports none
        bridge-stp off
        bridge-fd 0
```

Happy to supply the output of the following for whatever adapters are needed, but I don't believe they have been modified in any way, unless Proxmox set them that way:

```
ethtool -k
```
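When reading `ethtool -k <iface>` output, the lines that matter for this question are the checksum and segmentation/receive offload features. A sketch of a filter that prints only the top-level features from that group which are currently on (`offloads_on` is a hypothetical name, and the feature list is a selection, not exhaustive):

```sh
# Hypothetical helper: read `ethtool -k <iface>` output on stdin and print
# the checksum/segmentation/receive offload features that are "on".
# Only features from the list below are reported; tab-indented
# sub-features (e.g. tx-checksum-ipv4) are ignored because they are not listed.
# Example (Proxmox host): ethtool -k eno1 | offloads_on
offloads_on() {
    awk -F': ' '
        {
            name = $1
            gsub(/^[ \t]+/, "", name)   # strip indentation of sub-features
            state = $2
            sub(/ .*/, "", state)        # drop suffixes such as [fixed]
        }
        state == "on" && name ~ /^(rx-checksumming|tx-checksumming|tcp-segmentation-offload|generic-segmentation-offload|generic-receive-offload|large-receive-offload)$/ {
            print name
        }
    '
}
```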

```
# cat /etc/pve/qemu-server/201.conf
agent: 1
boot: order=ide0
cores: 4
cpu: host
ide0: local-zfs:vm-201-disk-0,size=12G
memory: 4096
meta: creation-qemu=7.0.0,ctime=1665785801
name: pfsense
net0: e1000=3A:...,bridge=vmbr0
net1: e1000=E6:...,bridge=vmbr1
numa: 0
onboot: 1
ostype: other
protection: 1
scsihw: virtio-scsi-single # switched from virtio-scsi-pci during tshooting
serial0: socket
serial1: socket
smbios1: uuid=d...
sockets: 1
startup: order=1,up=10
```

201.conf in the previous install:

```
agent: 0
boot: cd
bootdisk: virtio0
cores: 4
cpu: host
memory: 8192
name: pfsense
net0: virtio=62:1C:04:B6:E1:BF,bridge=vmbr0
net1: virtio=E6:80:45:08:75:96,bridge=vmbr1
numa: 0
onboot: 1
ostype: other
protection: 1
scsihw: virtio-scsi-pci
serial0: socket
serial1: socket
smbios1: uuid=7f48a867-a2cd-4023-80d8-5a12f5f1988f
sockets: 1
startup: order=1,up=90
vga: qxl
virtio0: zfs-storage-id:vm-201-disk-0,cache=writeback,size=8G
vmgenid: ea0d1691-b450-4392-8edd-785a67c573d6
```

I put a link to this issue in the pfSense forum, since the issue is most likely in Proxmox rather than the other way around: https://forum.netgate.com/topic/175377
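Besides the NIC model, the current and previous 201.conf also differ in the disk bus (ide0 vs virtio0), memory, agent and vga lines. A sketch for listing every such difference key by key; `conf_diff` is a hypothetical helper that assumes plain `key: value` lines and does not handle snapshot sections:

```sh
# Hypothetical helper: compare two Proxmox VM config files key by key and
# print "key: old -> new" for each differing key, using "(absent)" for a key
# present in only one file. Lines without ": " (e.g. blank lines) are skipped.
# Example: conf_diff old-201.conf /etc/pve/qemu-server/201.conf
#          (old-201.conf is a hypothetical saved copy of the previous config)
conf_diff() {
    awk '
        # Split a key/value line at the first ": "; returns 0 if there is none.
        function parse(line) {
            i = index(line, ": ")
            if (i == 0) return 0
            key = substr(line, 1, i - 1)
            val = substr(line, i + 2)
            return 1
        }
        FILENAME == old_file { if (parse($0)) old[key] = val; next }
        parse($0) {
            seen[key] = 1
            if (!(key in old))        print key ": (absent) -> " val
            else if (old[key] != val) print key ": " old[key] " -> " val
        }
        END {
            # Keys removed in the new file (printed in no particular order).
            for (k in old) if (!(k in seen)) print k ": " old[k] " -> (absent)"
        }
    ' old_file="$1" "$1" "$2"
}
```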
