Hello

I have a Proxmox cluster with 2 nodes.

Node 1 runs VMs and node 2 has around 80 CTs on it.

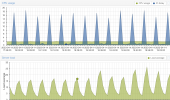

I’m facing an issue on node 2: the I/O delay jumps to 50% to 80% exactly every 5 minutes.

The storage is as follows:

- Boot disk / local-lvm: 1 TB Samsung EVO SSD (CTs are NOT stored on this; it is only used for boot)
- 5x 8 TB enterprise 7200 RPM HDDs (12 Gbit/s SAS) in RAID 5 on a Dell hardware RAID controller

The virtual disk (VD) is split into two 14 TB XFS partitions, and one of them is mounted at /mnt/pve/DATA.

This directory is added to Proxmox as storage of type "Directory", and the CT disks are stored on it. The second partition is currently unused and unmounted.

I tried to find a CT that might be overusing I/O, but couldn’t find any CT whose usage graph correlates with the rise in I/O delay.
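Eyeballing 80 per-CT graphs is error-prone, so one option is to diff each container's block I/O counters over the spike window itself. This is a minimal sketch that assumes a cgroup v2 host where Proxmox places each container's cgroup at `/sys/fs/cgroup/lxc/<CTID>/` (an assumption about the layout; adjust `CGROUP_ROOT` if yours differs). The `io.stat` fields (`rbytes`, `wbytes`, `rios`, `wios`, `dbytes`, `dios`) are the standard cgroup v2 interface. The helper names are hypothetical.

```python
import os
import time

# Assumed Proxmox cgroup v2 layout: one directory per CT ID under this root.
CGROUP_ROOT = "/sys/fs/cgroup/lxc"
FIELDS = ("rbytes", "wbytes", "rios", "wios", "dbytes", "dios")


def parse_io_stat(text):
    """Sum the standard io.stat counters across all devices listed in the file."""
    totals = {}
    for line in text.splitlines():
        for field in line.split()[1:]:  # first token is the MAJ:MIN device number
            key, _, value = field.partition("=")
            if key in FIELDS:  # skip optional debug/iocost fields that may not be integers
                totals[key] = totals.get(key, 0) + int(value)
    return totals


def snapshot(root=CGROUP_ROOT):
    """Read io.stat for every numeric CT directory under root."""
    stats = {}
    for ctid in os.listdir(root):
        path = os.path.join(root, ctid, "io.stat")
        if ctid.isdigit() and os.path.exists(path):
            with open(path) as f:
                stats[ctid] = parse_io_stat(f.read())
    return stats


def rank_by_delta(before, after, key="wios", top=10):
    """Return (delta, ctid) pairs sorted by how much `key` grew between snapshots."""
    deltas = []
    for ctid, cur in after.items():
        prev = before.get(ctid, {})
        deltas.append((cur.get(key, 0) - prev.get(key, 0), ctid))
    return sorted(deltas, reverse=True)[:top]


def top_containers(window=30, key="wios"):
    """Snapshot, wait `window` seconds, snapshot again, and rank the CTs."""
    before = snapshot()
    time.sleep(window)
    return rank_by_delta(before, snapshot(), key)
```

Calling `top_containers()` a few seconds before an expected spike and again during a quiet period would show whether any single CT's write operations jump during the spike. If none do, that points away from the containers and toward something host-level.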

I also looked at iotop: disk usage stays roughly the same during the periods of high I/O delay as it does outside them.

The spike happens exactly every 5 minutes.
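Because the spike is strictly periodic, logging the exact start time of each spike makes it easier to match against scheduled jobs (for example, the output of `systemctl list-timers` or the cron tables). This is a minimal sketch that assumes the I/O delay graph roughly reflects the kernel's iowait CPU time from `/proc/stat`; the 50% threshold and 1-second sampling interval are arbitrary choices, and the function names are hypothetical.

```python
import time


def parse_cpu_line(line):
    """Parse the aggregate 'cpu' line of /proc/stat into its first 8 counters.

    Order: user nice system idle iowait irq softirq steal (guest time is
    already included in user/nice, so it is left out of the total).
    """
    fields = line.split()
    if fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line")
    return [int(x) for x in fields[1:9]]


def read_cpu_times(path="/proc/stat"):
    with open(path) as f:
        return parse_cpu_line(f.readline())  # first line is the aggregate 'cpu' line


def iowait_fraction(prev, cur):
    """Share of CPU time spent in iowait between two samples."""
    deltas = [c - p for p, c in zip(prev, cur)]
    total = sum(deltas)
    return deltas[4] / total if total else 0.0


def spike_gaps(spike_times):
    """Seconds between consecutive spike start times."""
    return [b - a for a, b in zip(spike_times, spike_times[1:])]


def monitor(threshold=0.5, interval=1.0, duration=900):
    """Print each spike's start time, then the gaps between spikes."""
    prev = read_cpu_times()
    in_spike = False
    starts = []
    end = time.monotonic() + duration
    while time.monotonic() < end:
        time.sleep(interval)
        cur = read_cpu_times()
        frac = iowait_fraction(prev, cur)
        prev = cur
        if frac >= threshold and not in_spike:  # rising edge: a new spike begins
            starts.append(time.time())
            print(time.strftime("%H:%M:%S"), f"iowait {frac:.0%}")
        in_spike = frac >= threshold
    print("gaps between spikes (s):", [round(g) for g in spike_gaps(starts)])
```

Running `monitor()` on node 2 for 15 minutes should print around three spike start times. If the gaps are consistently close to 300 s, the printed timestamps can be compared directly with the next-run times of scheduled jobs on the host.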

Any help would be highly appreciated.