Hello guys,

This is becoming a really big problem. I run 20 VMs on one cluster and it is starting to lag (I am not sure the disks are the problem, but they are the only issue I can see right now).

Here is the situation:

I upgraded Ceph from Pacific to Quincy (no improvement).

The speed on Proxmox remains slow: 130 MB/s instead of 900 MB/s read and 6000 MB/s write, which are the numbers of the NVMe drive.

I installed the cluster exactly as shown in the video on the Proxmox site.

What is very strange: the speed inside the VM is what I expect (900 MB/s write and 6 GB/s read). However, the speed test (rados bench ...) shows 130 MB/s, and a copy from one OSD to another (in the same server) moves 8.4 GB in 1 minute, which is about 140 MB/s, so roughly the same. That is absolutely not acceptable.
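For anyone checking the copy figure, here is a minimal sketch of the arithmetic. It assumes decimal units (1 GB = 1000 MB) and that "1 minute" means exactly 60 seconds; the function name is only illustrative.

```python
def throughput_mb_per_s(size_gb: float, seconds: float) -> float:
    """Average throughput in MB/s, assuming decimal units (1 GB = 1000 MB)."""
    return size_gb * 1000 / seconds


# Figures from the post: 8.4 GB copied in 1 minute.
copy_rate = throughput_mb_per_s(8.4, 60)
print(f"OSD-to-OSD copy: {copy_rate:.0f} MB/s")
```

So the copy test and the benchmark land in the same range, which suggests both are hitting the same limit.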

The config is nothing out of the ordinary:

ceph.conf

[global]

auth_client_required = cephx

auth_cluster_required = cephx

auth_service_required = cephx

cluster_network = 10.10.10.10/24

fsid = a8939e3c-7fee-484c-826f-29875927cf43

mon_allow_pool_delete = true

mon_host = 10.10.11.10 10.10.11.11 10.10.11.12 10.10.11.13

ms_bind_ipv4 = true

ms_bind_ipv6 = false

osd_pool_default_min_size = 2

osd_pool_default_size = 3

public_network = 10.10.11.10/24

[client]

keyring = /etc/pve/priv/$cluster.$name.keyring

[mon.hvirt01]

public_addr = 10.10.11.10

[mon.hvirt02]

public_addr = 10.10.11.11

[mon.hvirt03]

public_addr = 10.10.11.12

[mon.hvirt04]

public_addr = 10.10.11.13
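A small sanity check of the network settings above, as a sketch using Python's standard `ipaddress` module. The values are copied from the ceph.conf; the variable names are only illustrative. Both `cluster_network` and `public_network` are written with host bits set (`.10/24`), so `strict=False` is used to get the actual subnet.

```python
import ipaddress

# Values copied from the ceph.conf above.
conf = {
    "cluster_network": "10.10.10.10/24",
    "public_network": "10.10.11.10/24",
}
mon_hosts = ["10.10.11.10", "10.10.11.11", "10.10.11.12", "10.10.11.13"]


def normalize(cidr: str) -> ipaddress.IPv4Network:
    """Return the subnet for a CIDR string, ignoring any host bits."""
    return ipaddress.ip_network(cidr, strict=False)


public = normalize(conf["public_network"])
cluster = normalize(conf["cluster_network"])

# Every monitor address should sit inside the public network.
mons_on_public = all(ipaddress.ip_address(h) in public for h in mon_hosts)

print("public subnet: ", public)
print("cluster subnet:", cluster)
print("all mons on public network:", mons_on_public)
print("networks overlap:", public.overlaps(cluster))
```

This only checks that the addresses are consistent with each other; it says nothing about which physical link each subnet uses.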

root@hvirt01:~# ceph status

cluster:

id: a8939e3c-7fee-484c-826f-29875927cf43

health: HEALTH_OK

services:

mon: 4 daemons, quorum hvirt01,hvirt02,hvirt03,hvirt04 (age 24m)

mgr: hvirt01(active, since 23m), standbys: hvirt02, hvirt04, hvirt03

osd: 8 osds: 8 up (since 20m), 8 in (since 8d)

data:

pools: 3 pools, 65 pgs

objects: 102.42k objects, 377 GiB

usage: 1.1 TiB used, 27 TiB / 28 TiB avail

pgs: 65 active+clean

io:

client: 0 B/s rd, 181 KiB/s wr, 0 op/s rd, 17 op/s wr

I don't understand why this number changes all the time: "pgs: 65 active+clean". I entered 254 (as far as I remember), like in the video.
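For reference, a sketch of the commonly quoted Ceph rule of thumb for the total PG count: about 100 PGs per OSD, divided by the replica size, rounded to the nearest power of two. The function name and the default of 100 are assumptions for illustration. Whether the PG autoscaler is the reason the number keeps changing here is not confirmed by anything in this post.

```python
def suggested_pg_count(num_osds: int, replica_size: int, pgs_per_osd: int = 100) -> int:
    """Rule-of-thumb total PG count, rounded to the nearest power of two."""
    target = num_osds * pgs_per_osd / replica_size
    # Largest power of two that does not exceed the target (at least 1).
    lower = 1 << (max(int(target), 1).bit_length() - 1)
    upper = lower * 2
    return lower if target - lower <= upper - target else upper


# Figures from this cluster: 8 OSDs, osd_pool_default_size = 3.
print(suggested_pg_count(8, 3))
```

With 8 OSDs and size 3 this gives a power of two close to the value I remember entering, and far above the 65 PGs that ceph status reports.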

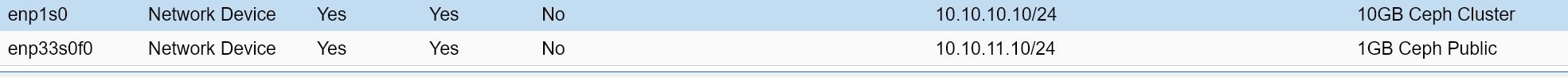

The Ceph cluster network is connected at 10 Gbit/s and the Ceph public network at 1 Gbit/s (but that should not matter, I guess).
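To put the two link speeds next to the benchmark result, here is a rough sketch that converts line rate to MB/s. It assumes decimal units and ignores protocol overhead, so real-world ceilings would be somewhat lower; the function name is only illustrative.

```python
def link_ceiling_mb_per_s(gbit_per_s: float) -> float:
    """Theoretical line rate in MB/s (decimal units, no protocol overhead)."""
    return gbit_per_s * 1000 / 8


measured_mb_per_s = 130  # rados bench result from this post

for name, gbit in (("public network", 1), ("cluster network", 10)):
    ceiling = link_ceiling_mb_per_s(gbit)
    ratio = measured_mb_per_s / ceiling
    print(f"{name}: {gbit} Gbit/s = {ceiling:.0f} MB/s ceiling, measured/ceiling = {ratio:.2f}")
```

The measured 130 MB/s sits very close to the 1 Gbit/s line rate. That may be a coincidence, but it might be worth checking which network the client traffic actually uses.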

There is nothing special to see in the Ceph logs:

2022-10-09T00:02:45.454941+0200 mgr.hvirt01 (mgr.2654568) 1071 : cluster [DBG] pgmap v846: 65 pgs: 65 active+clean; 377 GiB data, 1.1 TiB used, 27 TiB / 28 TiB avail; 2.7 KiB/s rd, 179 KiB/s wr, 19 op/s

2022-10-09T00:02:47.455200+0200 mgr.hvirt01 (mgr.2654568) 1073 : cluster [DBG] pgmap v847: 65 pgs: 65 active+clean; 377 GiB data, 1.1 TiB used, 27 TiB / 28 TiB avail; 0 B/s rd, 145 KiB/s wr, 18 op/s

2022-10-09T00:02:49.455464+0200 mgr.hvirt01 (mgr.2654568) 1074 : cluster [DBG] pgmap v848: 65 pgs: 65 active+clean; 377 GiB data, 1.1 TiB used, 27 TiB / 28 TiB avail; 0 B/s rd, 131 KiB/s wr, 17 op/s

2022-10-09T00:02:51.455786+0200 mgr.hvirt01 (mgr.2654568) 1076 : cluster [DBG] pgmap v849: 65 pgs: 65 active+clean; 377 GiB data, 1.1 TiB used, 27 TiB / 28 TiB avail; 0 B/s rd, 135 KiB/s wr, 18 op/s

2022-10-09T00:02:53.455985+0200 mgr.hvirt01 (mgr.2654568) 1078 : cluster [DBG] pgmap v850: 65 pgs: 65 active+clean; 377 GiB data, 1.1 TiB used, 27 TiB / 28 TiB avail; 0 B/s rd, 116 KiB/s wr, 16 op/s

2022-10-09T00:02:55.456249+0200 mgr.hvirt01 (mgr.2654568) 1079 : cluster [DBG] pgmap v851: 65 pgs: 65 active+clean; 377 GiB data, 1.1 TiB used, 27 TiB / 28 TiB avail; 0 B/s rd, 149 KiB/s wr, 19 op/s

2022-10-09T00:02:57.456490+0200 mgr.hvirt01 (mgr.2654568) 1081 : cluster [DBG] pgmap v852: 65 pgs: 65 active+clean; 377 GiB data, 1.1 TiB used, 27 TiB / 28 TiB avail; 0 B/s rd, 118 KiB/s wr, 12 op/s

2022-10-09T00:02:59.456755+0200 mgr.hvirt01 (mgr.2654568) 1083 : cluster [DBG] pgmap v853: 65 pgs: 65 active+clean; 377 GiB data, 1.1 TiB used, 27 TiB / 28 TiB avail; 2.7 KiB/s rd, 115 KiB/s wr, 9 op/s

2022-10-09T00:03:01.457058+0200 mgr.hvirt01 (mgr.2654568) 1084 : cluster [DBG] pgmap v854: 65 pgs: 65 active+clean; 377 GiB data, 1.1 TiB used, 27 TiB / 28 TiB avail; 2.7 KiB/s rd, 139 KiB/s wr, 10 op/s

2022-10-09T00:03:03.457255+0200 mgr.hvirt01 (mgr.2654568) 1086 : cluster [DBG] pgmap v855: 65 pgs: 65 active+clean; 377 GiB data, 1.1 TiB used, 27 TiB / 28 TiB avail; 2.7 KiB/s rd, 120 KiB/s wr, 8 op/s

2022-10-09T00:03:05.457539+0200 mgr.hvirt01 (mgr.2654568) 1087 : cluster [DBG] pgmap v856: 65 pgs: 65 active+clean; 377 GiB data, 1.1 TiB used, 27 TiB / 28 TiB avail; 2.7 KiB/s rd, 137 KiB/s wr, 10 op/s

2022-

This is becoming a serious issue. The performance is beyond terrible.

Any suggestions?

(I couldn't find any proper post about it.)

Please let me know if you need more data.

Thanks
