Hello Community,

I am currently in the process of migrating from a Windows Server-based host to Proxmox on my 2009 Intel Xeon X5560-based server. While setting up a TrueNAS VM, I've noticed some strange behavior regarding PCI passthrough.

System specs:

- Intel S5520HC motherboard
- 128 GB DDR3 ECC memory
- 2x Xeon X5560 CPU
- 125 GB SATA SSD to boot Proxmox from

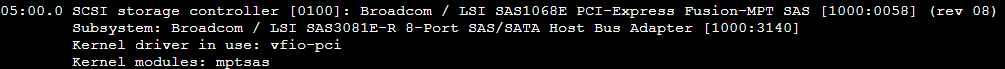

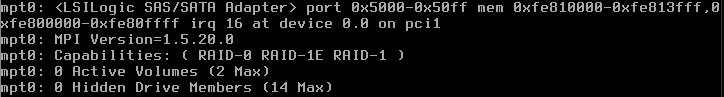

I run an LSI SAS1068E PCI-Express Fusion-MPT storage controller as an HBA with 6 disks attached. The plan is to pass this controller card directly into a TrueNAS VM. The controller is recognized in Proxmox in its own IOMMU group (no. 30, not shown here):

All attached disks are also visible in Proxmox when the controller is not passed to a VM, so the device seems to work fine here.

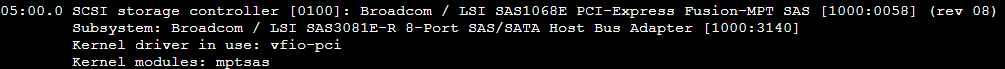
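For anyone who wants to check the IOMMU grouping on their own host, here is a minimal sketch that walks the sysfs tree and prints one line per device. The function name `list_iommu_groups` and its optional directory argument are my own additions for illustration; the default path `/sys/kernel/iommu_groups` is the standard sysfs location.

```shell
#!/bin/sh
# List every PCI device together with its IOMMU group number.
# The optional first argument (default: /sys/kernel/iommu_groups) exists
# only so the function can be pointed at a test directory.
list_iommu_groups() {
    root="${1:-/sys/kernel/iommu_groups}"
    for dev in "$root"/*/devices/*; do
        [ -e "$dev" ] || continue      # glob did not match: no groups present
        group="${dev%/devices/*}"      # strip the trailing "/devices/<address>"
        group="${group##*/}"           # keep only the group number
        printf 'group %s: %s\n' "$group" "${dev##*/}"
    done
}
```

Calling `list_iommu_groups` without arguments on the Proxmox host should show the HBA alone in its group if isolation is as described above.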

I had to change the following things in Proxmox to get the TrueNAS VM to even start with PCI device 05:00.0 passed through:

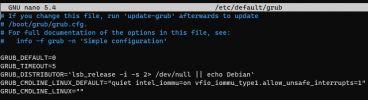

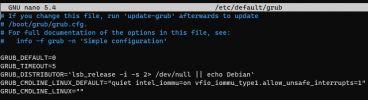

1. /etc/default/grub:

Note: Without vfio_iommu_type1.allow_unsafe_interrupts I get a "Permission denied" error when trying to start the VM.
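To confirm after a reboot that options set in /etc/default/grub really reached the running kernel, the kernel command line can be checked. This is only a sketch: `has_kernel_param` is a hypothetical helper name, and `intel_iommu=on` in the usage line below is an assumption (the usual option for Intel hosts), not something quoted from the screenshots.

```shell
#!/bin/sh
# Succeed if the given parameter appears as a whole word on the kernel
# command line. The second argument (default: /proc/cmdline) exists only
# so the check can be run against a sample file.
has_kernel_param() {
    # Put each space-separated word on its own line, then look for an
    # exact, fixed-string match of the whole line.
    tr -s ' ' '\n' < "${2:-/proc/cmdline}" | grep -qxF -- "$1"
}
```

Example: `has_kernel_param vfio_iommu_type1.allow_unsafe_interrupts=1 && echo active`, and likewise for `intel_iommu=on` if that option is in use.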

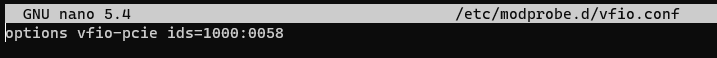

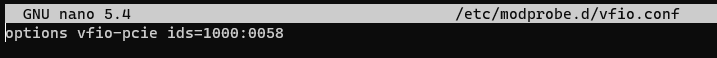

2. /etc/modprobe.d/vfio.conf:

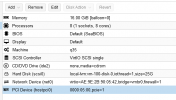

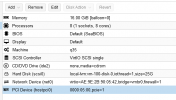

The TrueNAS VM uses SeaBIOS with the following settings (this is the only VM right now):

Now to the problem:

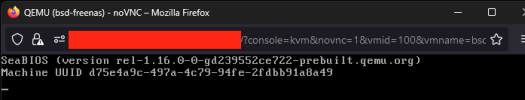

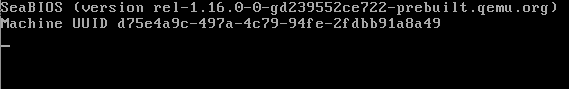

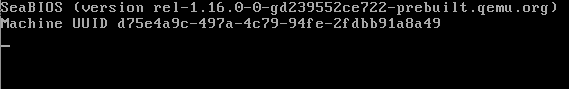

When I start this VM, it freezes in the BIOS here:

When I remove the PCI device (hostpci0), the VM starts up normally and boots from SCSI0 as expected.

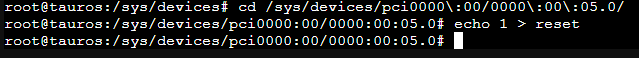

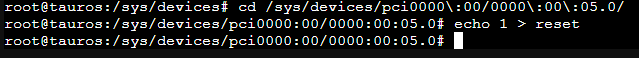

I've read somewhere that the PCI device may not be reset correctly for some reason. So I tried soft-resetting the LSI controller in Proxmox using...

... which had no effect.

BUT:

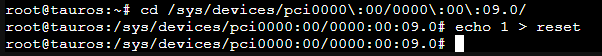

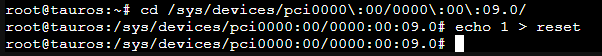

During testing I accidentally reset a different PCI device here (I initially navigated to the wrong device folder by mistake) ...

... and this caused the VM to start up (!!!)

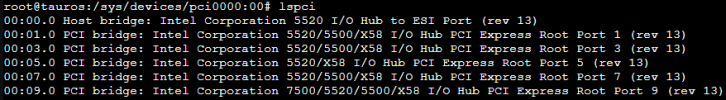

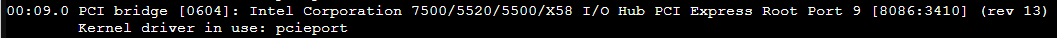

This PCI device ID seems to belong to this PCIe bridge:

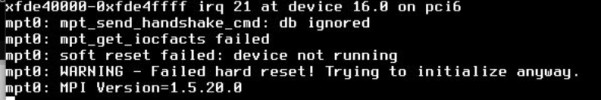

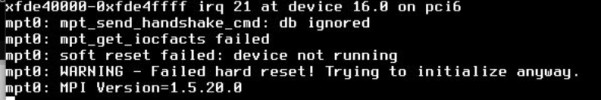
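To check which bridge sits directly upstream of a passed-through device, the sysfs symlink of the device can be resolved, since each device directory lives inside the directory of its parent bridge. A rough sketch, assuming PCI domain 0000 for the HBA at 05:00.0; `pci_parent_bridge` and its optional directory argument are names I made up for this example.

```shell
#!/bin/sh
# Print the PCI address of the bridge directly upstream of a device.
# /sys/bus/pci/devices/<addr> is a symlink into a tree in which every
# device directory sits inside the directory of its parent bridge.
# The second argument (default: /sys/bus/pci/devices) is for testing only.
pci_parent_bridge() {
    addr="$1"
    root="${2:-/sys/bus/pci/devices}"
    [ -e "$root/$addr" ] || return 1             # unknown device
    real="$(readlink -f "$root/$addr")" || return 1
    parent="${real%/*}"                          # directory containing the device
    parent="${parent##*/}"                       # last path component only
    case "$parent" in
        pci[0-9a-f]*) return 1 ;;                # root bus such as pci0000:00: no bridge above
        *) printf '%s\n' "$parent" ;;
    esac
}
```

Example: `pci_parent_bridge 0000:05:00.0` prints the address of the upstream bridge, which can then be compared with the device that was reset by accident.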

The LSI controller was then recognized in TrueNAS, but it reported the following during boot:

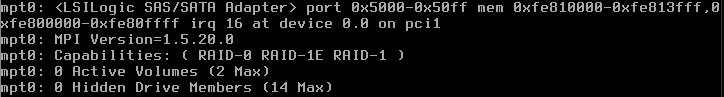

This behavior only occurs sometimes, though. During this boot, for example, the controller seemed to be recognized just fine by TrueNAS:

This way, the controller fully works in TrueNAS. I was even able to reimport my old storage pool.

I'm a bit in the dark here; maybe someone can explain this. Could it be a hardware problem?

Furthermore, my BIOS seems to support interrupt remapping, but dmesg complains here:

Code:

[ 1.049986] DMAR-IR: This system BIOS has enabled interrupt remapping

on a chipset that contains an erratum making that

feature unstable. To maintain system stability

interrupt remapping is being disabled. Please

contact your BIOS vendor for an update

This would explain why I need unsafe_interrupts in the GRUB options. I updated the BIOS of this machine to version R0069, the latest to date, before installing Proxmox (see here: https://www.intel.de/content/www/de/de/products/sku/36599/intel-server-board-s5520hc/downloads.html).
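A quick way to see what the kernel decided about interrupt remapping is to scan the log for the relevant DMAR-IR lines. The sketch below is an assumption-laden helper (`ir_status` is a made-up name): it matches the "is being disabled" text quoted above and the "DMAR-IR: Enabled IRQ remapping" line that kernels print when remapping is active, and reports "unknown" for anything else.

```shell
#!/bin/sh
# Read kernel log text on stdin and report the interrupt remapping state
# as one word: disabled, enabled, or unknown.
ir_status() {
    log="$(cat)"
    if printf '%s\n' "$log" | grep -q 'interrupt remapping is being disabled'; then
        echo disabled
    elif printf '%s\n' "$log" | grep -q 'DMAR-IR: Enabled IRQ remapping'; then
        echo enabled
    else
        echo unknown
    fi
}
```

Usage: `dmesg | ir_status`.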

Further dmesg outputs for IOMMU:

Code:

root@tauros:~# dmesg | grep DMAR

[ 0.019950] ACPI: DMAR 0x000000008F6ED000 0001D8 (v01 INTEL S5520HC 00000001 MSFT 0100000D)

[ 0.019983] ACPI: Reserving DMAR table memory at [mem 0x8f6ed000-0x8f6ed1d7]

[ 0.443891] DMAR: IOMMU enabled

[ 1.049986] DMAR-IR: This system BIOS has enabled interrupt remapping

[ 1.477445] DMAR: Host address width 40

[ 1.477447] DMAR: DRHD base: 0x000000fe710000 flags: 0x1

[ 1.477457] DMAR: dmar0: reg_base_addr fe710000 ver 1:0 cap c90780106f0462 ecap f020f7

[ 1.477460] DMAR: RMRR base: 0x0000008f62f000 end: 0x0000008f631fff

[ 1.477462] DMAR: RMRR base: 0x0000008f61a000 end: 0x0000008f61afff

[ 1.477464] DMAR: RMRR base: 0x0000008f617000 end: 0x0000008f617fff

[ 1.477465] DMAR: RMRR base: 0x0000008f614000 end: 0x0000008f614fff

[ 1.477466] DMAR: RMRR base: 0x0000008f611000 end: 0x0000008f611fff

[ 1.477468] DMAR: RMRR base: 0x0000008f60e000 end: 0x0000008f60efff

[ 1.477469] DMAR: RMRR base: 0x0000008f60b000 end: 0x0000008f60bfff

[ 1.477470] DMAR: RMRR base: 0x0000008f608000 end: 0x0000008f608fff

[ 1.477471] DMAR: RMRR base: 0x0000008f605000 end: 0x0000008f605fff

[ 1.477476] DMAR: ATSR flags: 0x0

[ 1.477509] DMAR: No SATC found

[ 1.477515] DMAR: dmar0: Using Queued invalidation

[ 1.488505] DMAR: Intel(R) Virtualization Technology for Directed I/O

Any input here would be appreciated. Using this reset method as a workaround to start the VM is not feasible in my opinion... all my other infrastructure depends on TrueNAS too much.

Proxmox version: 7.3-3

Thanks in advance
