Hi.

With MEGA configured as an S3 endpoint, Proxmox Backup Server (PBS) behaves strangely.

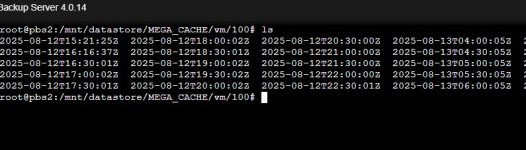

This is the content of my cache HD:

This is the content saved in MEGA:

For a single backup, there are several folders on MEGA but only one on the cache HD. I get errors when I try to prune or delete backups, and also when I try to refresh the contents from the bucket.

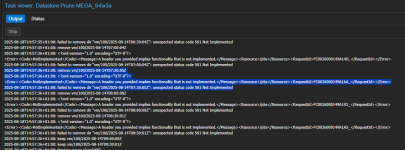

Error messages:

"unexpected status code 501 Not Implemented (400)."

"A header you provided implies functionality that is not implemented."
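The second message matches the wording AWS documents for the S3 `NotImplemented` error code (HTTP 501), which S3-compatible providers generally return when a request uses a feature they don't support. To see which error code the endpoint actually sends back, you can parse the XML error body. Below is a minimal sketch in Python using only the standard library. The sample body is a hypothetical example in the usual S3 `<Error>` format, filled in with the message quoted above. It was not captured from MEGA.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample body in the usual S3 <Error> format, using the message quoted
# above. It is NOT a captured MEGA response.
SAMPLE_ERROR_BODY = """<Error>
  <Code>NotImplemented</Code>
  <Message>A header you provided implies functionality that is not implemented.</Message>
</Error>"""


def parse_s3_error(body: str) -> dict[str, str]:
    """Return the child elements of an S3-style <Error> document as a dict."""
    root = ET.fromstring(body)
    fields: dict[str, str] = {}
    for child in root:
        # Drop a namespace prefix such as "{http://...}Code" if the provider adds one.
        tag = child.tag.rsplit("}", 1)[-1]
        fields[tag] = (child.text or "").strip()
    return fields


info = parse_s3_error(SAMPLE_ERROR_BODY)
print(info["Code"])  # prints "NotImplemented"
```

If a real response body from MEGA shows `NotImplemented`, then the request that PBS sends for prune, delete, or refresh most likely depends on something MEGA's S3 layer does not support. This is an inference, not a confirmed diagnosis.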