I have had this issue forever, but now I am frustrated enough to ask for help.

I have a PBS (Proxmox Backup Server) and 2x Proxmox hosts with several VMs. Ever since I started using the PBS, it crashes during or after a backup run. It's installed on a 3-disk ZFS "raid" (raidz1).

Sometimes it ran for a day or two, but more often than not it just hung and I had to reset it.

A couple of weeks or months ago I googled this issue and found somewhere in this forum (can't remember where) that I would need to set the zfs_arc_max param so that it would run through. I tried that and it was a little better (it ran for a few days), but it still crashes now.

These are my specs (the NVMe disk is currently not used):

System:

Host: proxmox-backup Kernel: 6.8.4-3-pve arch: x86_64 bits: 64 compiler: gcc v: 12.2.0

Console: pty pts/0 Distro: Debian GNU/Linux 12 (bookworm)

Machine:

Type: Desktop Mobo: ASUSTeK model: TUF GAMING X570-PLUS v: Rev X.0x serial: 200670021600043

UEFI: American Megatrends v: 5003 date: 10/07/2023

Memory:

RAM: total: 31.25 GiB used: 20.06 GiB (64.2%)

Array-1: capacity: 128 GiB slots: 4 EC: None max-module-size: 32 GiB note: est.

Device-1: DIMM_A1 type: no module installed

Device-2: DIMM_A2 type: no module installed

Device-3: DIMM_B1 type: DDR4 size: 16 GiB speed: 2133 MT/s

Device-4: DIMM_B2 type: DDR4 size: 16 GiB speed: 2133 MT/s

CPU:

Info: 12-core model: AMD Ryzen 9 3900X bits: 64 type: MT MCP arch: Zen 2 rev: 0 cache:

L1: 768 KiB L2: 6 MiB L3: 64 MiB

Speed (MHz): avg: 3800 min/max: 2200/4672 boost: enabled cores: 1: 3800 2: 3800 3: 3800

4: 3800 5: 3800 6: 3800 7: 3800 8: 3800 9: 3800 10: 3800 11: 3800 12: 3800 13: 3800 14: 3800

15: 3800 16: 3800 17: 3800 18: 3800 19: 3800 20: 3800 21: 3800 22: 3800 23: 3800 24: 3800

bogomips: 182059

Flags: avx avx2 ht lm nx pae sse sse2 sse3 sse4_1 sse4_2 sse4a ssse3

Graphics:

Device-1: AMD Navi 22 [Radeon RX 6700/6700 XT/6750 XT / 6800M/6850M XT] vendor: Tul / PowerColor

driver: amdgpu v: kernel arch: RDNA-2 bus-ID: 0e:00.0

Display: server: No display server data found. Headless machine? tty: 220x81

resolution: 2560x1440

API: OpenGL Message: GL data unavailable in console for root.

Audio:

Device-1: AMD Navi 21/23 HDMI/DP Audio driver: snd_hda_intel v: kernel bus-ID: 0e:00.1

Device-2: AMD Starship/Matisse HD Audio vendor: ASUSTeK driver: snd_hda_intel v: kernel

bus-ID: 10:00.4

API: ALSA v: k6.8.4-3-pve status: kernel-api

Network:

Device-1: Realtek RTL8125 2.5GbE driver: r8169 v: kernel port: c000 bus-ID: 06:00.0

IF: enp6s0 state: up speed: 2500 Mbps duplex: full mac: 00:e0:4c:2a:04:54

Device-2: Realtek RTL8125 2.5GbE driver: r8169 v: kernel port: b000 bus-ID: 07:00.0

IF: enp7s0 state: down mac: 00:e0:4c:2a:04:55

Device-3: Realtek RTL8111/8168/8411 PCI Express Gigabit Ethernet

vendor: ASUSTeK RTL8111/8168/8211/8411 driver: r8169 v: kernel port: d000 bus-ID: 08:00.0

IF: enp8s0 state: up speed: 1000 Mbps duplex: full mac: 24:4b:fe:06:03:1e

IF-ID-1: bonding_masters state: N/A speed: N/A duplex: N/A mac: N/A

RAID:

Device-1: rpool type: zfs status: ONLINE level: raidz1-0 raw: size: 21.8 TiB free: 16.7 TiB

zfs-fs: size: 14.41 TiB free: 10.98 TiB

Components: Online: 1: sda3 2: sdb3 3: sdc3

Drives:

Local Storage: total: raw: 22.35 TiB usable: 14.94 TiB used: 3.44 TiB (23.0%)

ID-1: /dev/nvme0n1 vendor: Samsung model: SSD 950 PRO 512GB size: 476.94 GiB temp: 39.9 C

ID-2: /dev/sda vendor: Western Digital model: WD80EFZZ-68BTXN0 size: 7.28 TiB

ID-3: /dev/sdb vendor: Western Digital model: WD80EFZZ-68BTXN0 size: 7.28 TiB

ID-4: /dev/sdc vendor: Western Digital model: WD80EFZZ-68BTXN0 size: 7.28 TiB

Partition:

ID-1: / size: 14.41 TiB used: 3.44 TiB (23.8%) fs: zfs logical: rpool/ROOT/pbs-1

Swap:

Alert: No swap data was found.

Sensors:

System Temperatures: cpu: 42.2 C mobo: N/A gpu: amdgpu temp: 45.0 C

Fan Speeds (RPM): N/A gpu: amdgpu fan: 0

Info:

Processes: 491 Uptime: 54m Init: systemd target: graphical (5) Compilers: N/A Packages: 511

Shell: Bash v: 5.2.15 inxi: 3.3.26

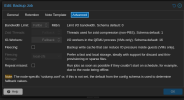

This is the config I changed back then:

root@proxmox-backup:~# cat /sys/module/zfs/parameters/zfs_arc_max

17179869184

root@proxmox-backup:~# cat /etc/modprobe.d/zfs.conf

options zfs zfs_arc_max=17179869184
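
For reference, zfs_arc_max is given in bytes, and the value above should work out to 16 GiB, a bit over half of the 31.25 GiB of RAM in this box. A quick sketch of the arithmetic, in case I got it wrong (the variable names are just for illustration and not part of any ZFS tooling):

```shell
# zfs_arc_max is specified in bytes; convert 16 GiB to bytes
arc_max_gib=16
arc_max_bytes=$((arc_max_gib * 1024 * 1024 * 1024))
echo "$arc_max_bytes"
```
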

This is the log, which unfortunately doesn't show anything suspicious before the reboot:

Aug 24 11:23:28 proxmox-backup proxmox-backup-proxy[1593]: host/archive/2024-08-16T19:00:36Z keep

Aug 24 11:23:28 proxmox-backup proxmox-backup-proxy[1593]: host/archive/2024-08-17T07:00:37Z keep

Aug 24 11:23:28 proxmox-backup proxmox-backup-proxy[1593]: host/archive/2024-08-24T07:00:35Z keep

Aug 24 11:23:28 proxmox-backup proxmox-backup-proxy[1593]: TASK OK

Aug 24 11:34:57 proxmox-backup smartd[1226]: Device: /dev/sdb [SAT], SMART Usage Attribute: 194 Temperature_Celsius changed from 125 to 124

Aug 24 11:34:57 proxmox-backup smartd[1226]: Device: /dev/sdc [SAT], SMART Usage Attribute: 194 Temperature_Celsius changed from 125 to 124

Aug 24 11:35:10 proxmox-backup proxmox-backup-proxy[1593]: write rrd data back to disk

Aug 24 11:35:10 proxmox-backup proxmox-backup-proxy[1593]: starting rrd data sync

Aug 24 11:35:10 proxmox-backup proxmox-backup-proxy[1593]: rrd journal successfully committed (25 files in 0.069 seconds)

Aug 24 12:04:57 proxmox-backup smartd[1226]: Device: /dev/sdc [SAT], SMART Usage Attribute: 194 Temperature_Celsius changed from 124 to 125

Aug 24 12:05:10 proxmox-backup proxmox-backup-proxy[1593]: write rrd data back to disk

Aug 24 12:05:10 proxmox-backup proxmox-backup-proxy[1593]: starting rrd data sync

Aug 24 12:05:10 proxmox-backup proxmox-backup-proxy[1593]: rrd journal successfully committed (25 files in 0.054 seconds)

Aug 24 12:10:01 proxmox-backup dhclient[1362]: DHCPREQUEST for 192.168.0.252 on enp8s0 to 192.168.0.1 port 67

Aug 24 12:10:01 proxmox-backup dhclient[1362]: DHCPACK of 192.168.0.252 from 192.168.0.1

Aug 24 12:10:01 proxmox-backup dhclient[1362]: bound to 192.168.0.252 -- renewal in 3529 seconds.

Aug 24 12:17:01 proxmox-backup CRON[2900]: pam_unix(cron:session): session opened for user root(uid=0) by (uid=0)

Aug 24 12:17:01 proxmox-backup CRON[2901]: (root) CMD (cd / && run-parts --report /etc/cron.hourly)

Aug 24 12:17:01 proxmox-backup CRON[2900]: pam_unix(cron:session): session closed for user root

Aug 24 12:35:10 proxmox-backup proxmox-backup-proxy[1593]: write rrd data back to disk

Aug 24 12:35:10 proxmox-backup proxmox-backup-proxy[1593]: starting rrd data sync

Aug 24 12:35:10 proxmox-backup proxmox-backup-proxy[1593]: rrd journal successfully committed (25 files in 0.098 seconds)

-- Reboot --

Aug 25 10:57:44 proxmox-backup kernel: Linux version 6.8.4-3-pve (build@proxmox) (gcc (Debian 12.2.0-14) 12.2.0, GNU ld (GNU Binutils for Debian) 2.40) #1 SMP PREEMPT_DYNAMIC PMX 6.8.4-3 (2024-05-02T11:55Z) ()

Aug 25 10:57:44 proxmox-backup kernel: Command line: initrd=\EFI\proxmox\6.8.4-3-pve\initrd.img-6.8.4-3-pve root=ZFS=rpool/ROOT/pbs-1 boot=zfs

If you need any more info, just ask; I am more than happy to provide it.

Thanks a lot!
