Hi team.

Posted this on reddit, but realised this is likely a better place.

Hopefully someone has seen this issue before. Just can't get this container to restore, so not sure if I should continue using PBS on this type of workload.

The resource is a container, running docker compose. 2 containers: Immich app + database. Storage is ZFS on the pve server and the pbs server.

Restoring over 1Gbe only.

6.8.12-1-pve

Backup Server 3.2-7

Restore logs

I have only found one reference to this issue, but wasn't quite the same, and there was no solution.

My second attempt at restoring failed on the same file. But that could be the first error of many. 37 minutes each attempt.

I have had no issues restoring other containers and VMs (up to 50GB), but have never tested this container, which is much bigger with a lot more files. Files are also 99% video/photo.

The datastore verification log is full of this;

Verification logs

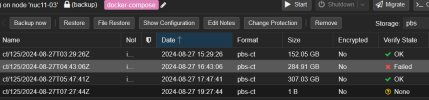

All backups in the chain fail verification. No I wasn't running verification until today. (2 weeks of backups)

I seem to be able to download individual files from the backup without issue, but will be downloading the entire folder I want when I get home just in case.

Edit: Third restore attempt failed on the same single file again.

PBS task ends with;

I posted this on Reddit, but realised this is probably a better place.

Hopefully someone has seen this issue before. I just can't get this container to restore, so I'm not sure whether I should keep using PBS for this type of workload.

The resource is an LXC container running Docker Compose with two containers: the Immich app and its database. Storage is ZFS on both the PVE server and the PBS server.

Restoring over 1GbE only.

PVE kernel: 6.8.12-1-pve

Proxmox Backup Server: 3.2-7

Restore logs:

```
recovering backed-up configuration from 'pbs:backup/ct/104/2024-08-26T02:05:12Z'
restoring 'pbs:backup/ct/104/2024-08-26T02:05:12Z' now..
Error: error extracting archive - encountered unexpected error during extraction: error at entry "IMG_0399.JPG": failed to extract file: failed to copy file contents: Failed to parse chunk fd1b048f2d5f3aa2bfd17333a077abb6069cc4c81195be42f0dbae2be8864505 - Data blob has wrong CRC checksum.
TASK ERROR: unable to restore CT 125 - command 'lxc-usernsexec -m u:0:100000:65536 -m g:0:100000:65536 -- /usr/bin/proxmox-backup-client restore '--crypt-mode=none' ct/104/2024-08-26T02:05:12Z root.pxar /var/lib/lxc/125/rootfs --allow-existing-dirs --repository root@pam@192.168.1.104:datastore' failed: exit code 255
```

I have only found one other reference to this issue, but it wasn't quite the same, and there was no solution.

My second restore attempt failed on the same file, though that could just be the first of many errors. Each attempt takes 37 minutes.
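Failing on the same file every time is consistent with the stored chunk itself being damaged rather than a transfer problem, because a checksum comparison gives the same answer on every read. A minimal Python sketch of that idea follows. The blob layout (8-byte magic, little-endian CRC32, payload) and the names `encode_blob`/`decode_blob` are hypothetical simplifications for illustration, not the actual PBS on-disk format.

```python
import struct
import zlib

# Hypothetical, simplified layout for illustration only (NOT the real PBS
# format): 8-byte magic, 4-byte little-endian CRC32 of the payload, payload.
MAGIC = b"DEMOBLOB"
HEADER = struct.Struct("<8sI")


def encode_blob(payload: bytes) -> bytes:
    """Prepend the magic and the CRC32 of the payload."""
    return HEADER.pack(MAGIC, zlib.crc32(payload)) + payload


def decode_blob(blob: bytes) -> bytes:
    """Return the payload, or raise ValueError if the header or CRC is wrong."""
    if len(blob) < HEADER.size:
        raise ValueError("blob too short")
    magic, stored_crc = HEADER.unpack_from(blob)
    if magic != MAGIC:
        raise ValueError("wrong magic number")
    payload = blob[HEADER.size:]
    if zlib.crc32(payload) != stored_crc:
        raise ValueError("Data blob has wrong CRC checksum")
    return payload


if __name__ == "__main__":
    stored = bytearray(encode_blob(b"...photo bytes..."))
    stored[-1] ^= 0x01  # simulate a single flipped bit in the stored payload
    # Every "restore attempt" reads the same damaged bytes, so each fails alike.
    for attempt in range(1, 4):
        try:
            decode_blob(bytes(stored))
        except ValueError as err:
            print(f"attempt {attempt}: {err}")
```

Running it prints the same CRC error for all three simulated attempts, since CRC32 always detects a single flipped bit.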

I have had no issues restoring other containers and VMs (up to 50 GB), but I had never tested this container, which is much bigger and holds far more files, 99% of them photos and videos.

The datastore verification log is full of entries like this:

```
2024-08-26T15:17:53+12:00: chunk fd1b048f2d5f3aa2bfd17333a077abb6069cc4c81195be42f0dbae2be8864505 was marked as corrupt
2024-08-26T15:17:55+12:00: verified 744.73/965.90 MiB in 1.85 seconds, speed 402.57/522.12 MiB/s (1 errors)
2024-08-26T15:17:55+12:00: verify datastore:ct/104/2024-08-12T16:00:17Z/root.pxar.didx failed: chunks could not be verified
2024-08-26T15:17:55+12:00: check catalog.pcat1.didx
```

Every backup in the chain (two weeks of backups) fails verification. And no, I wasn't running verification until today.
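To see which snapshots and chunks are affected without reading the whole verification log by hand, the lines can be grouped with a short script. This is a sketch in Python (3.8+ for `:=`), assuming the message formats quoted in the verification log stay stable and that chunks live under `<datastore>/.chunks/<first four hex digits>/<digest>`, a layout inferred only from the chunk download line in the PBS task log of this post. `summarize` and `chunk_path` are hypothetical helper names.

```python
import re
from pathlib import PurePosixPath

# Message formats copied from the verification log in this post (assumed stable).
CORRUPT_RE = re.compile(r"chunk ([0-9a-f]{64}) was marked as corrupt")
FAILED_RE = re.compile(r"verify \S+?:(\S+) failed: (.+)$")


def chunk_path(datastore: str, digest: str) -> PurePosixPath:
    """Assumed layout: <datastore>/.chunks/<first 4 hex chars>/<digest>."""
    return PurePosixPath(datastore, ".chunks", digest[:4], digest)


def summarize(log_lines, datastore="/datastore"):
    """Collect corrupt chunk digests (deduplicated) and failed index files."""
    corrupt = {}  # digest -> expected chunk path, in first-seen order
    failed = []   # (index file, reason)
    for line in log_lines:
        line = line.rstrip("\n")
        if m := CORRUPT_RE.search(line):
            corrupt.setdefault(m.group(1), chunk_path(datastore, m.group(1)))
        elif m := FAILED_RE.search(line):
            failed.append((m.group(1), m.group(2)))
    return corrupt, failed


if __name__ == "__main__":
    sample = [
        "2024-08-26T15:17:53+12:00: chunk fd1b048f2d5f3aa2bfd17333a077abb6069cc4c81195be42f0dbae2be8864505 was marked as corrupt",
        "2024-08-26T15:17:55+12:00: verify datastore:ct/104/2024-08-12T16:00:17Z/root.pxar.didx failed: chunks could not be verified",
    ]
    corrupt, failed = summarize(sample)
    for path in corrupt.values():
        print("corrupt chunk:", path)
    for index, reason in failed:
        print(f"failed: {index} ({reason})")
```

`summarize` accepts any iterable of lines, such as an open log file. Deduplicating digests matters here: if every snapshot in the chain references the same deduplicated chunk, it would explain why all of them fail verification, and the script would list that chunk once.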

Downloading individual files from the backup seems to work without issue, but I'll download the entire folder I need when I get home, just in case.

Edit: A third restore attempt failed on the same single file again.

The PBS task ends with:

```
2024-08-26T16:18:19+12:00: GET /chunk
2024-08-26T16:18:19+12:00: download chunk "/datastore/.chunks/fd1b/fd1b048f2d5f3aa2bfd17333a077abb6069cc4c81195be42f0dbae2be8864505"
2024-08-26T16:18:19+12:00: GET /chunk: 400 Bad Request: reading file "/datastore/.chunks/fd1b/fd1b048f2d5f3aa2bfd17333a077abb6069cc4c81195be42f0dbae2be8864505" failed: No such file or directory (os error 2)
```
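Note that the chunk verification marked as corrupt is now reported as missing. My understanding, not confirmed in this thread, is that PBS verification renames a chunk it marks as corrupt (reportedly to a `.bad`-suffixed name), which would explain the "No such file or directory". A small Python sketch to check this on the PBS host; the chunk layout is taken from the download log line, while the `<digest>.<n>.bad` naming is an assumption and `inspect_chunk` is a hypothetical helper.

```python
from pathlib import Path


def inspect_chunk(datastore: str, digest: str) -> dict:
    """Check a chunk file and look for renamed copies next to it.

    Assumptions: chunks live at <datastore>/.chunks/<first 4 hex>/<digest>,
    as in the download log, and a chunk marked corrupt may have been renamed
    to '<digest>.<n>.bad' (unverified naming).
    """
    chunk_dir = Path(datastore, ".chunks", digest[:4])
    chunk = chunk_dir / digest
    renamed = []
    if chunk_dir.is_dir():
        # Look for siblings matching the assumed '<digest>.<n>.bad' pattern.
        renamed = sorted(p.name for p in chunk_dir.glob(f"{digest}.*.bad"))
    return {"path": str(chunk), "exists": chunk.is_file(), "renamed_copies": renamed}


if __name__ == "__main__":
    digest = "fd1b048f2d5f3aa2bfd17333a077abb6069cc4c81195be42f0dbae2be8864505"
    print(inspect_chunk("/datastore", digest))
```

Run on the PBS host, this would show whether the original chunk file is gone and whether a renamed copy is sitting next to it.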
