Hi there,

I'm hitting an error that I don't know how to solve - let me explain. I'm on PBS 4.0.14 btw.

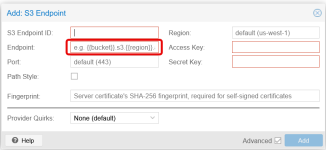

I'm trying to connect my Backblaze B2 storage (https://www.backblaze.com/cloud-storage) to the new S3 option in PBS. I managed to set up the S3 endpoint and then moved on to creating a datastore. Selecting the S3 endpoint also automatically selects the correct bucket - so PBS is talking to Backblaze to retrieve the available buckets (I think).

When I click "Add", all I get is this error: "failed to access bucket: unexpected status code 405 Method Not Allowed (400)".

To debug this, I installed the AWS CLI locally, configured the credentials and tried listing the bucket's contents with this command:

```shell
aws --endpoint-url https://s3.eu-central-003.backblazeb2.com --region eu-central-003 s3 ls s3://<bucket-name>
```

That works: it lists the image I uploaded to the bucket. The credentials are the same ones I entered in PBS, so I don't think they're the problem.

Is there anything I'm doing obviously wrong? And how can I debug this further / get it fixed?
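One way to narrow this down further: `s3 ls` only exercises listing, while PBS may send other kinds of requests when it checks the bucket during "Add" (which requests it actually sends is an assumption here, not something established above). The POSIX shell sketch below runs a handful of basic S3 operations through the AWS CLI against the same endpoint and region and reports which ones succeed. The names `run_check`, `probe_b2` and the object key `pbs-probe-test-object` are hypothetical. The put/get/delete checks write, read back and delete an empty object, so run it against a bucket where that is acceptable; depending on the bucket's lifecycle settings, B2 may keep a hidden version of that object.

```shell
#!/bin/sh
# Sketch: probe which basic S3 operations a Backblaze B2 endpoint accepts,
# using the AWS CLI with the same key pair that was entered in PBS.
# run_check, probe_b2 and the test object key are hypothetical names.

# Run one AWS CLI command quietly and print whether it succeeded.
# Usage: run_check <label> <aws arguments...>
run_check() {
  check_label="$1"
  shift
  if aws "$@" >/dev/null 2>&1; then
    echo "OK      $check_label"
  else
    echo "FAILED  $check_label"
  fi
}

# Usage: probe_b2 <endpoint-url> <region> <bucket-name>
# The put/get/delete checks write, read back and then delete an empty object.
probe_b2() {
  b2_endpoint="$1"
  b2_region="$2"
  b2_bucket="$3"
  b2_key="pbs-probe-test-object"

  run_check "list objects" --endpoint-url "$b2_endpoint" --region "$b2_region" \
    s3api list-objects-v2 --bucket "$b2_bucket" --max-keys 1
  run_check "head bucket" --endpoint-url "$b2_endpoint" --region "$b2_region" \
    s3api head-bucket --bucket "$b2_bucket"
  run_check "put object" --endpoint-url "$b2_endpoint" --region "$b2_region" \
    s3api put-object --bucket "$b2_bucket" --key "$b2_key" --body /dev/null
  run_check "get object" --endpoint-url "$b2_endpoint" --region "$b2_region" \
    s3api get-object --bucket "$b2_bucket" --key "$b2_key" /dev/null
  run_check "delete object" --endpoint-url "$b2_endpoint" --region "$b2_region" \
    s3api delete-object --bucket "$b2_bucket" --key "$b2_key"
}
```

Example invocation, with the endpoint and region from the `s3 ls` command: `probe_b2 https://s3.eu-central-003.backblazeb2.com eu-central-003 <bucket-name>`. For any check that fails, rerunning that single `aws` command by hand with the CLI's global `--debug` option prints the raw HTTP requests and response status codes, which shows exactly which request the endpoint rejects.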