Hello!

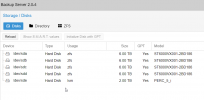

I have a small Proxmox Backup Server (PBS) installation that has been running for almost a year, with 4 disks in ZFS RAIDZ-1.

At one point I forgot to clean up old backups and the pool ran out of space, after which the machine would no longer boot.

So I installed a fresh PBS on a separate pair of disks and then re-imported the "rpool" pool from the original disks.

Current PBS setup:

2 x 2T SATA, ZFS raid1 (boot, the current PBS system)

4 x 4T SATA, raidz1 (the old pool; the /rpool/datastore dataset mounted OK)

Output of zpool list:

    root@pbs1211:/rpool/nfs/dump# zpool list
    NAME   SIZE   ALLOC  FREE  CKPOINT  EXPANDSZ  FRAG  CAP  DEDUP  HEALTH  ALTROOT
    rpool  21.8T  21.7T  178G  -        -         68%   99%  1.00x  ONLINE  -
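In hindsight, a periodic capacity check would probably have caught this before the pool filled up. Below is a minimal sketch of such a check, not something that was running on this system. It assumes the input comes from `zpool list -H -o name,capacity` (one tab-separated line per pool); the helper name `check_pool_capacity` and the 80% limit are arbitrary choices for illustration.

```shell
#!/bin/sh
# Warn about every pool whose capacity is at or above a threshold.
# Reads "name<TAB>capacity" lines on stdin, as produced by:
#   zpool list -H -o name,capacity
# check_pool_capacity is a hypothetical helper name, not part of ZFS or PBS.
check_pool_capacity() {
    threshold="${1:-80}"    # percent; 80 is an assumed default
    awk -v limit="$threshold" '
        {
            cap = $2
            sub(/%$/, "", cap)    # "99%" -> "99"
            if (cap + 0 >= limit + 0)
                printf "WARNING: pool %s is %d%% full (limit %d%%)\n", $1, cap, limit
        }'
}

# Real use (assumes the zpool CLI is installed):
#   zpool list -H -o name,capacity | check_pool_capacity 80

# Demonstration with the CAP value from the listing above:
printf 'rpool\t99%%\n' | check_pool_capacity 80
```

Run from cron with the output mailed to root, something like this could serve as an early warning; the right threshold depends on how quickly the datastore grows.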

I managed to re-import the datastore configuration, and the datastore is now accessible through the web interface of the new installation.

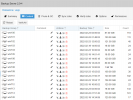

However, I cannot free any space or remove old items from the shell. I tried to delete some files that are not part of the datastore, using these commands:

    # cat /dev/null > /file/to/delete
    # rm /file/to/delete

Neither worked; both fail with "No space left on device":

    root@pbs1211:/rpool/nfs/dump# rm vzdump-qemu-702-2021_07_03-19_00_03.vma.lzo
    rm: cannot remove 'vzdump-qemu-702-2021_07_03-19_00_03.vma.lzo': No space left on device
    root@pbs1211:/rpool/nfs/dump# cat /dev/null > vzdump-qemu-702-2021_07_03-19_00_03.vma.lzo
    -bash: vzdump-qemu-702-2021_07_03-19_00_03.vma.lzo: No space left on device

Any ideas or help?

Thanks!

![2022-03-26 15_49_07-mc [root@pbs1211]_~_VMRescate.png](/data/attachments/35/35175-8a833e9832cf191fe66a4ba18063e0f8.jpg?hash=ioM-mDLPGR)

![2022-03-26 15_56_53-mc [root@pbs1211]_~_VMRescate.png](/data/attachments/35/35176-35103e9350fa70f8766e0043439c7778.jpg?hash=NRA-k1D6cP)